Investigating the Effectiveness of Explainability Methods in Parkinson's Detection from Speech

Eleonora Mancini*, Francesco Paissan*, Paolo Torroni, Mirco Ravanelli, Cem Subakan

*Both authors contributed equally to this research. For these authors, the order is alphabetical.

Description

Project webpage for the paper, accepted at the SPADE Workshop at ICASSP’25. The code of this project is available here.

Abstract

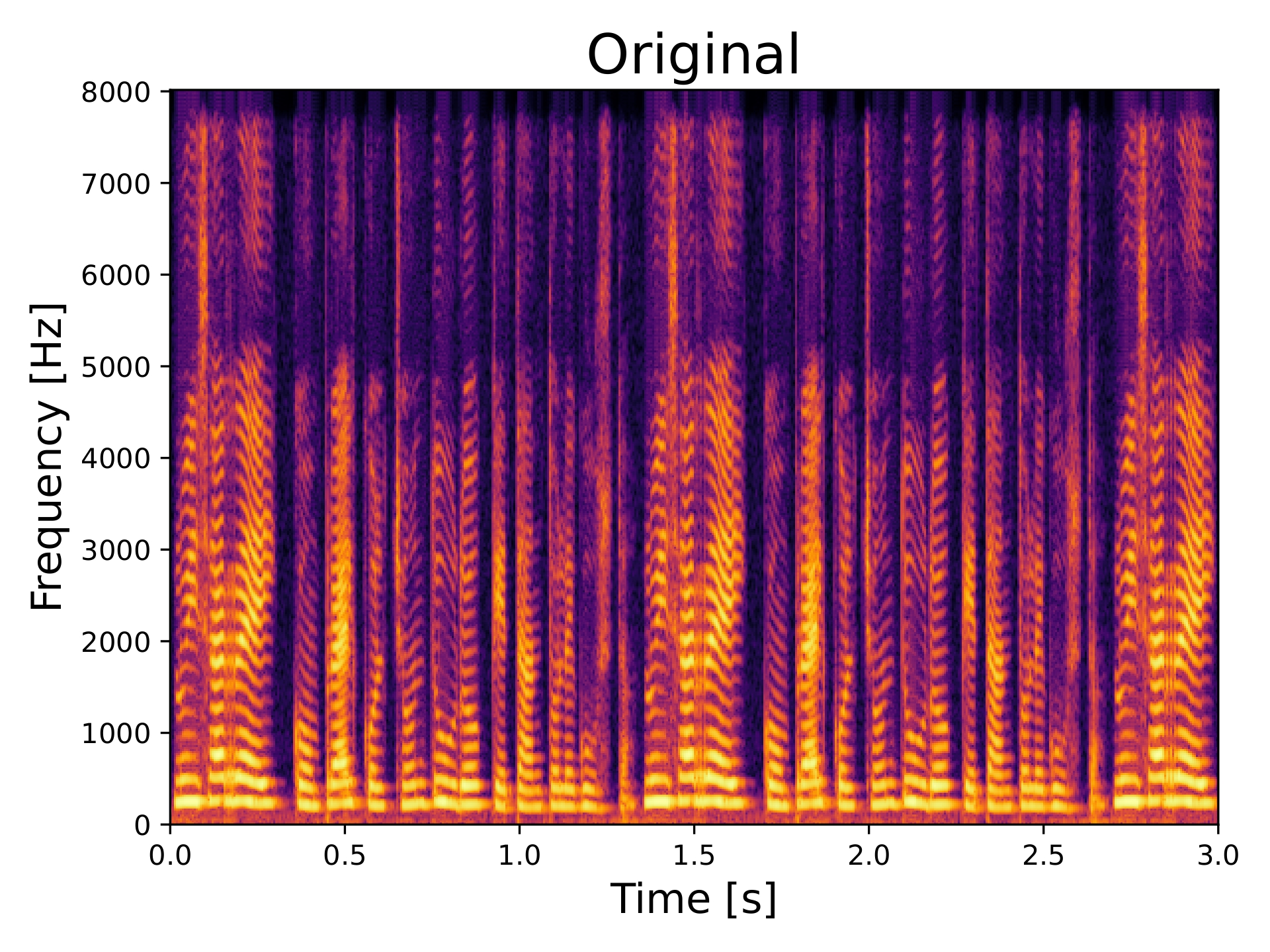

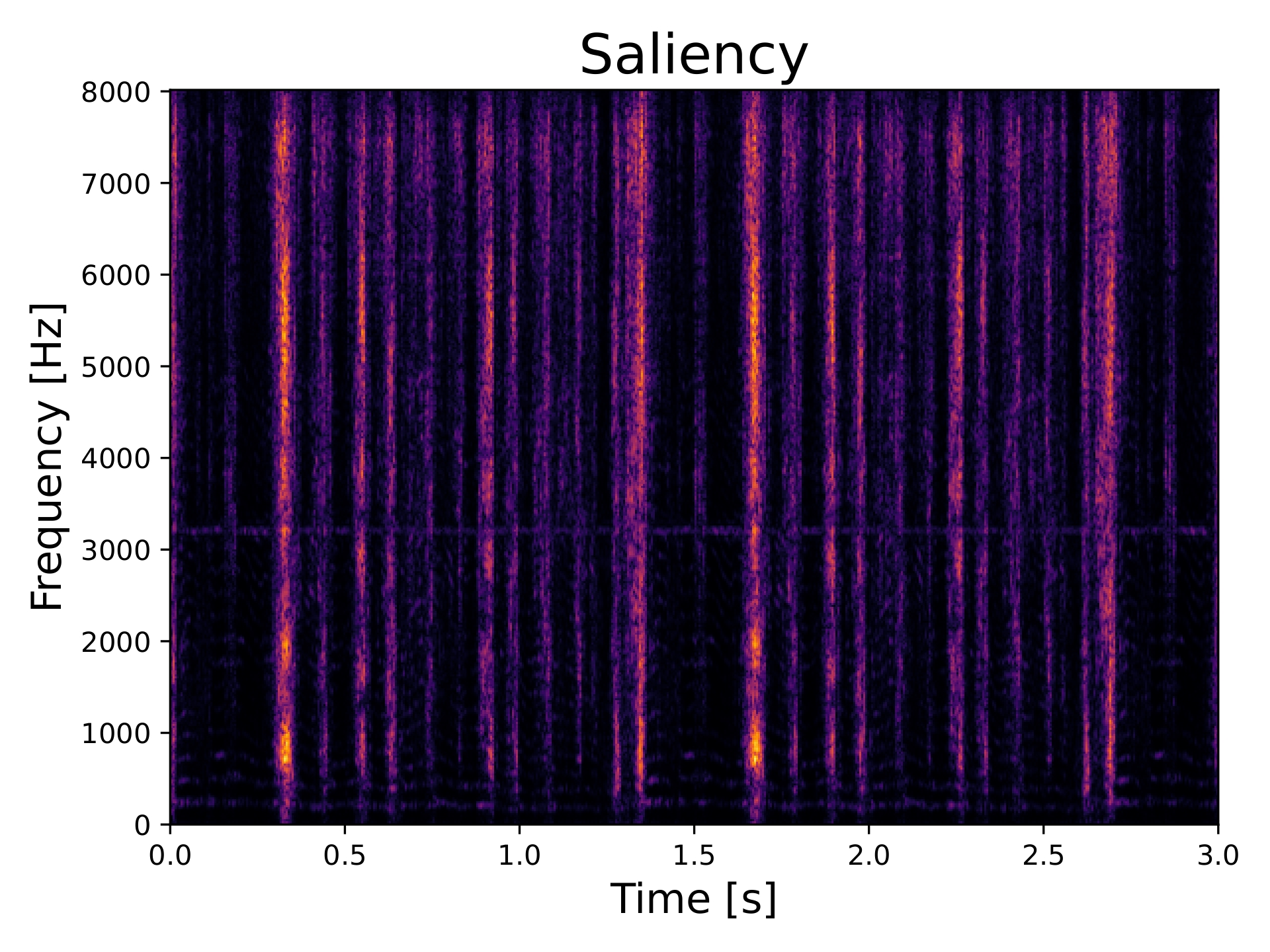

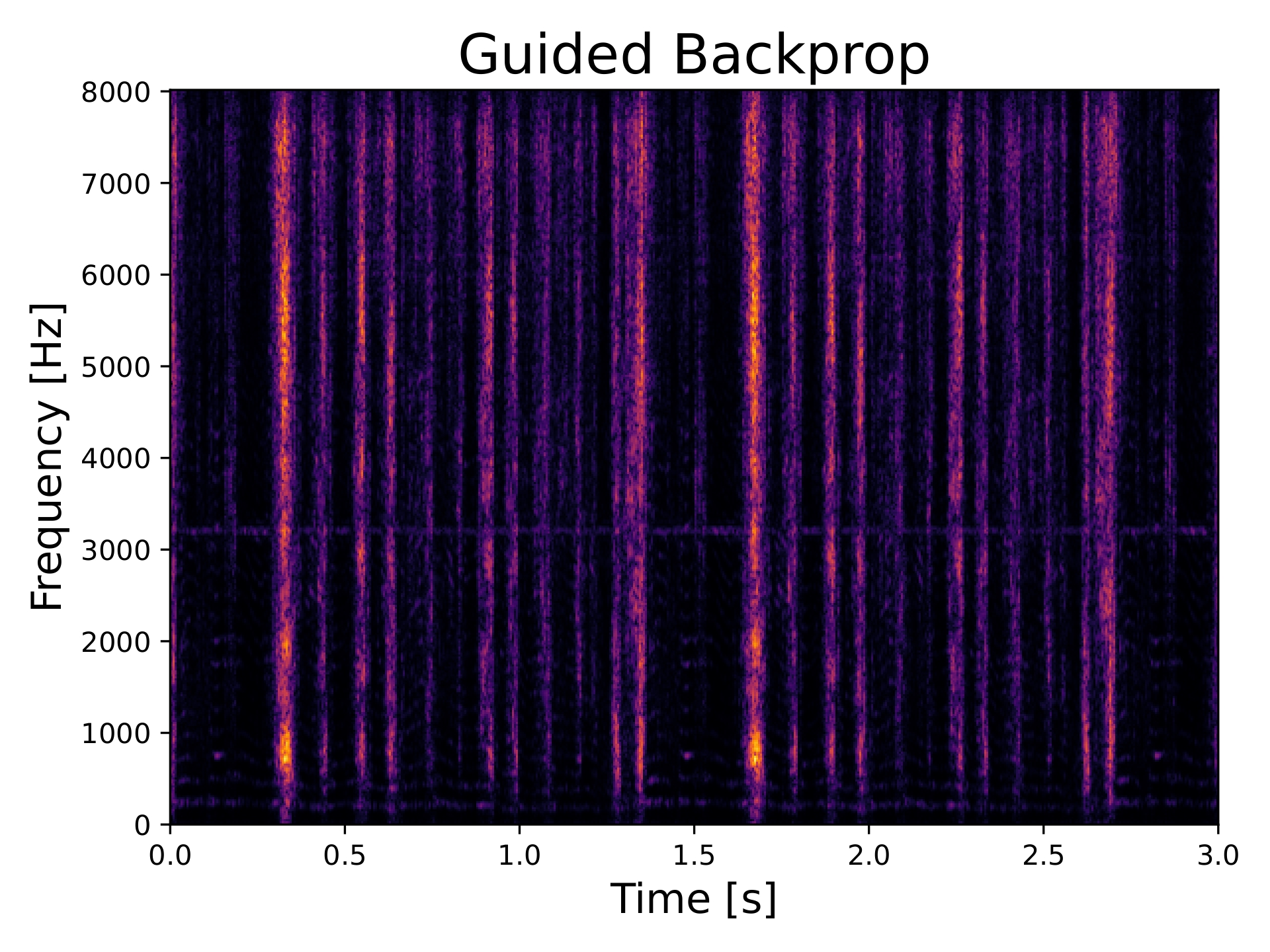

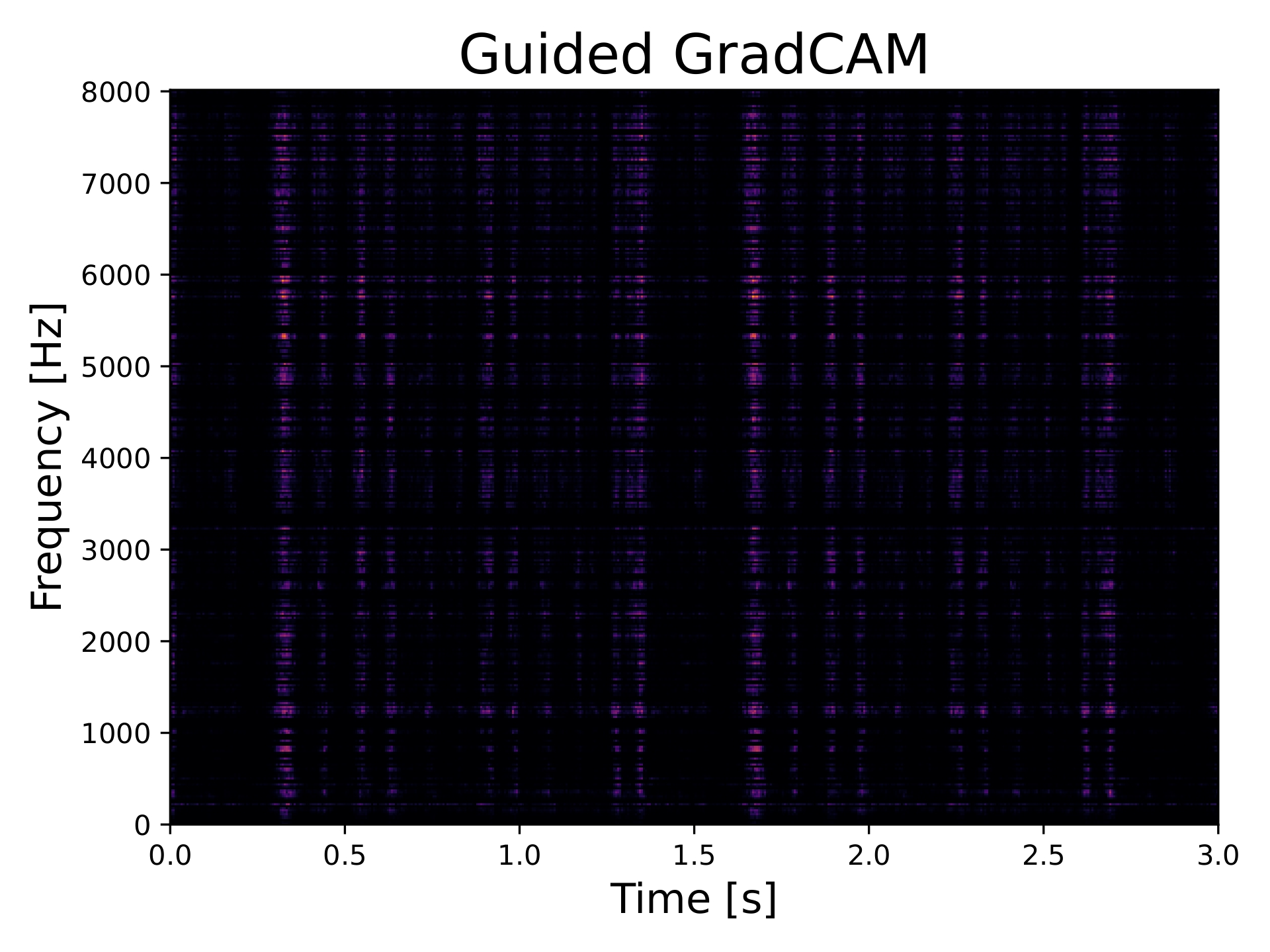

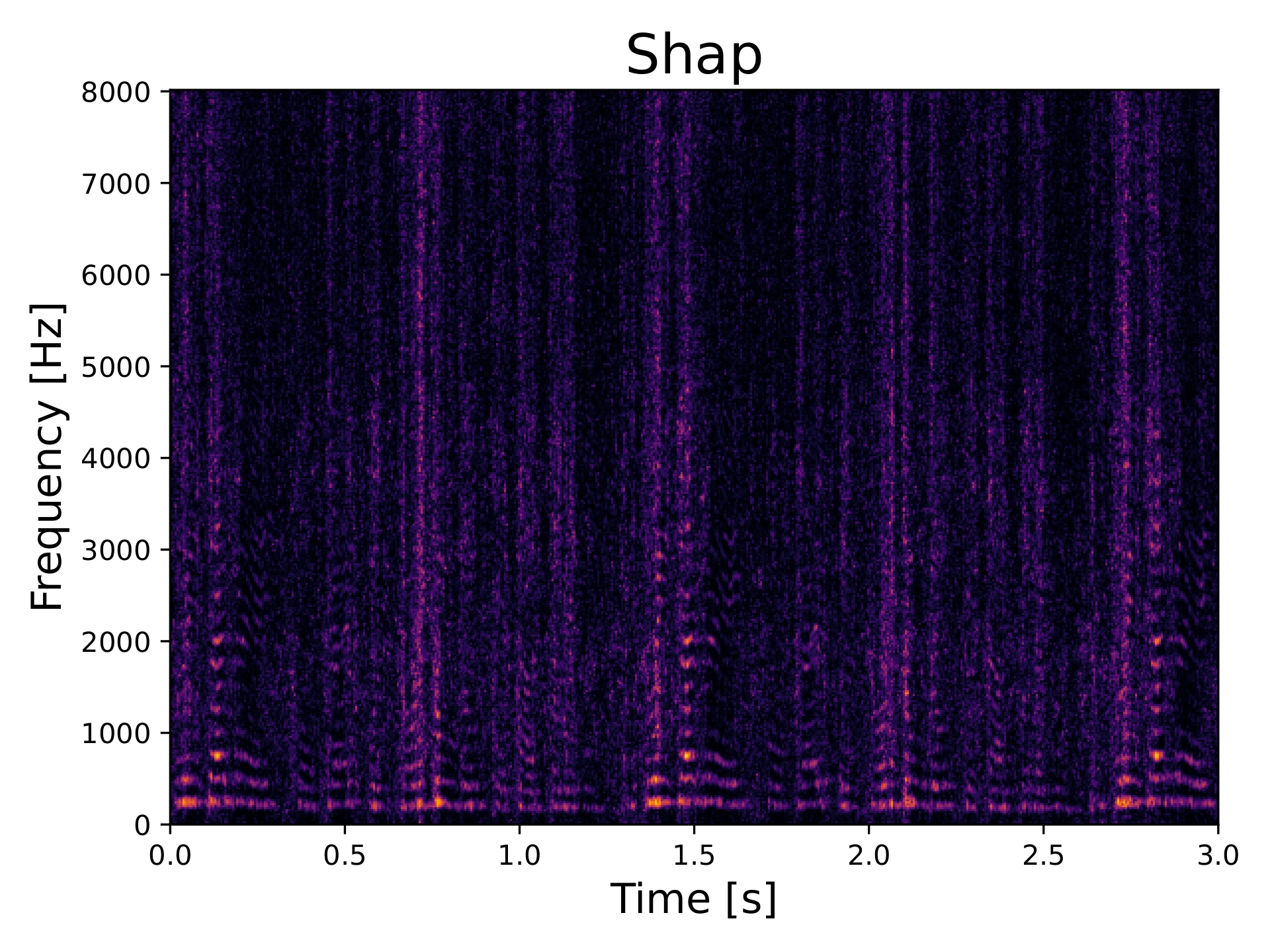

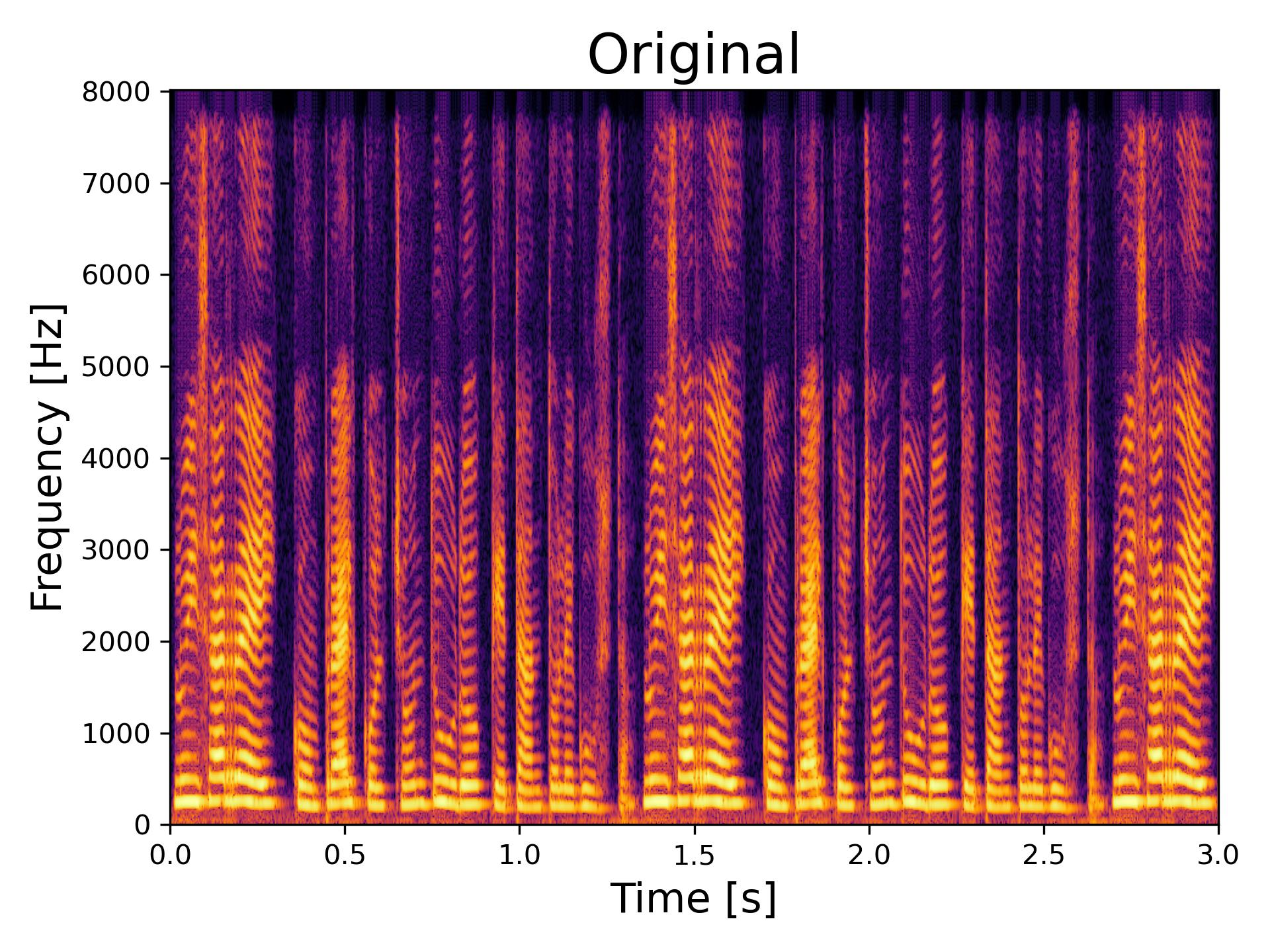

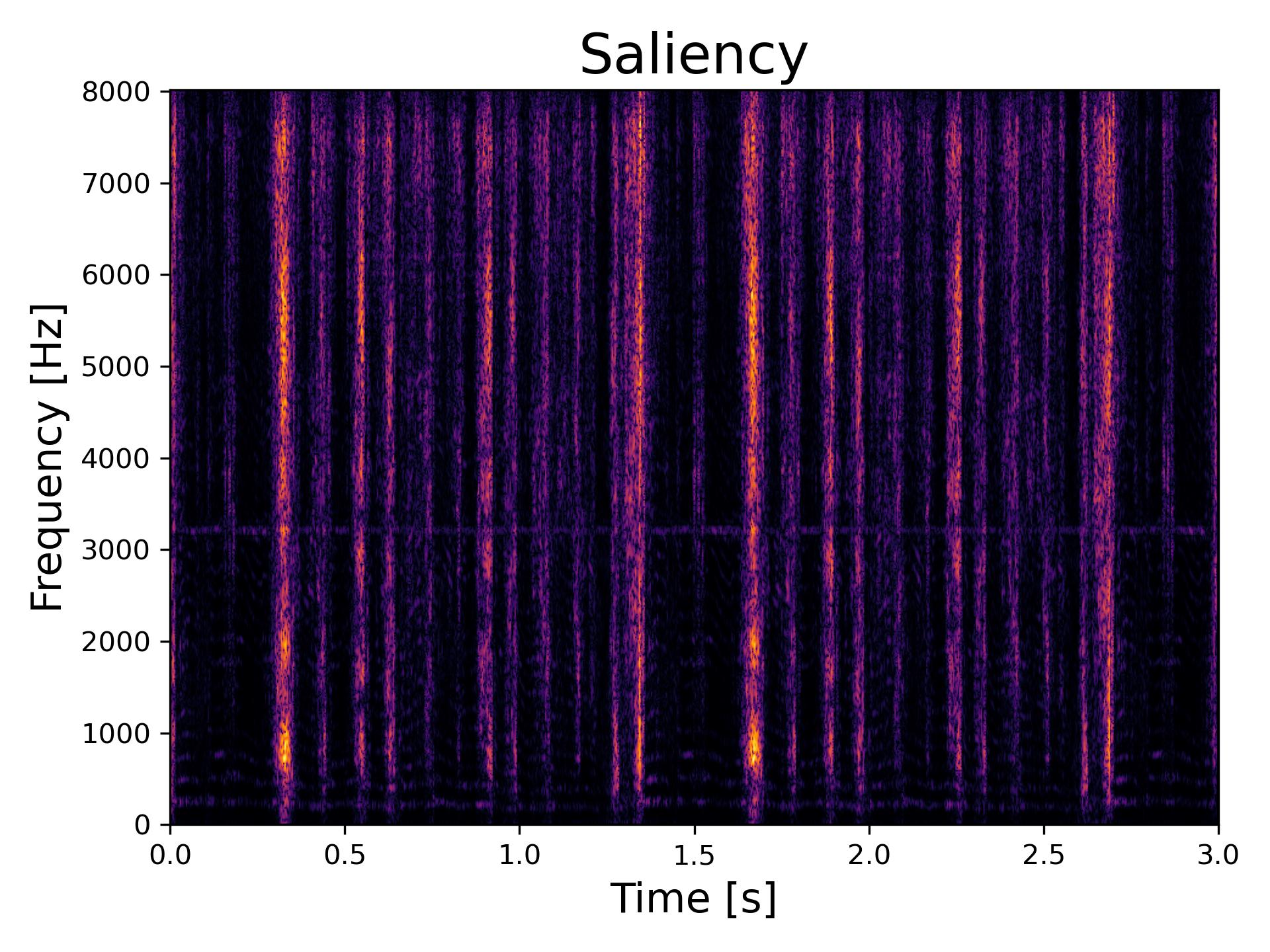

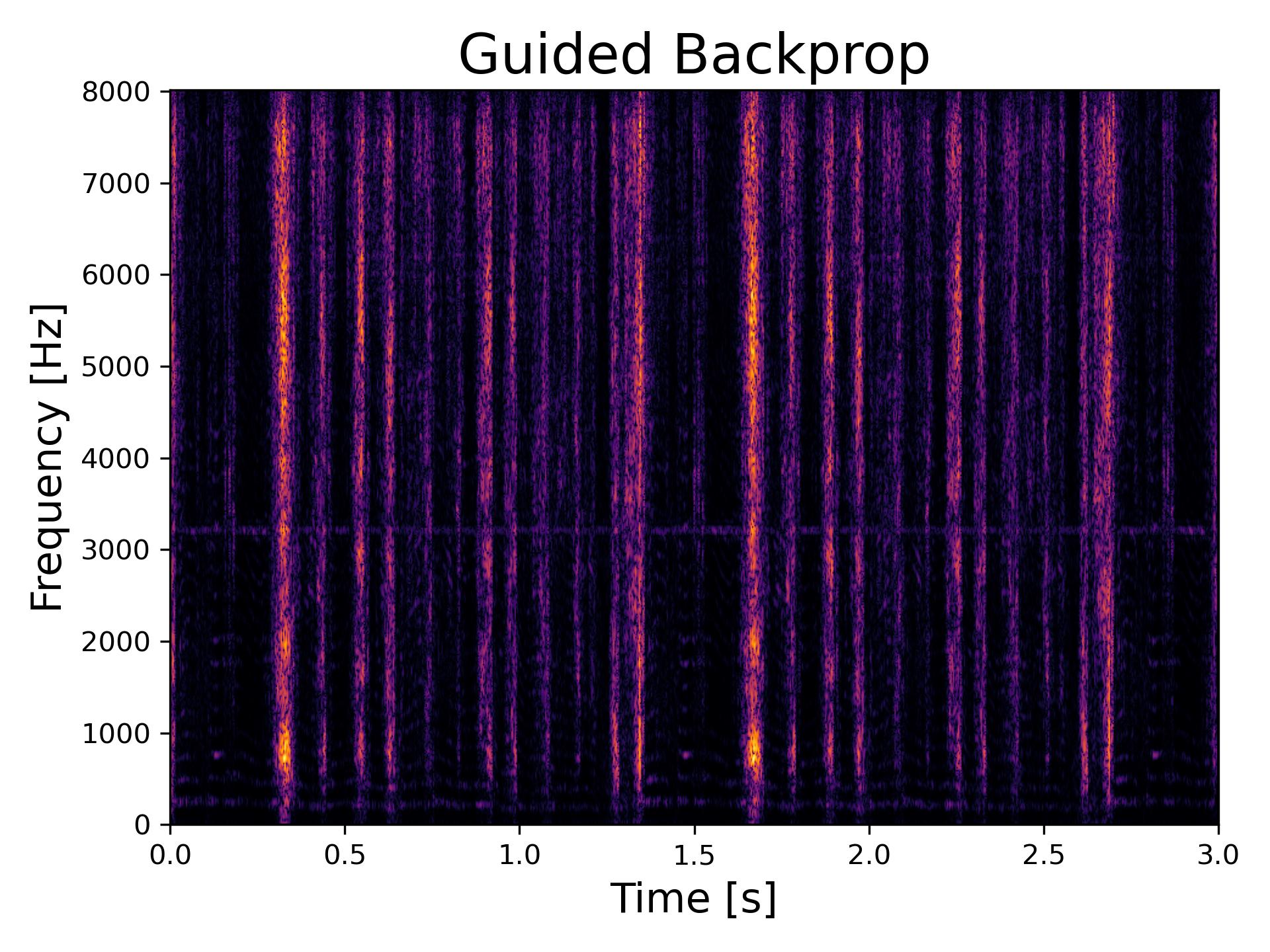

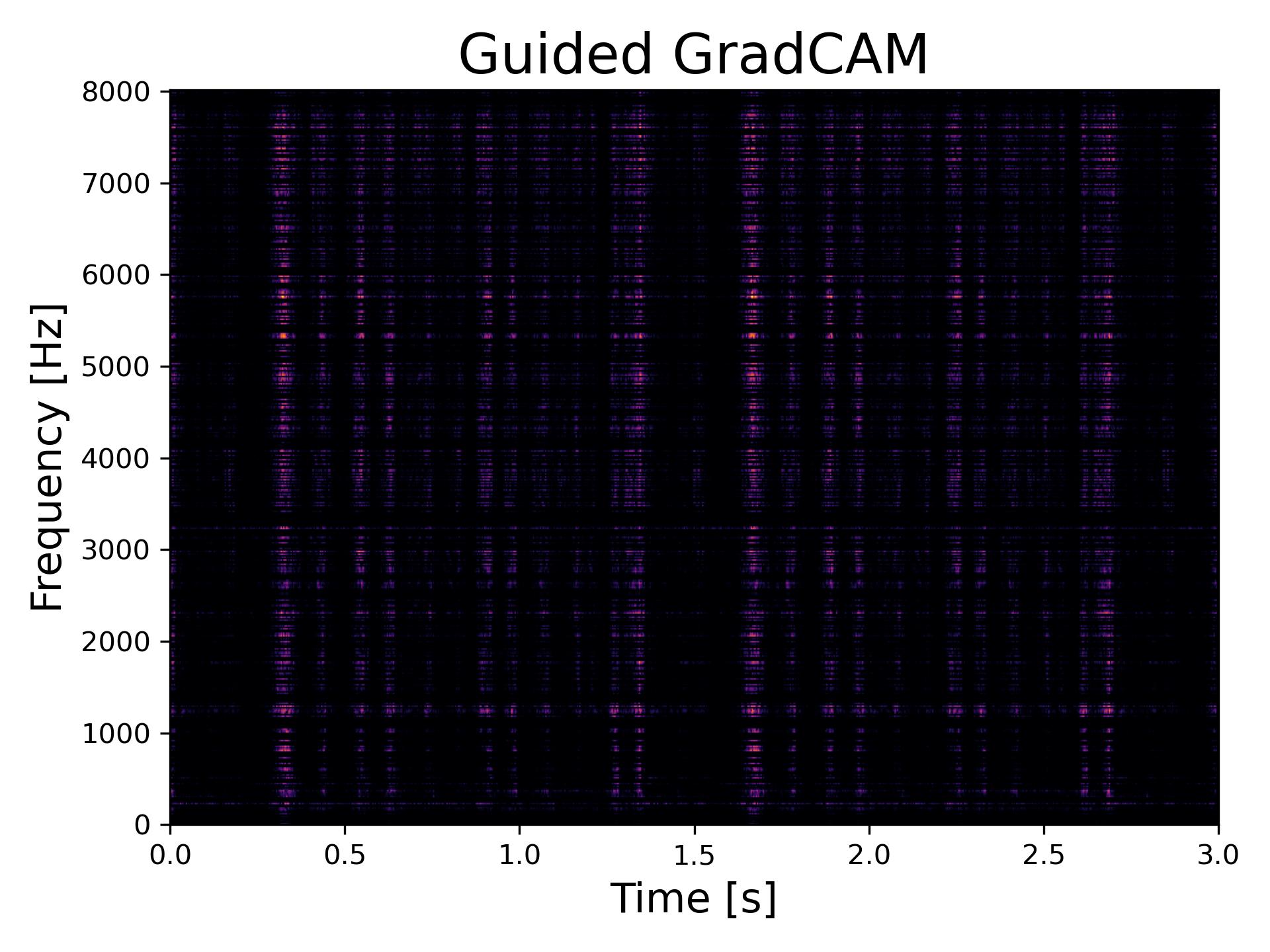

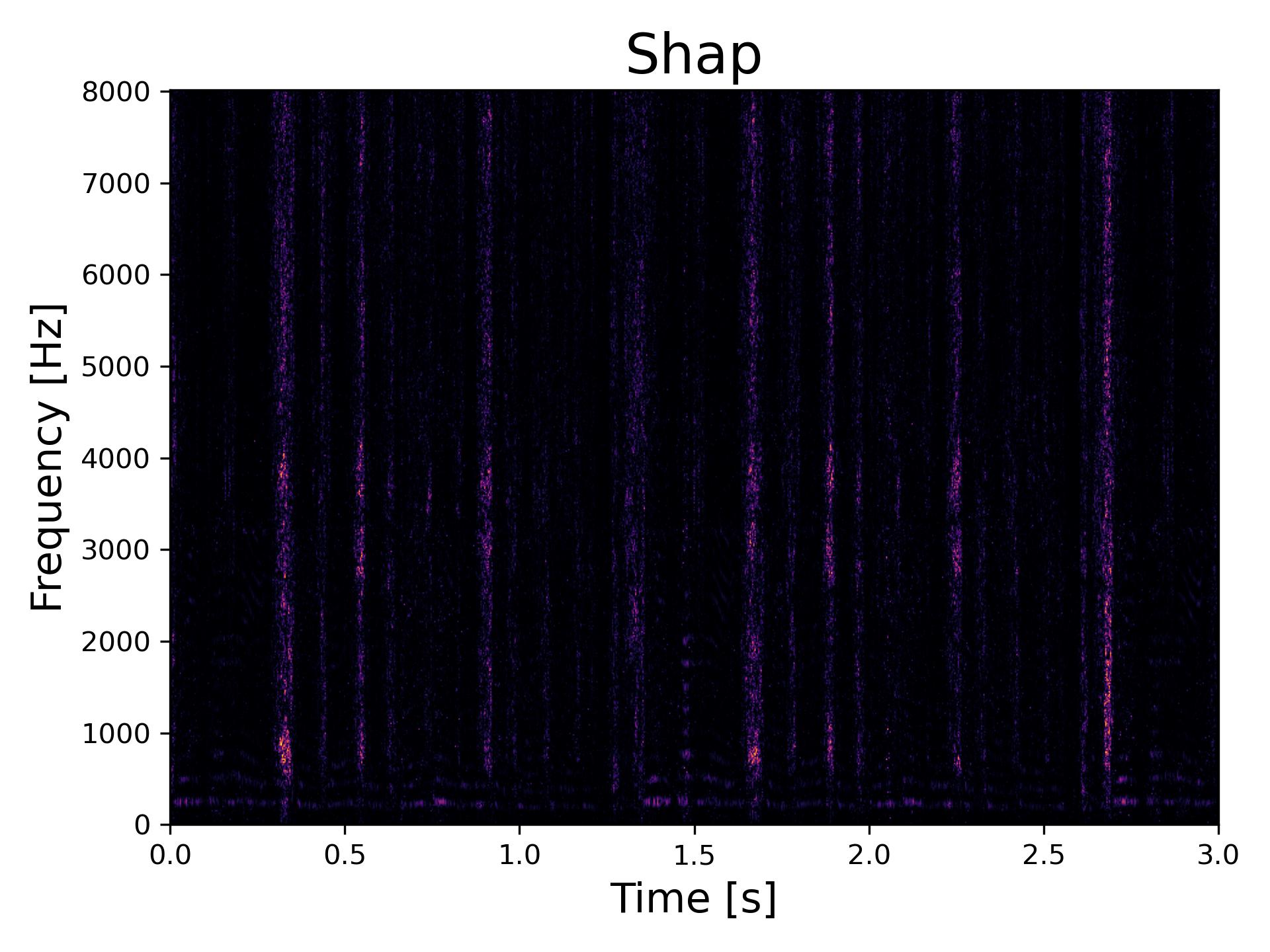

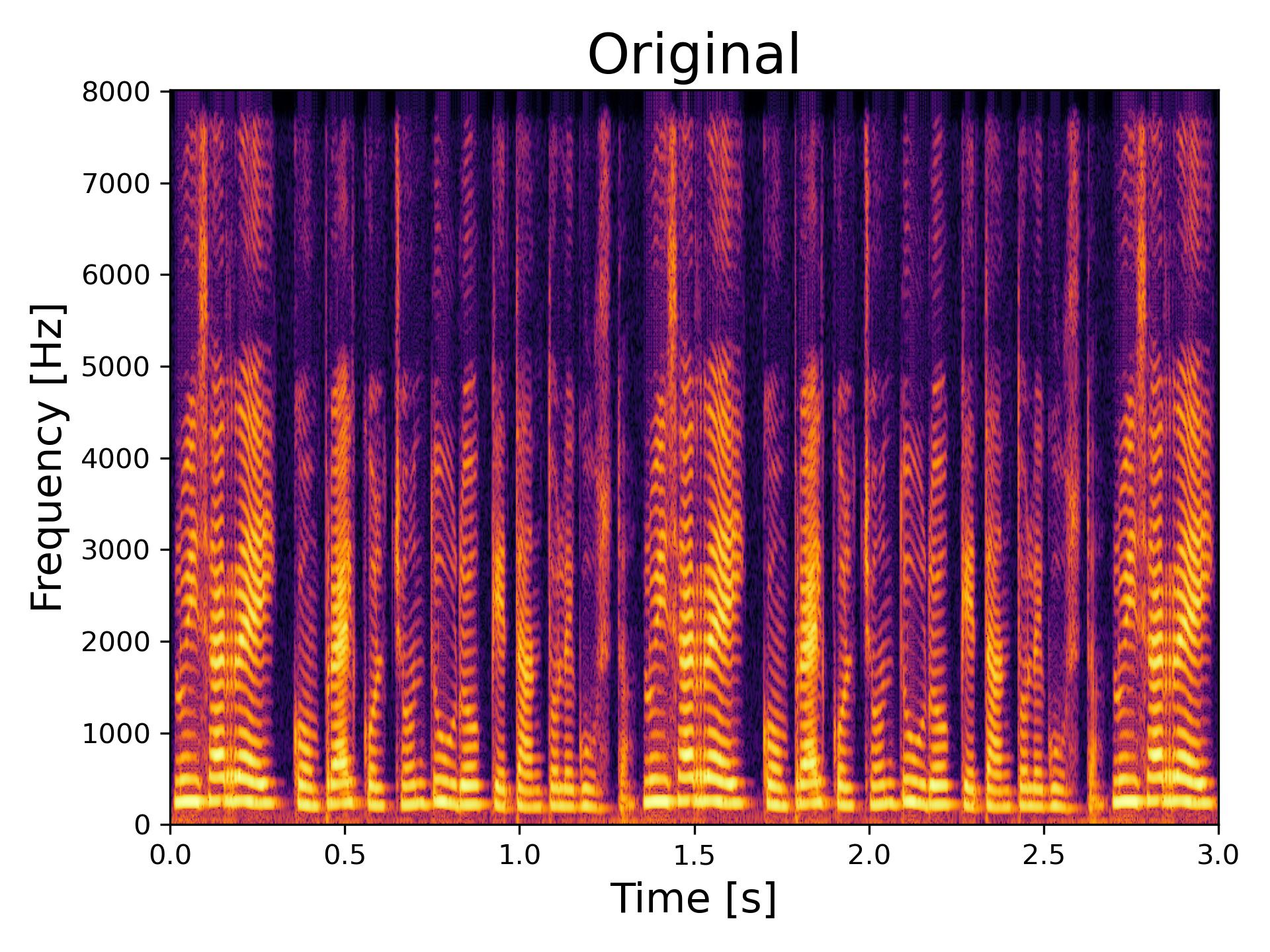

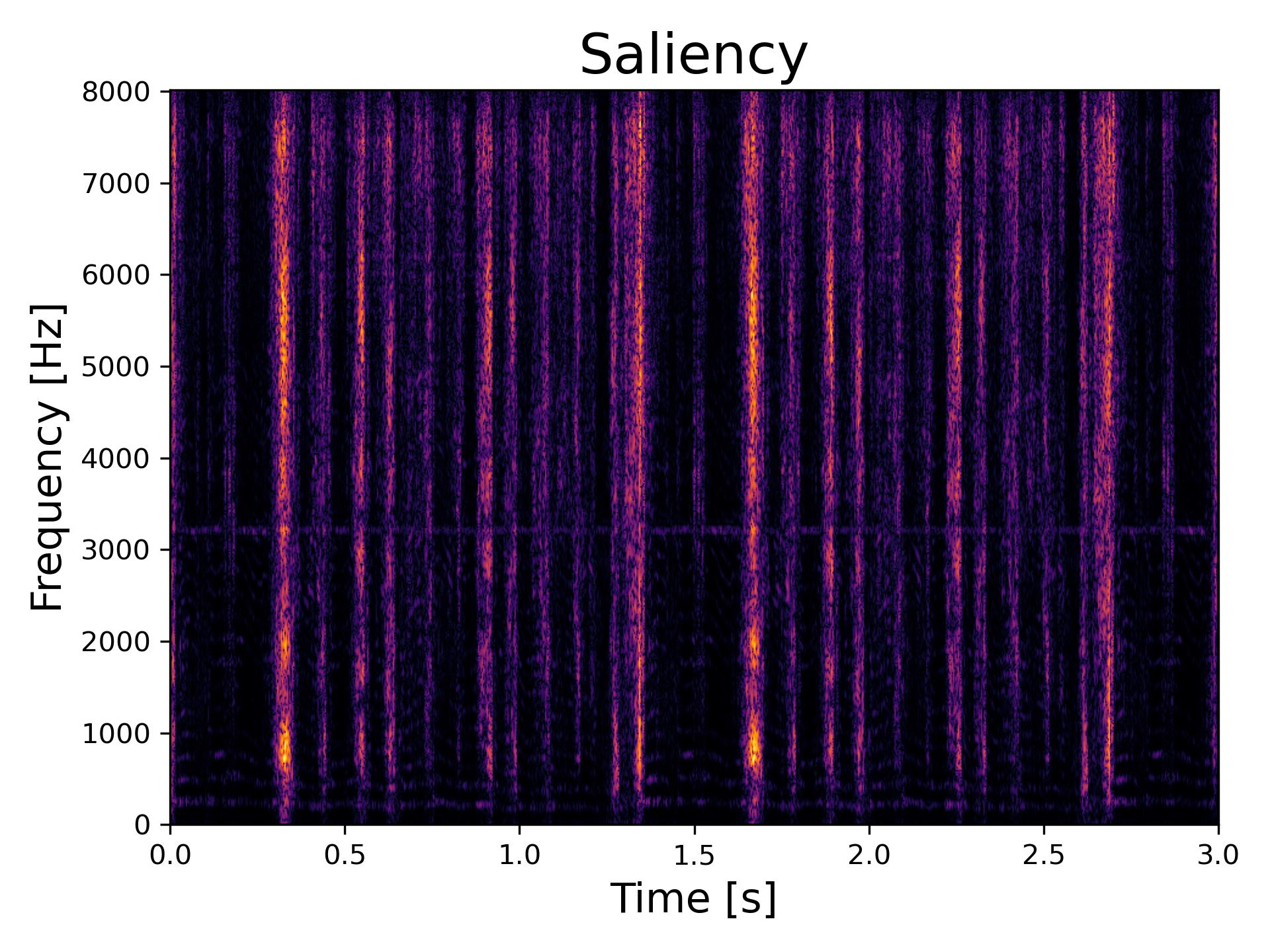

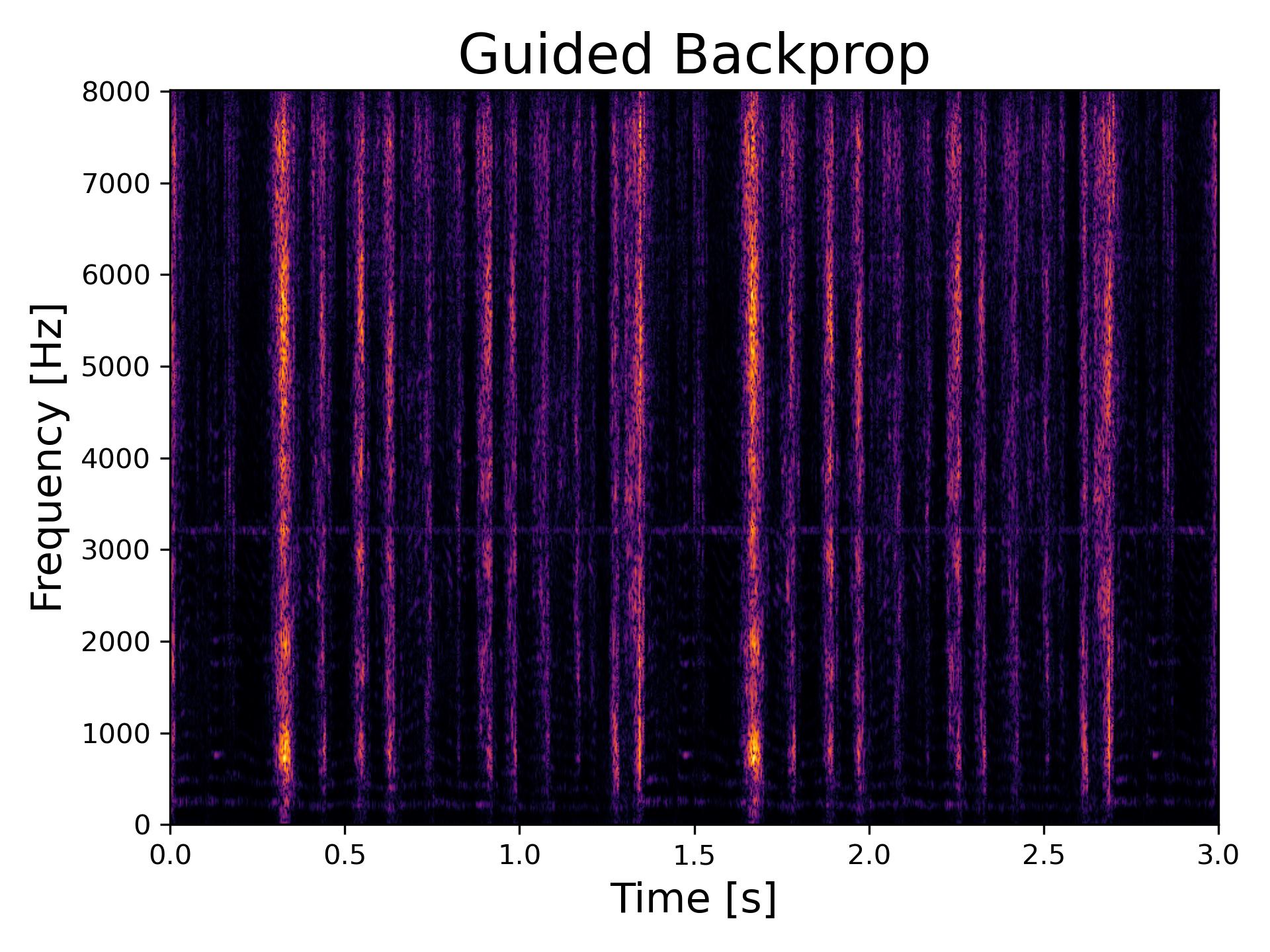

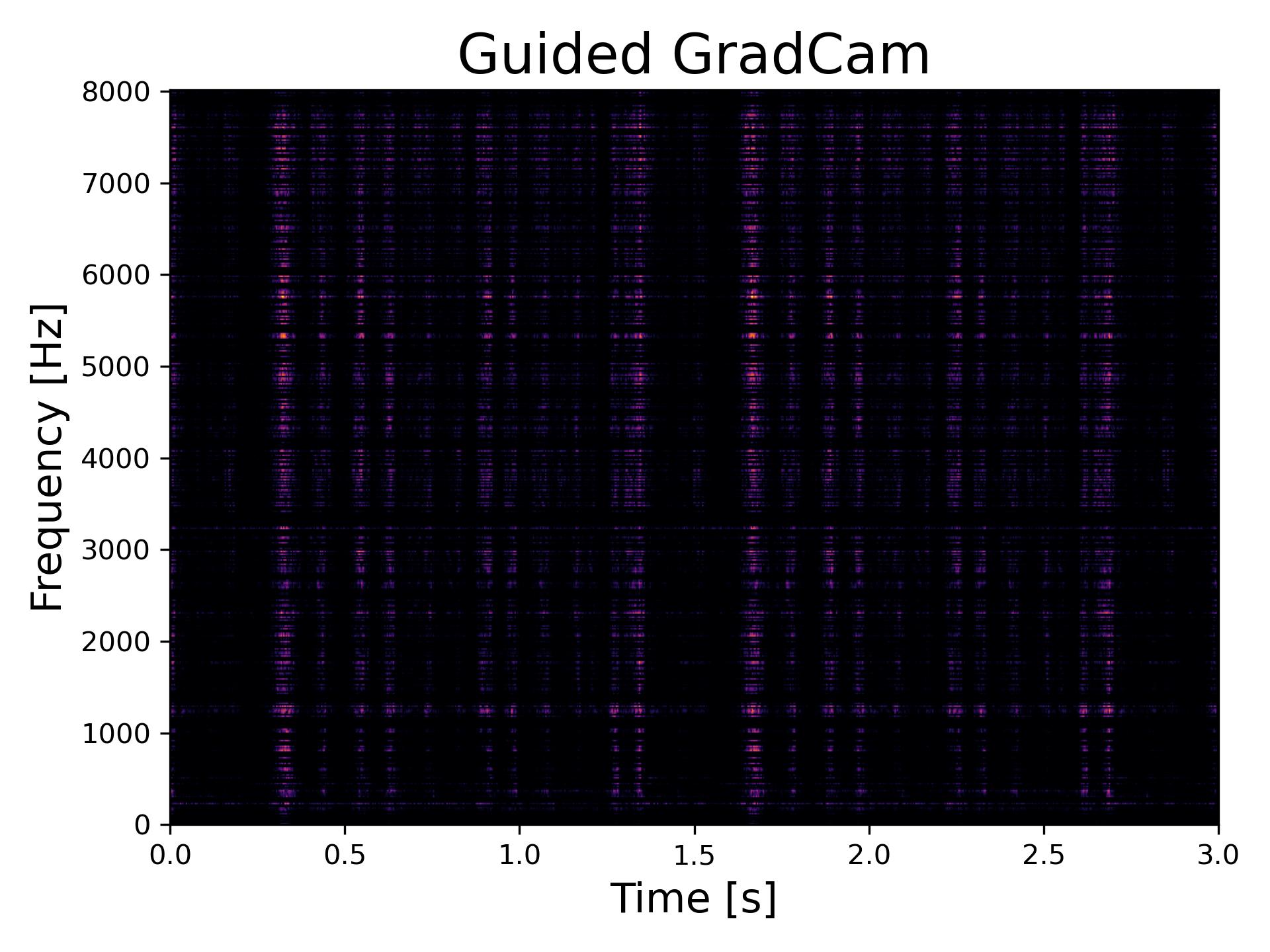

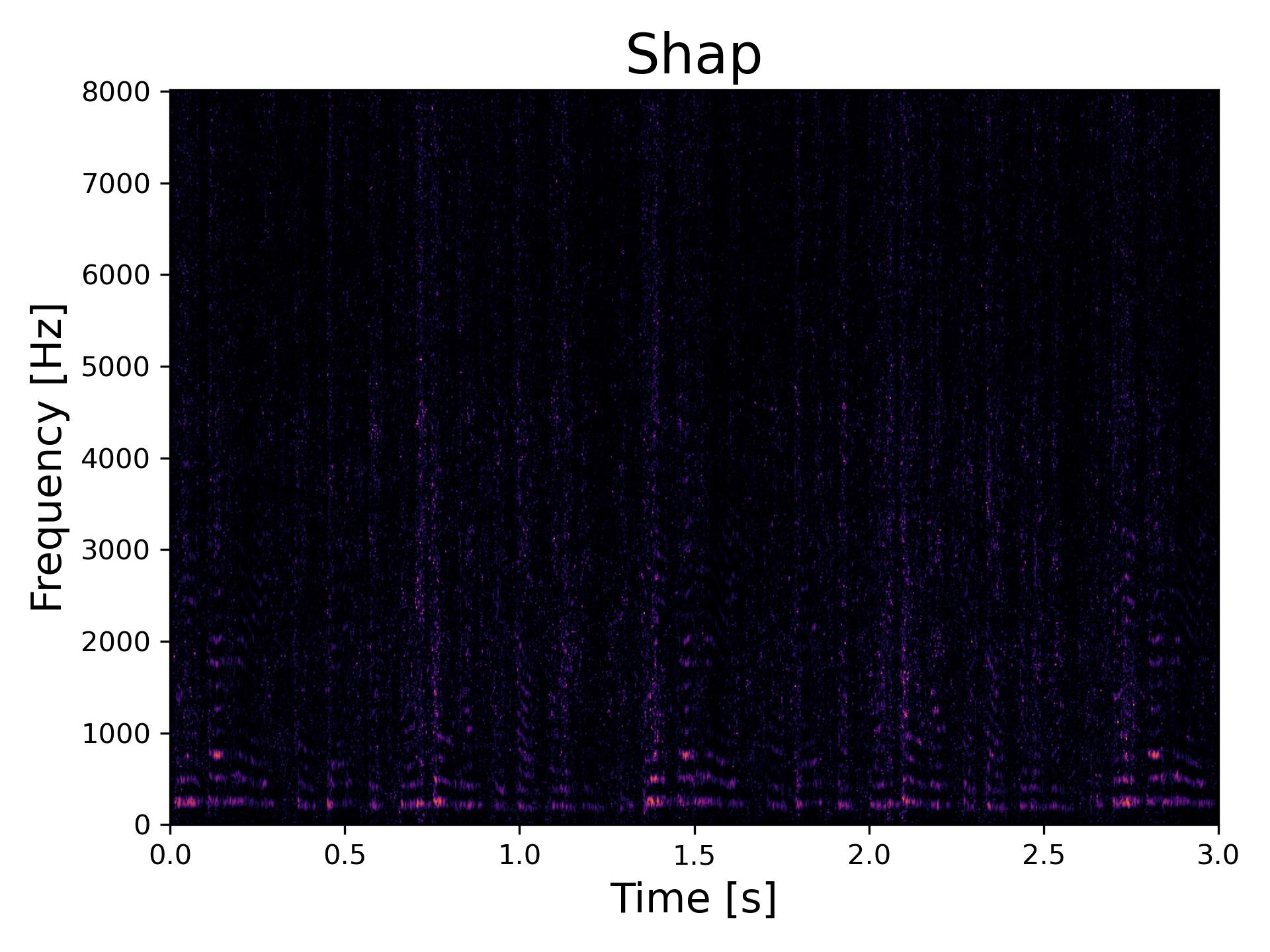

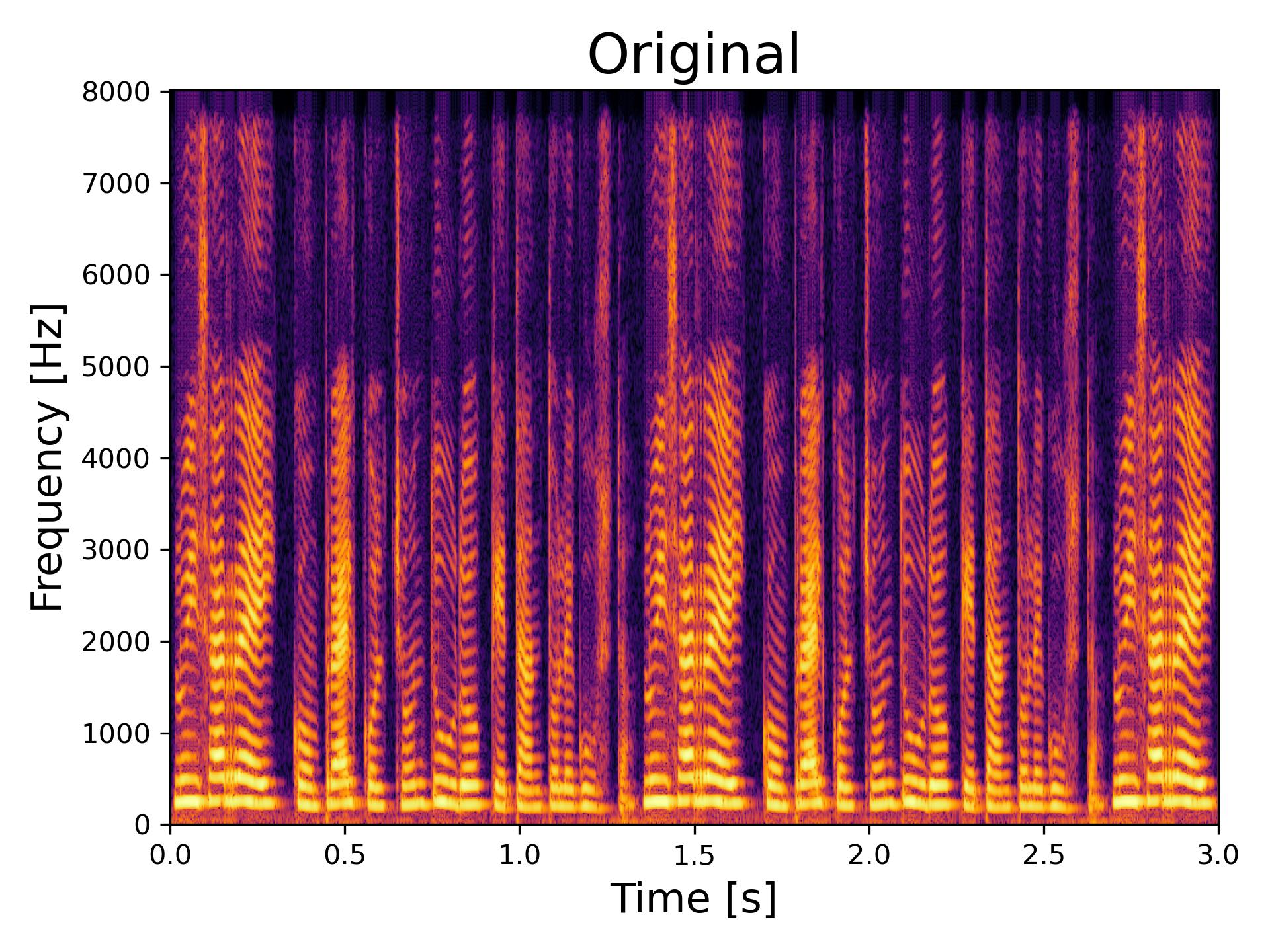

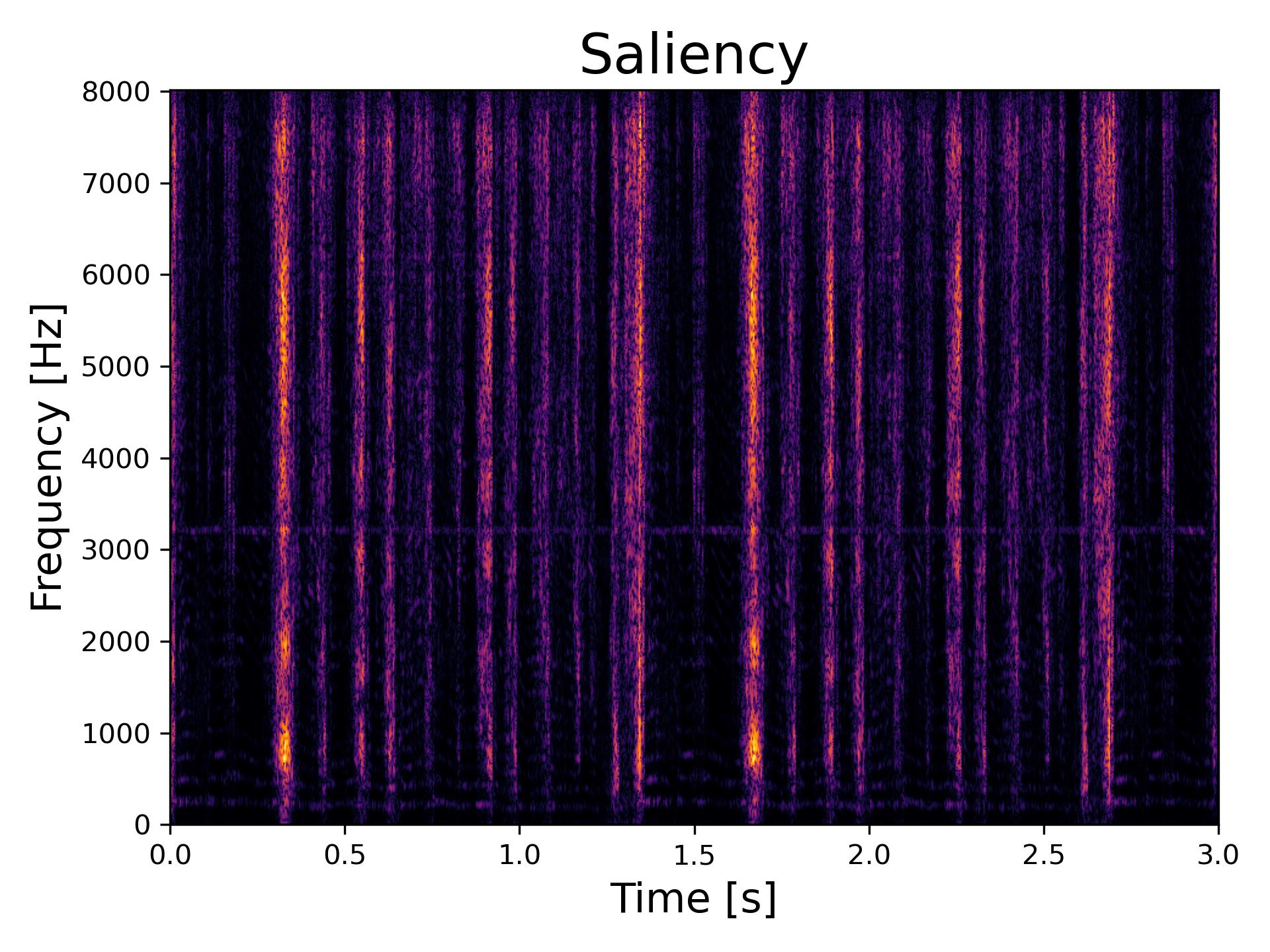

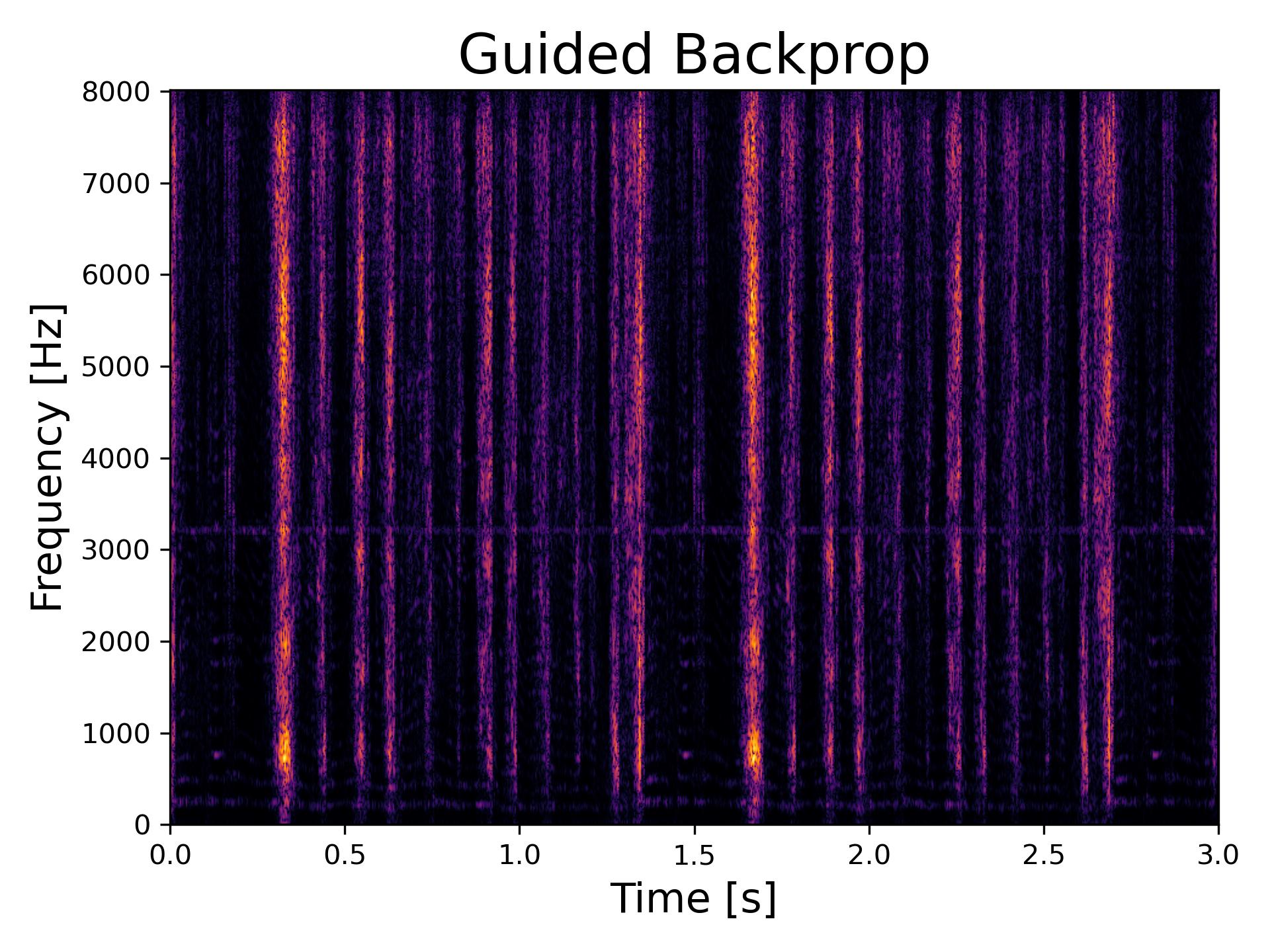

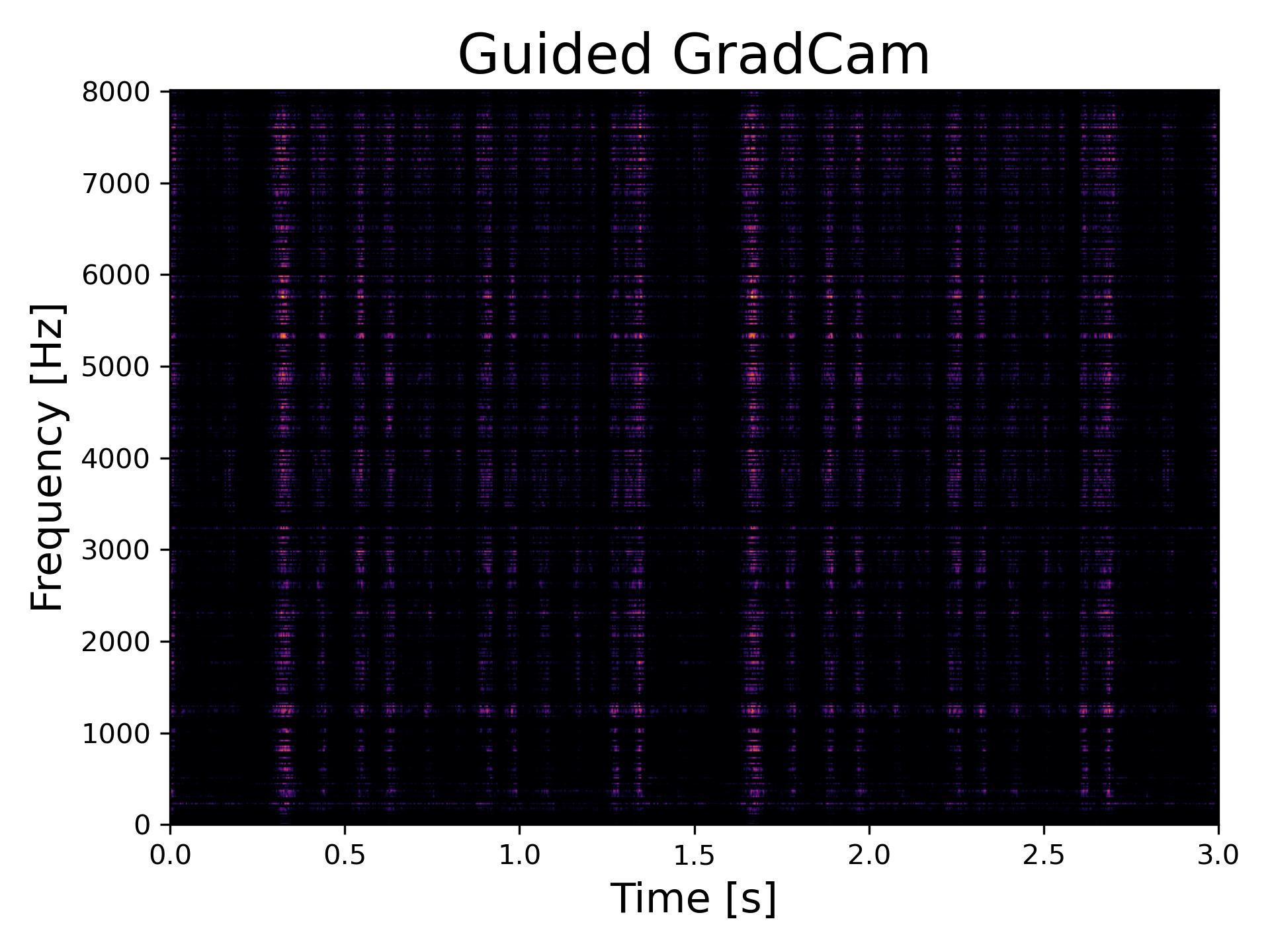

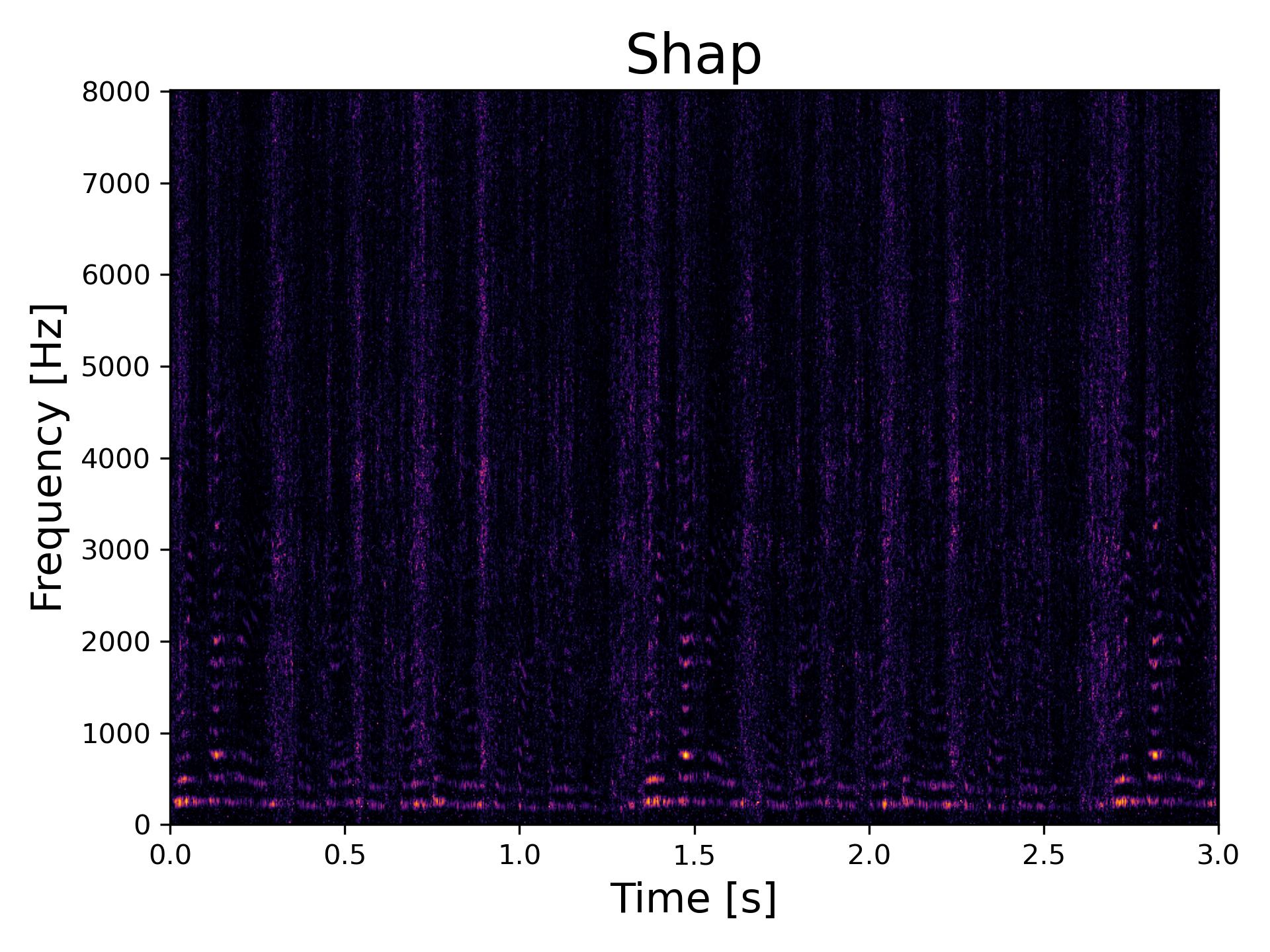

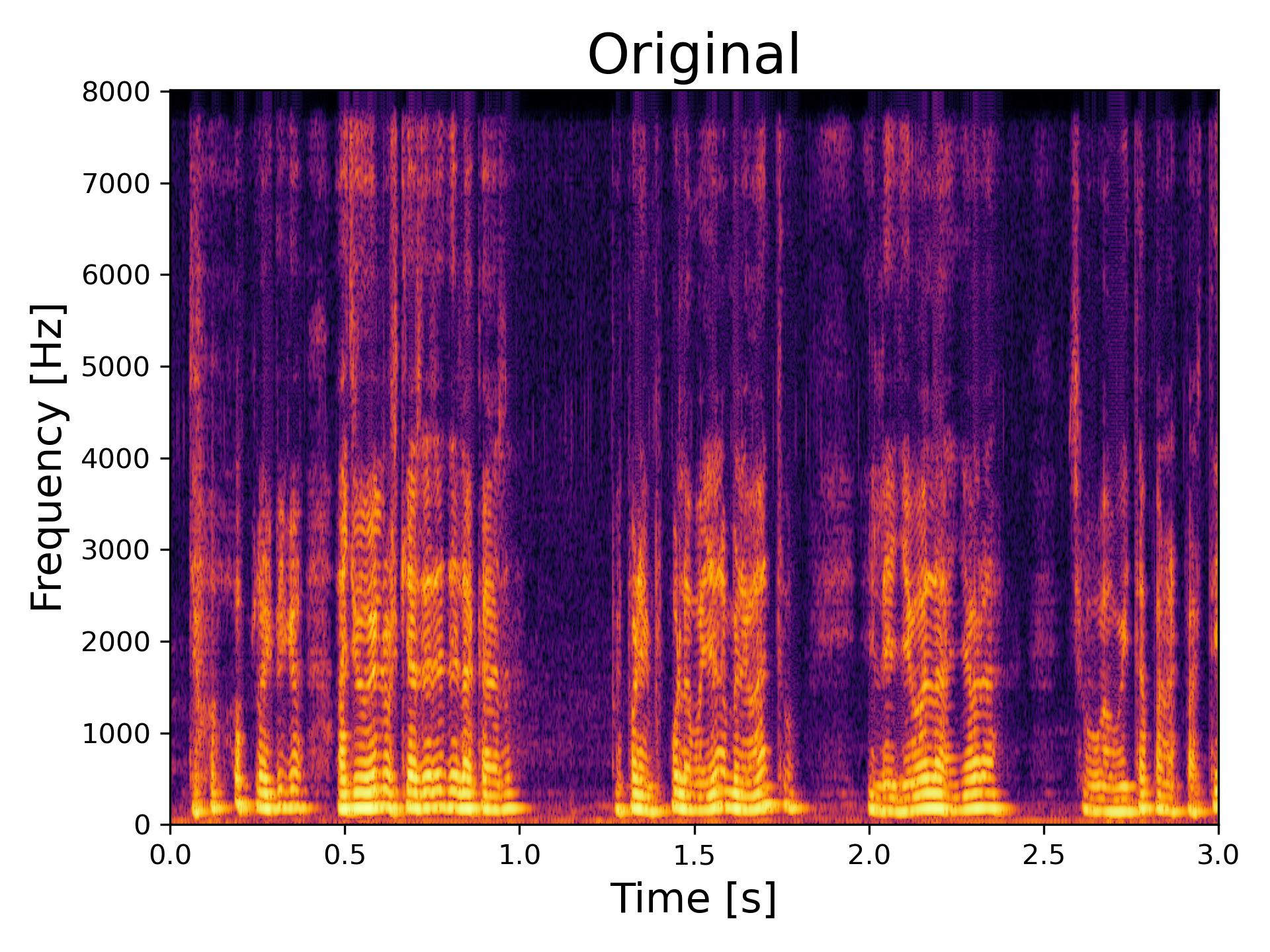

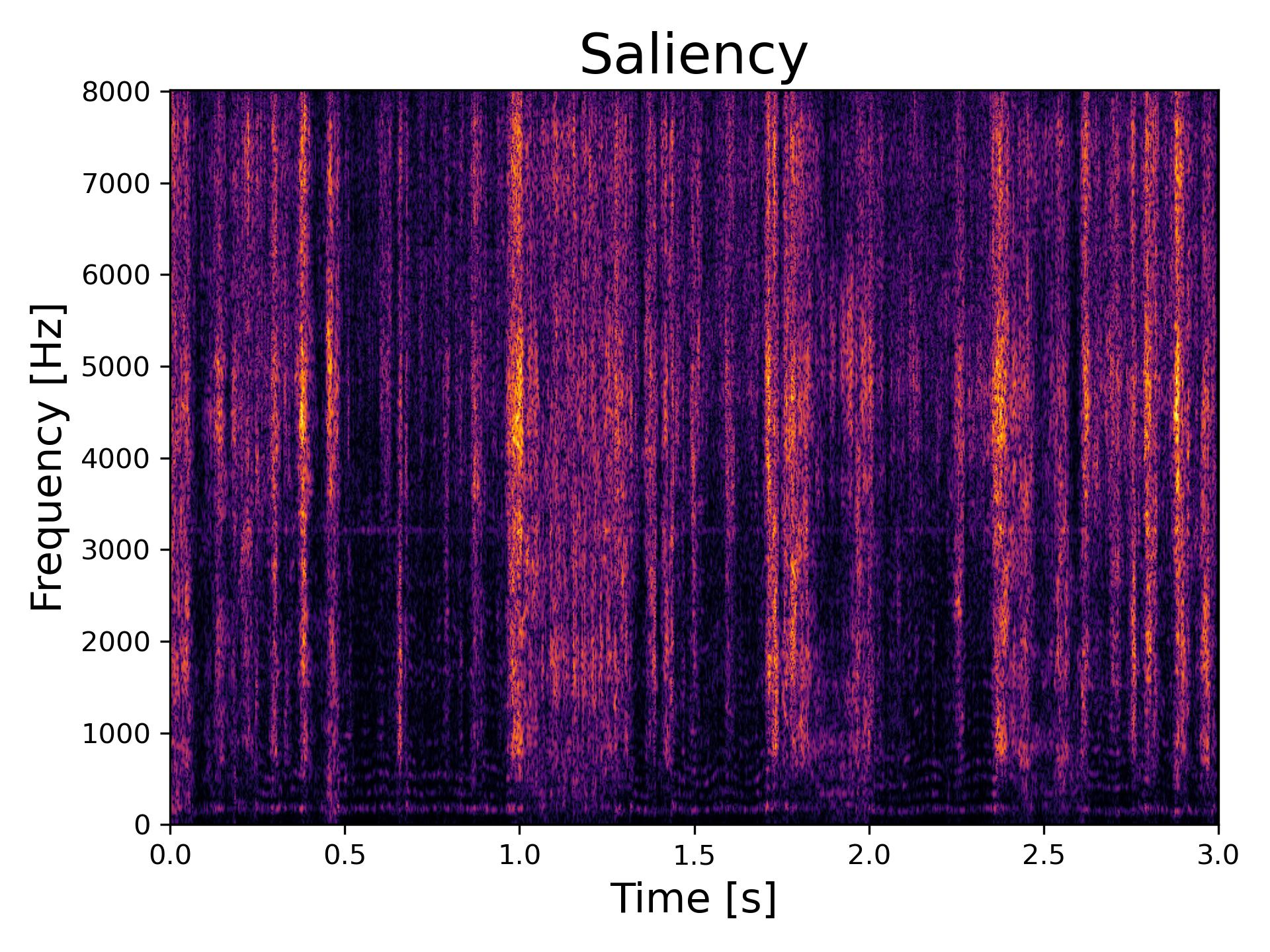

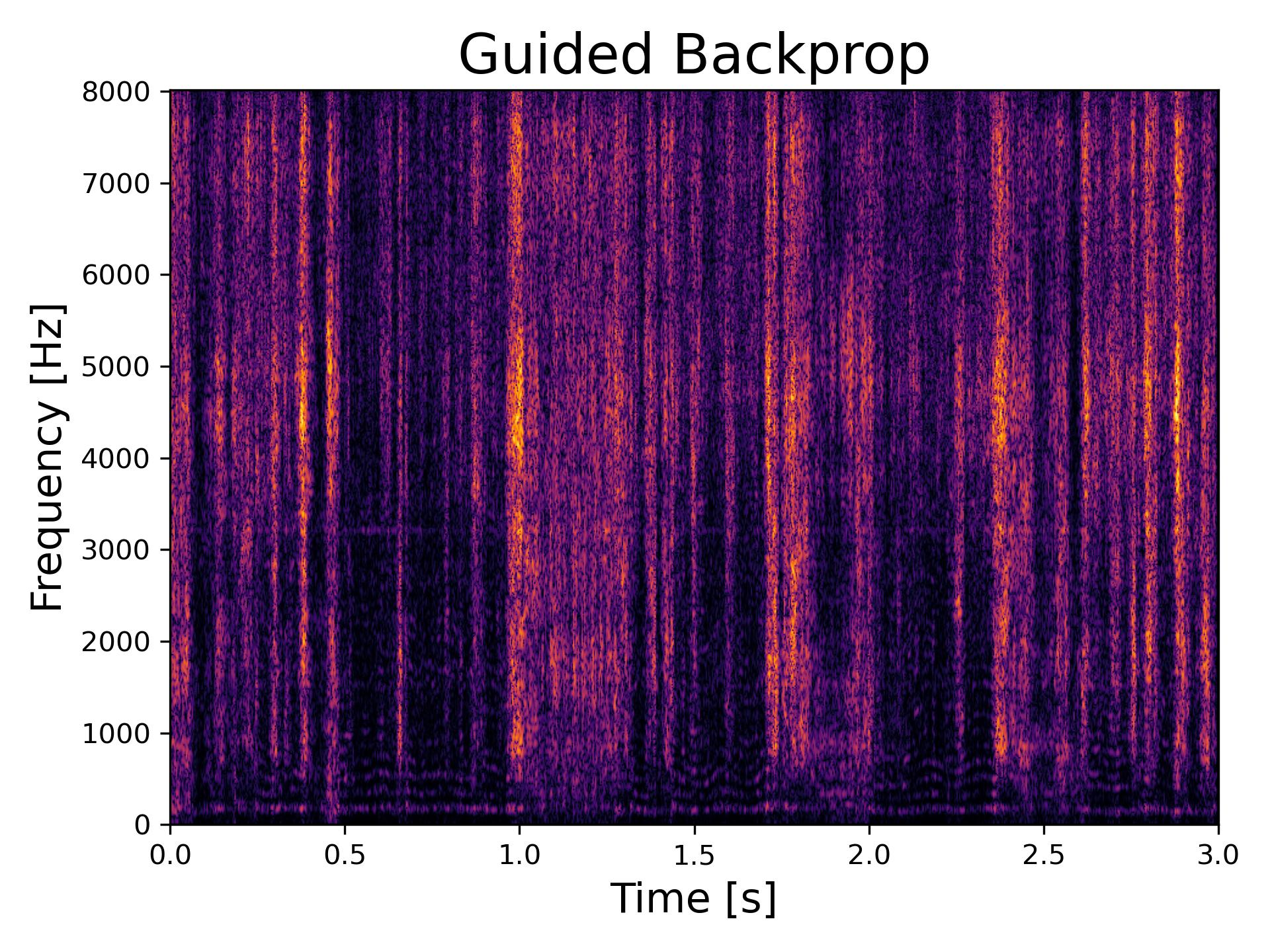

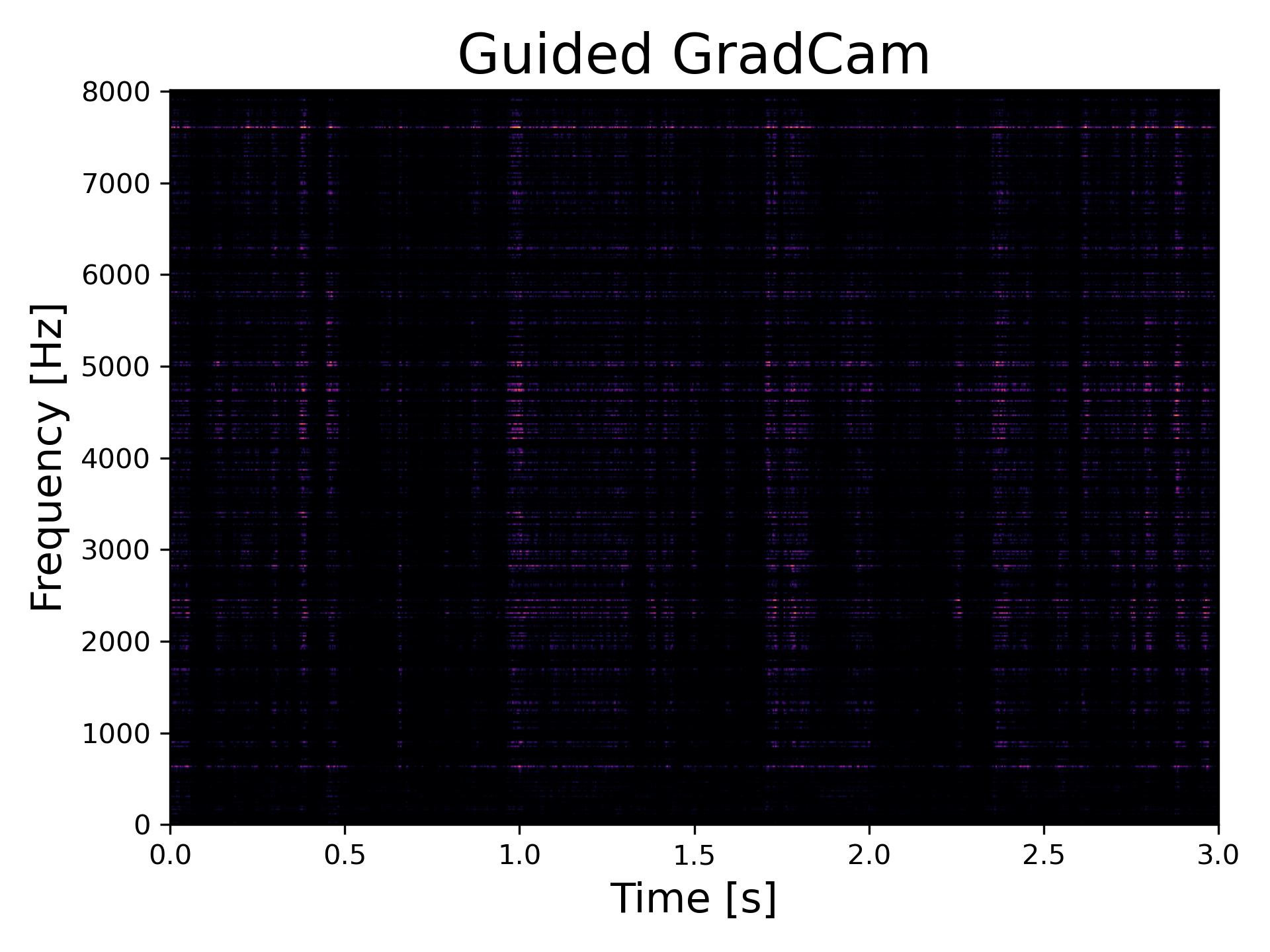

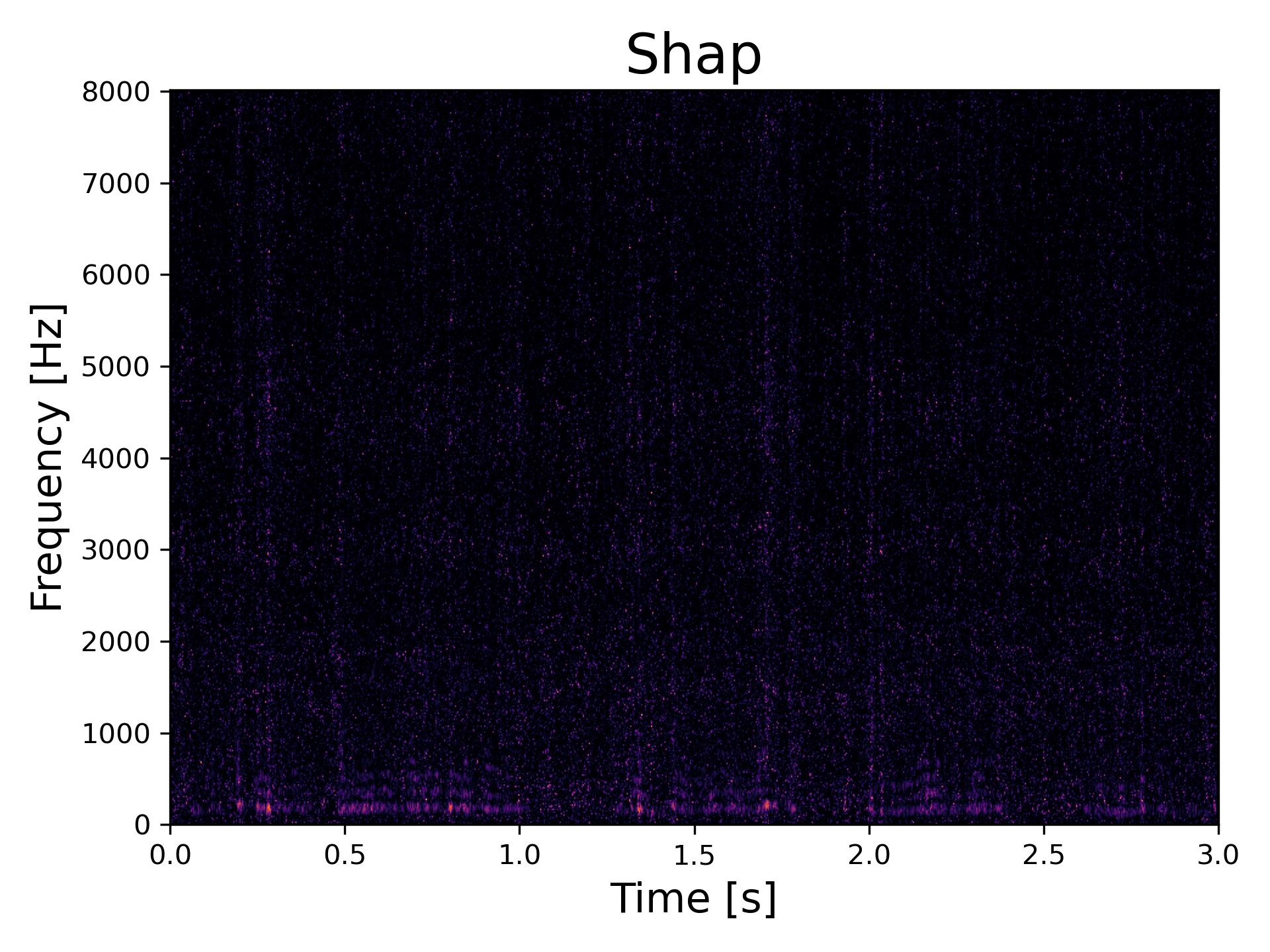

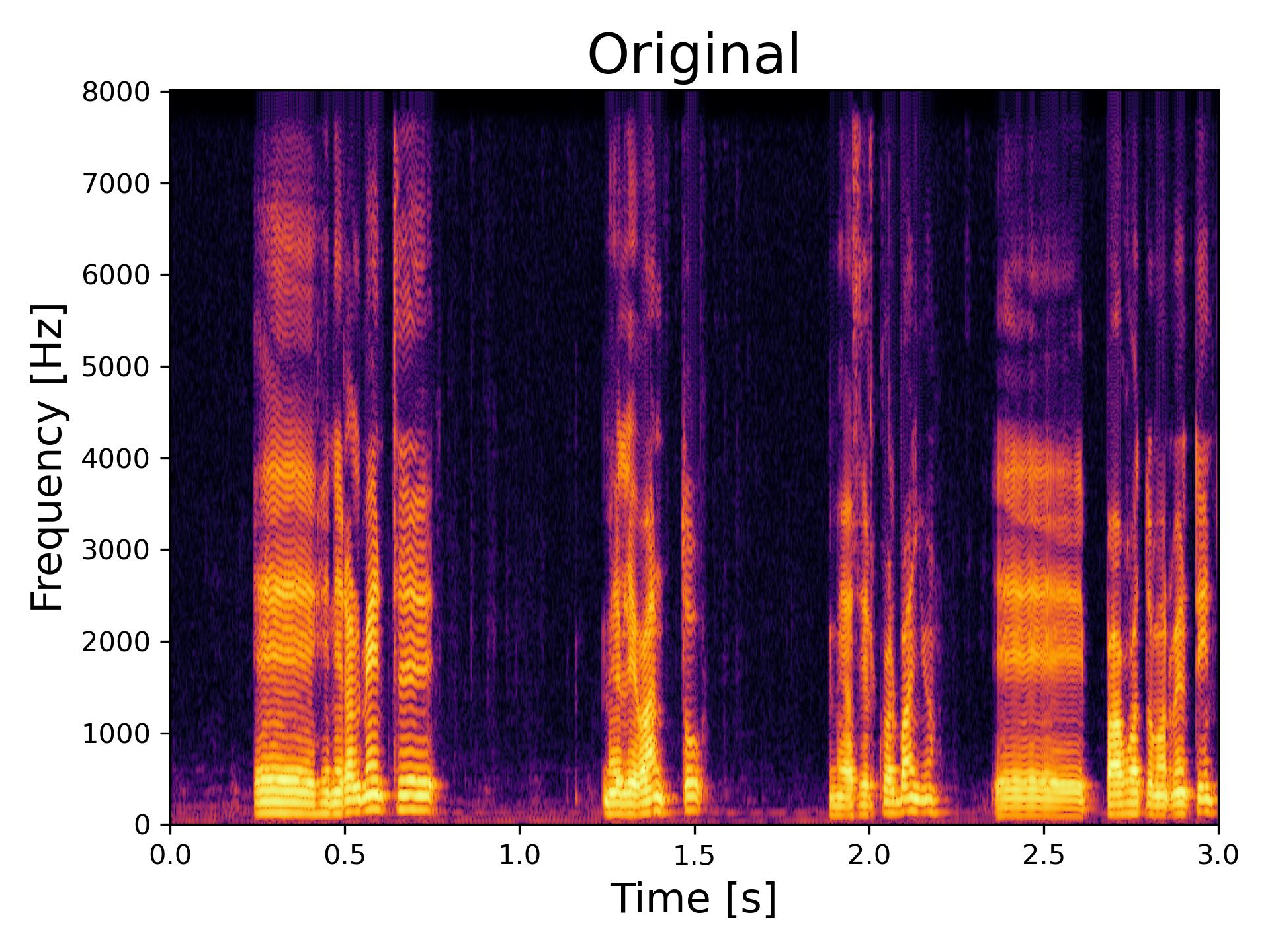

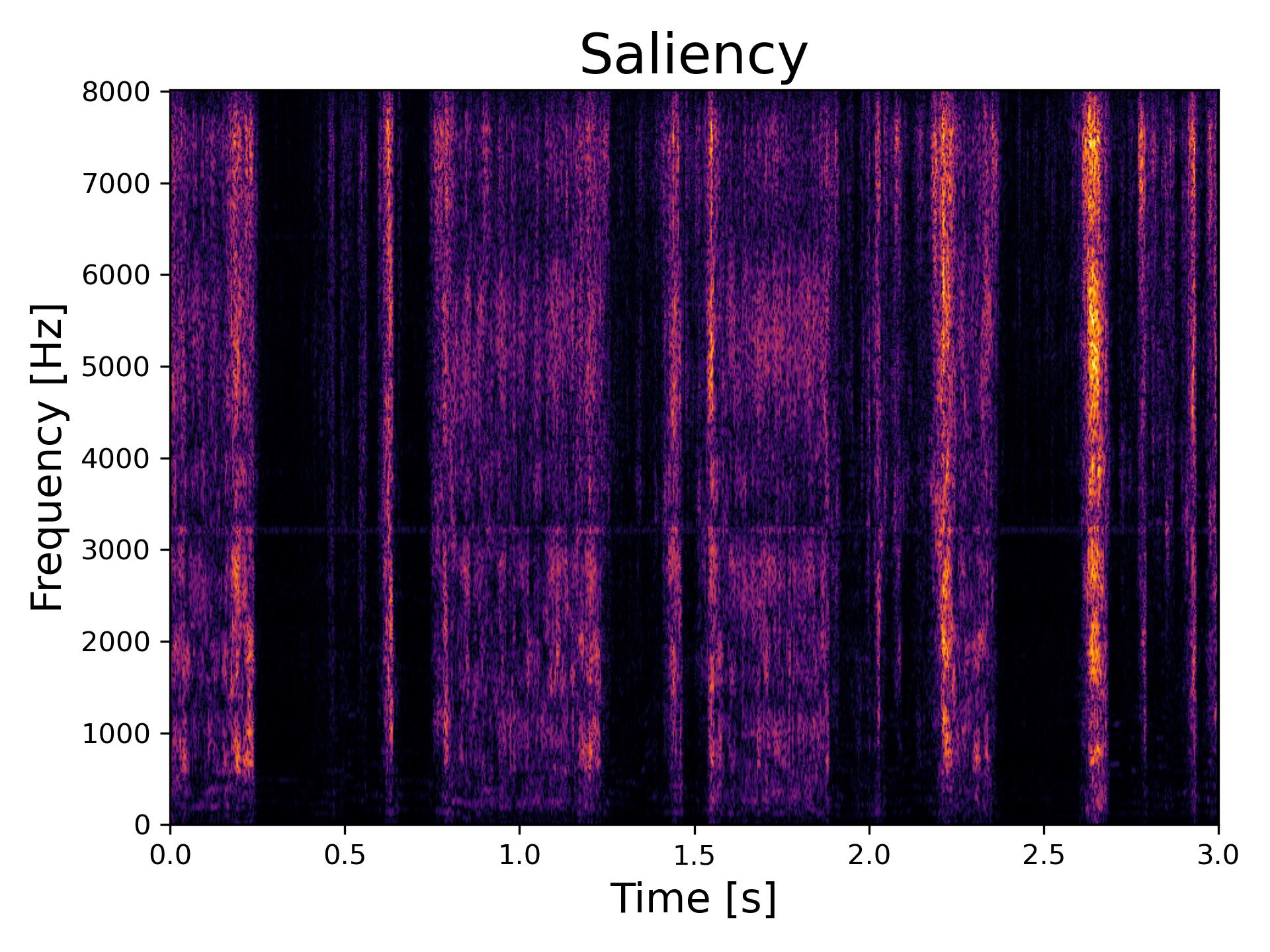

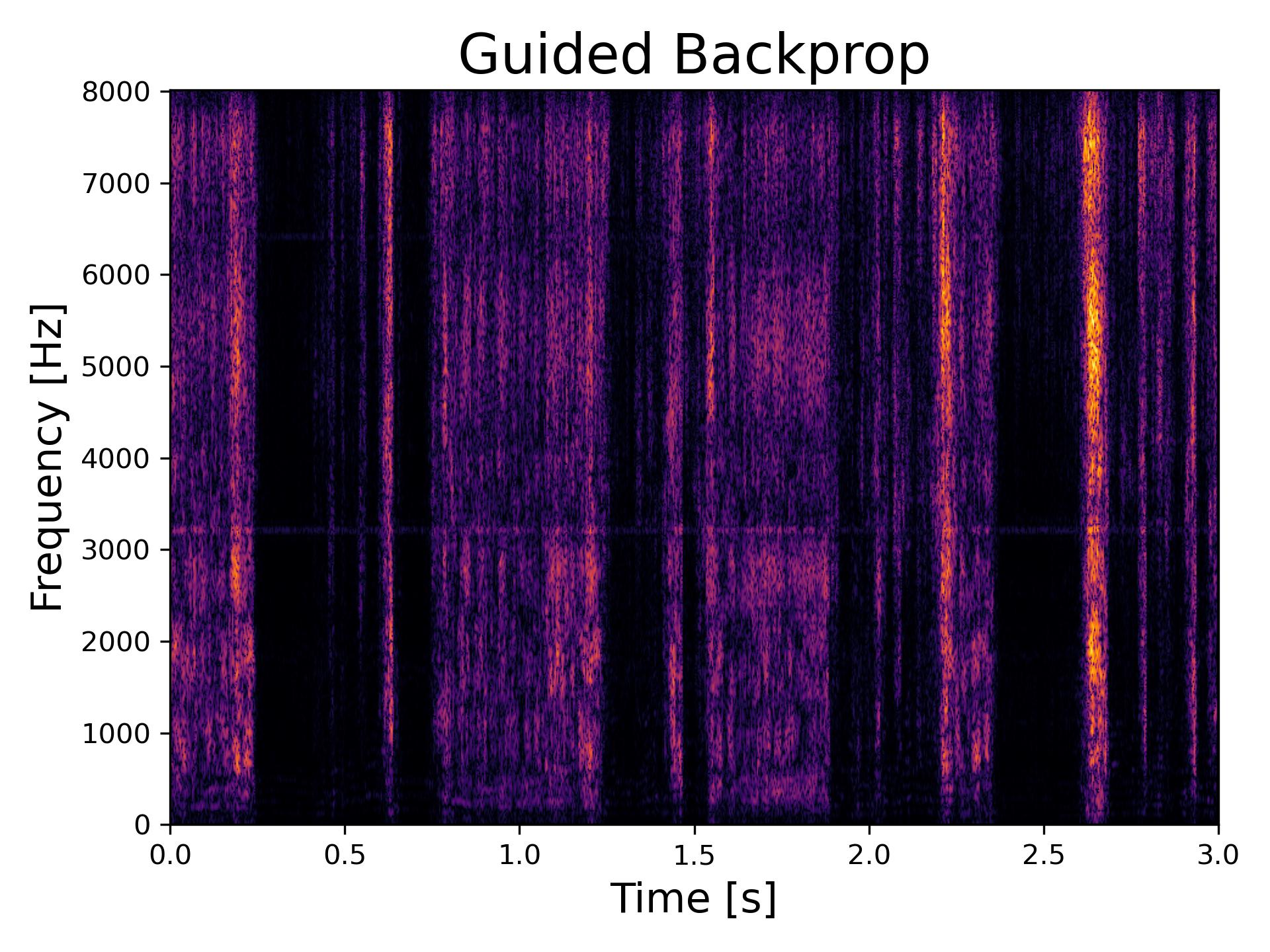

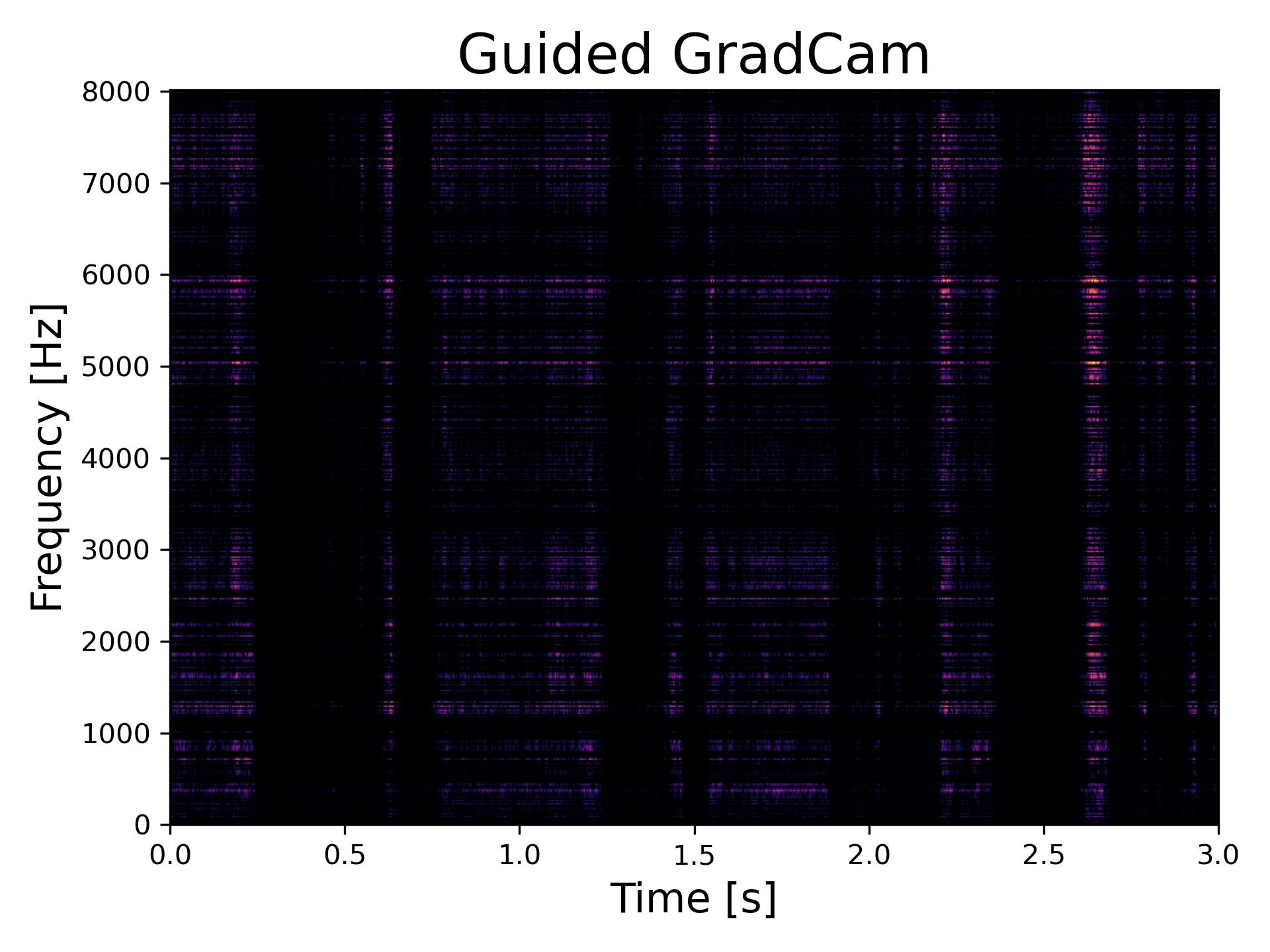

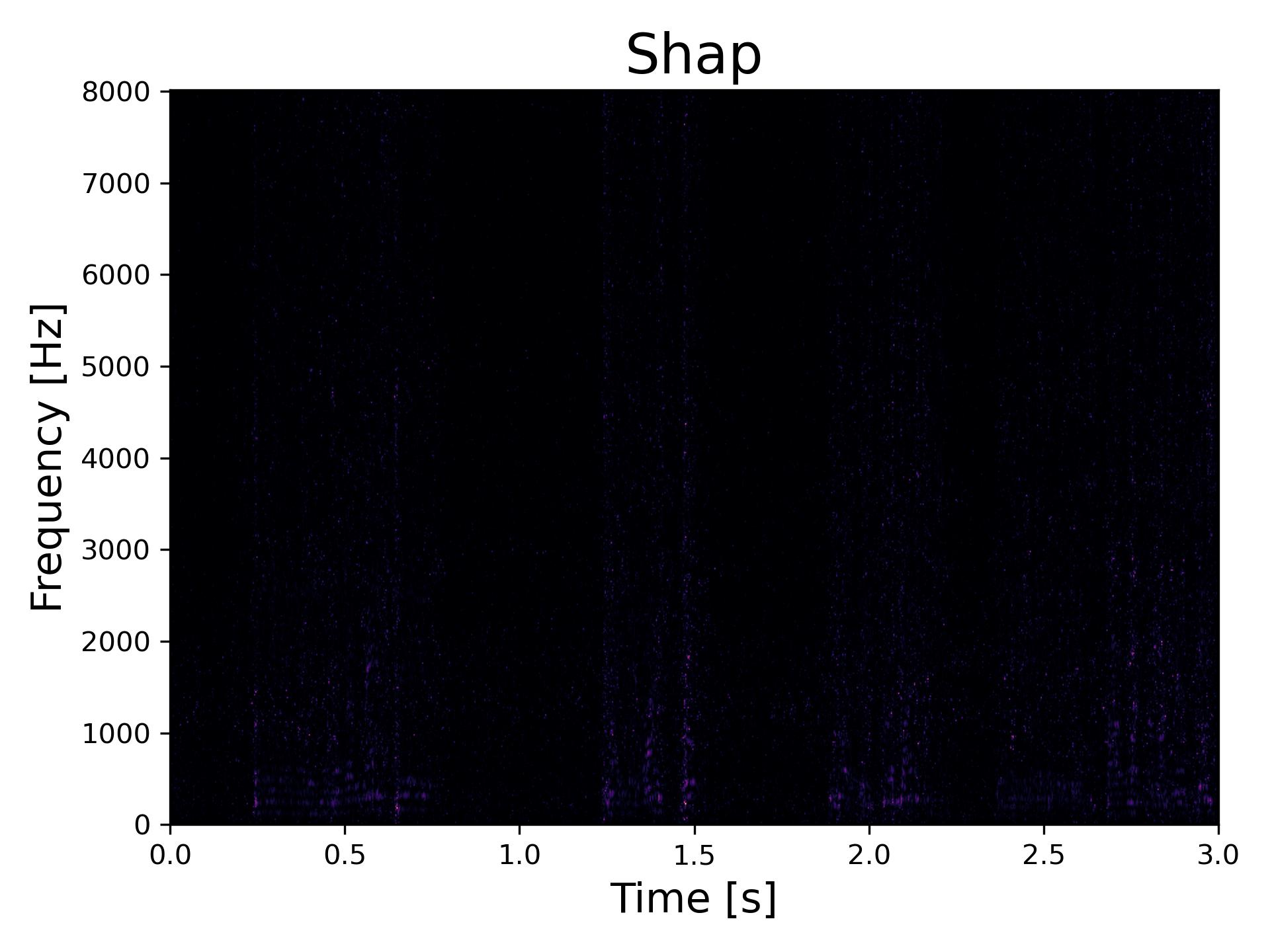

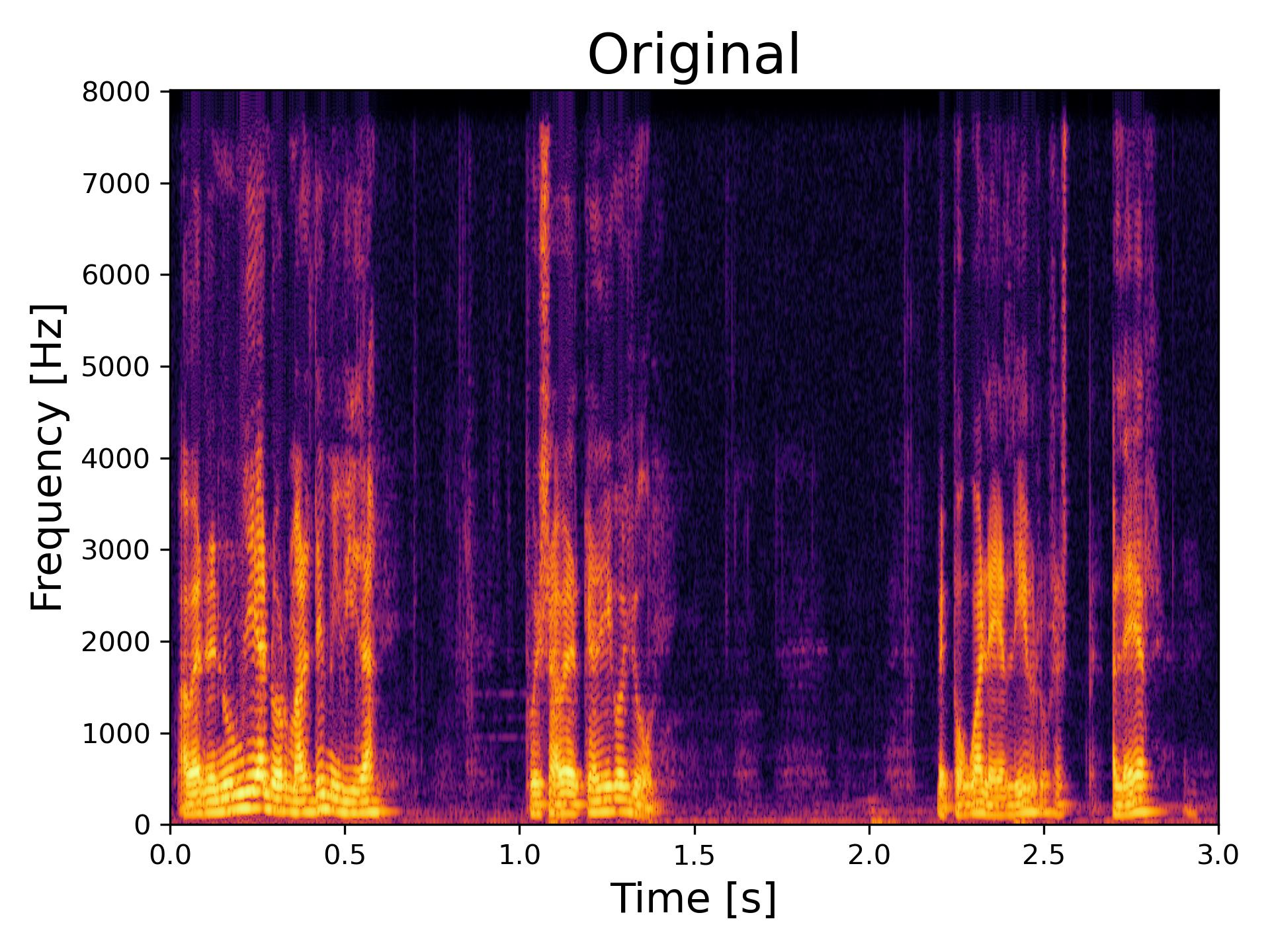

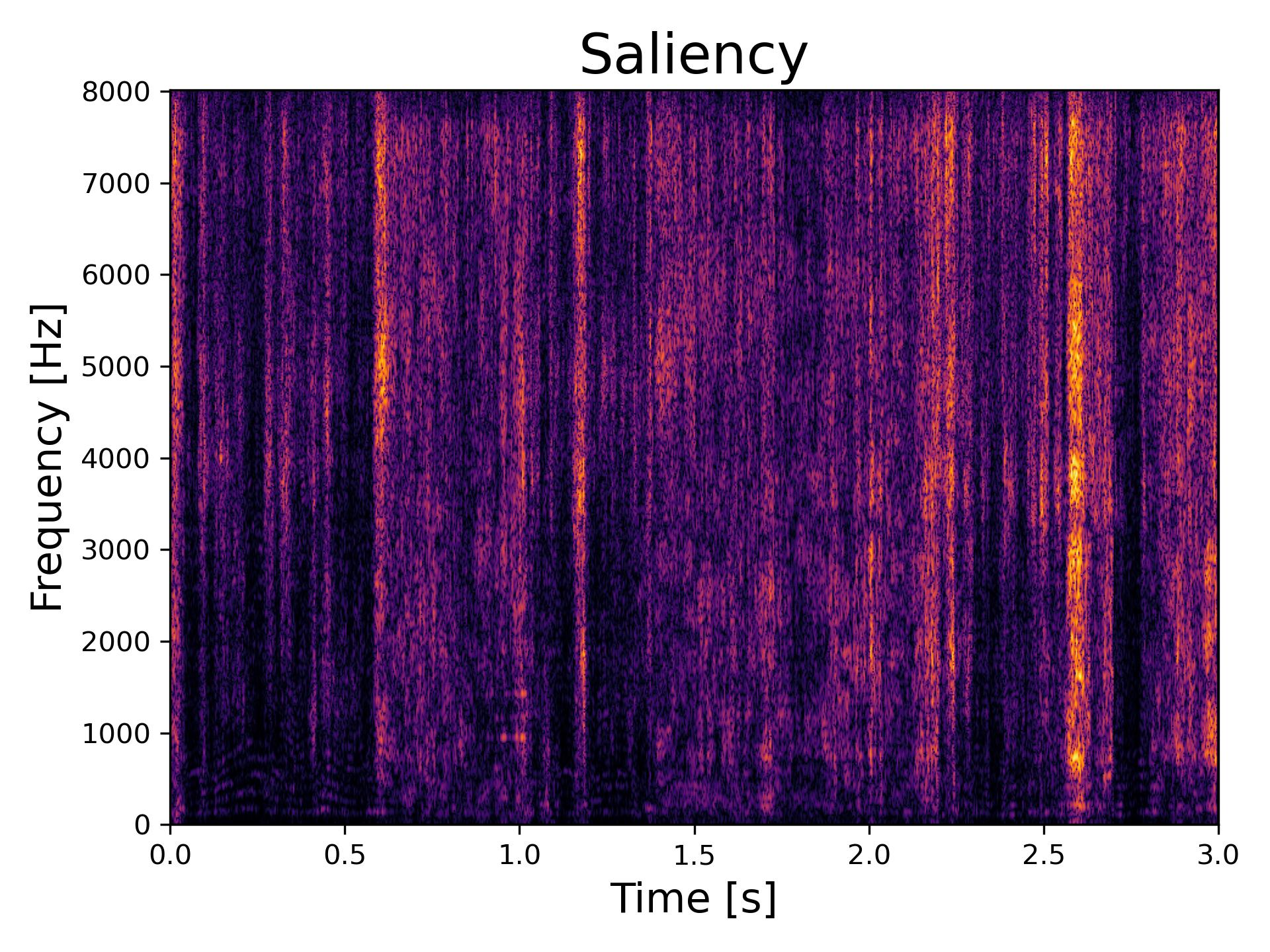

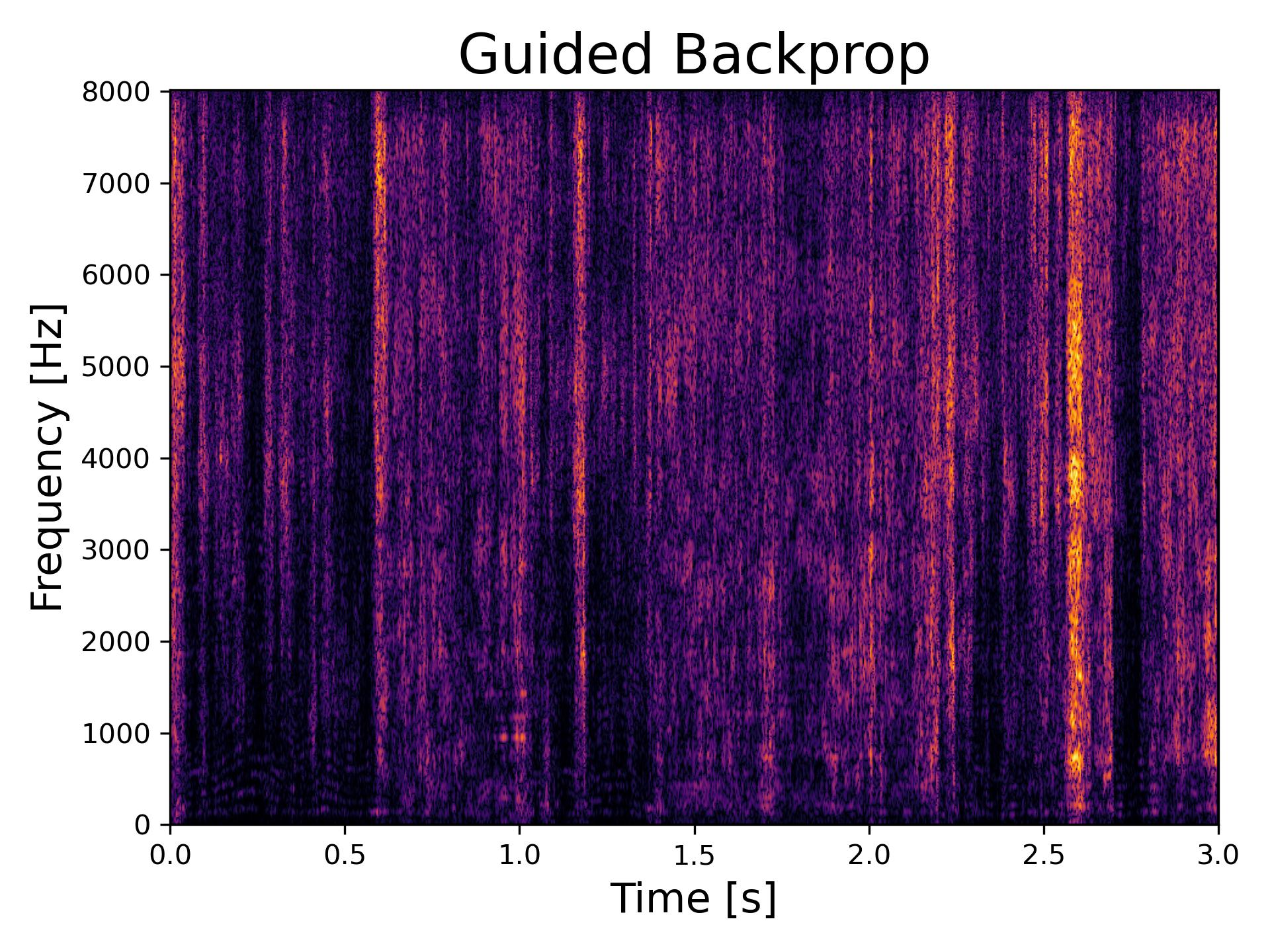

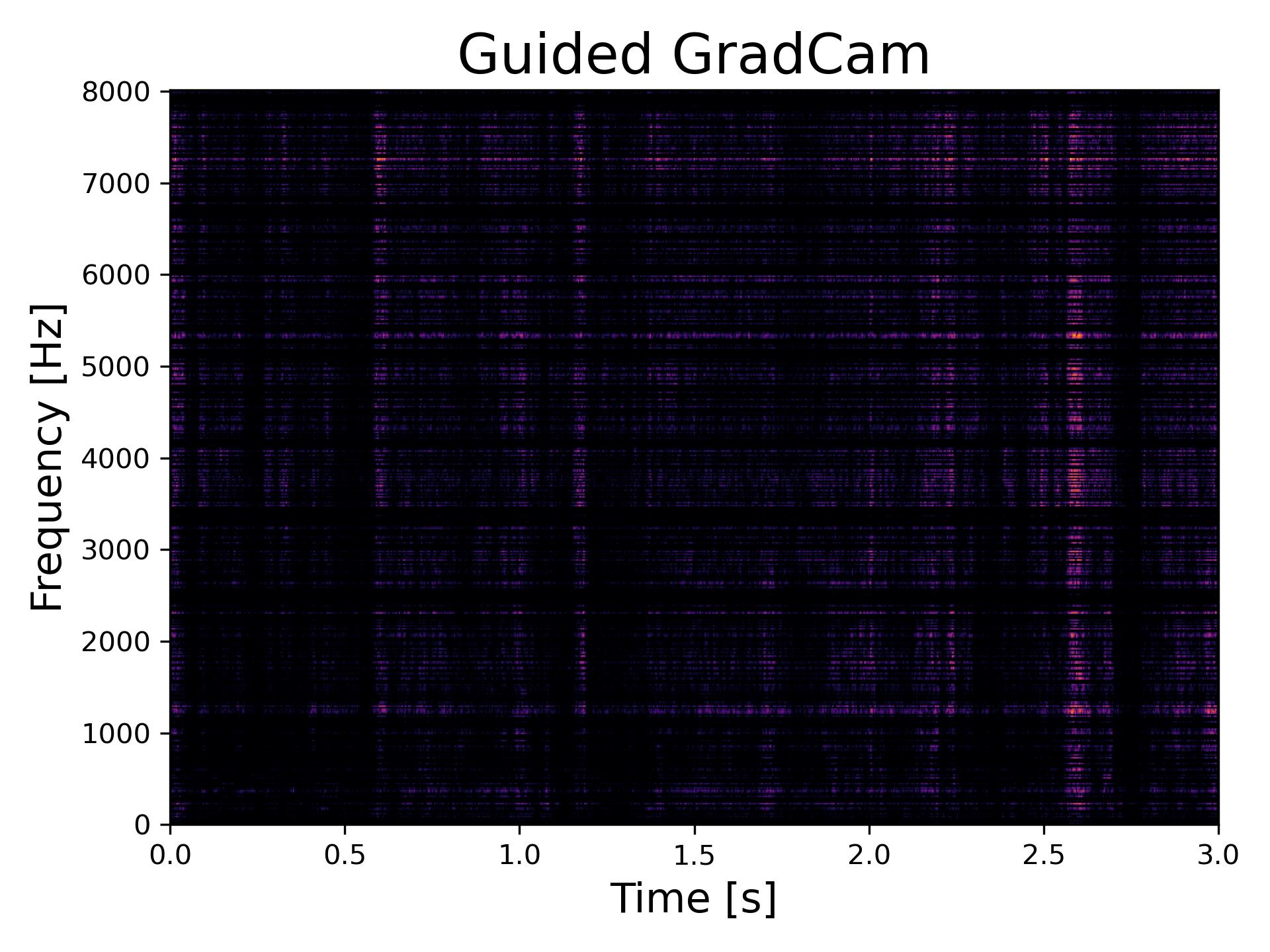

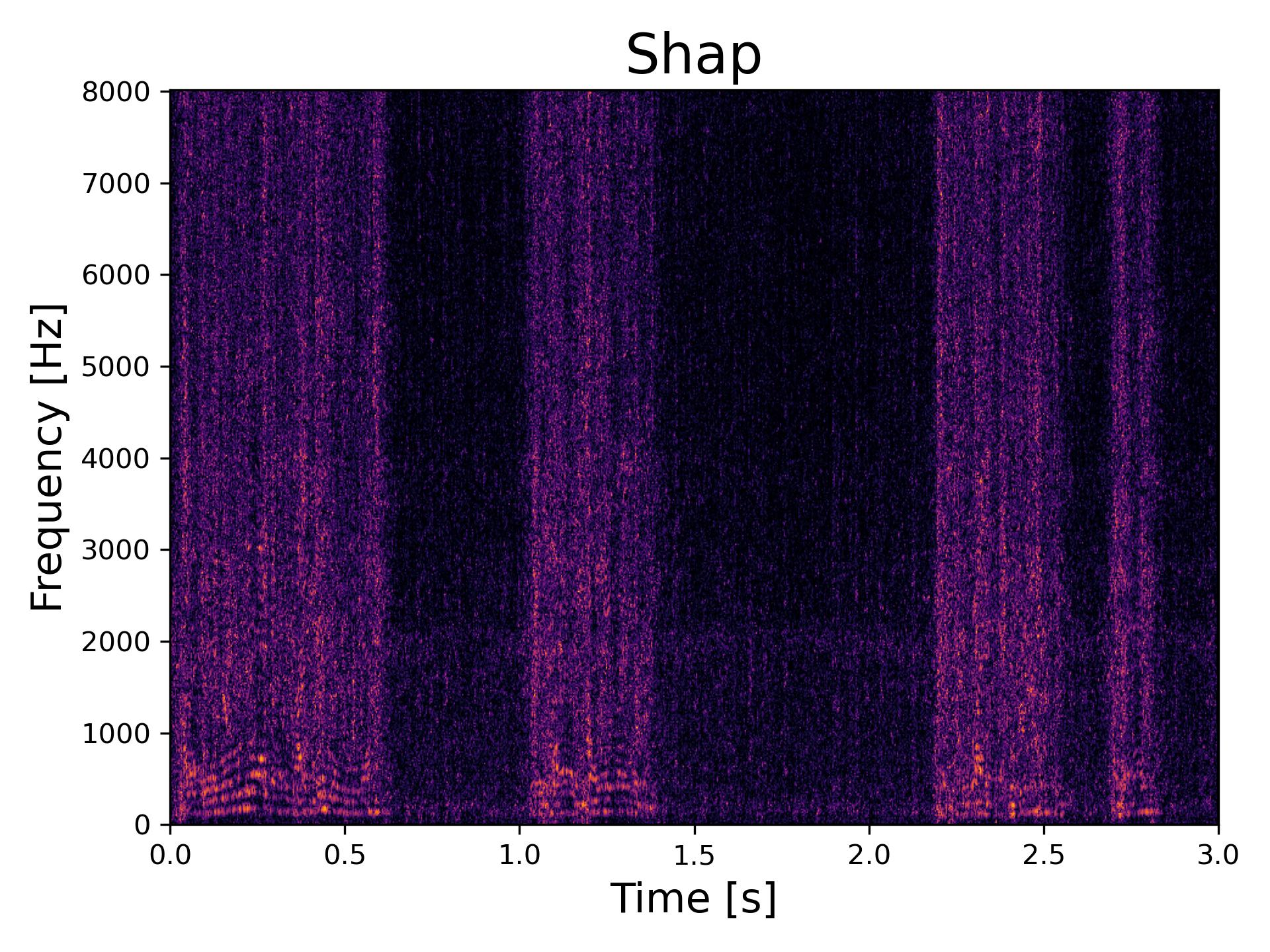

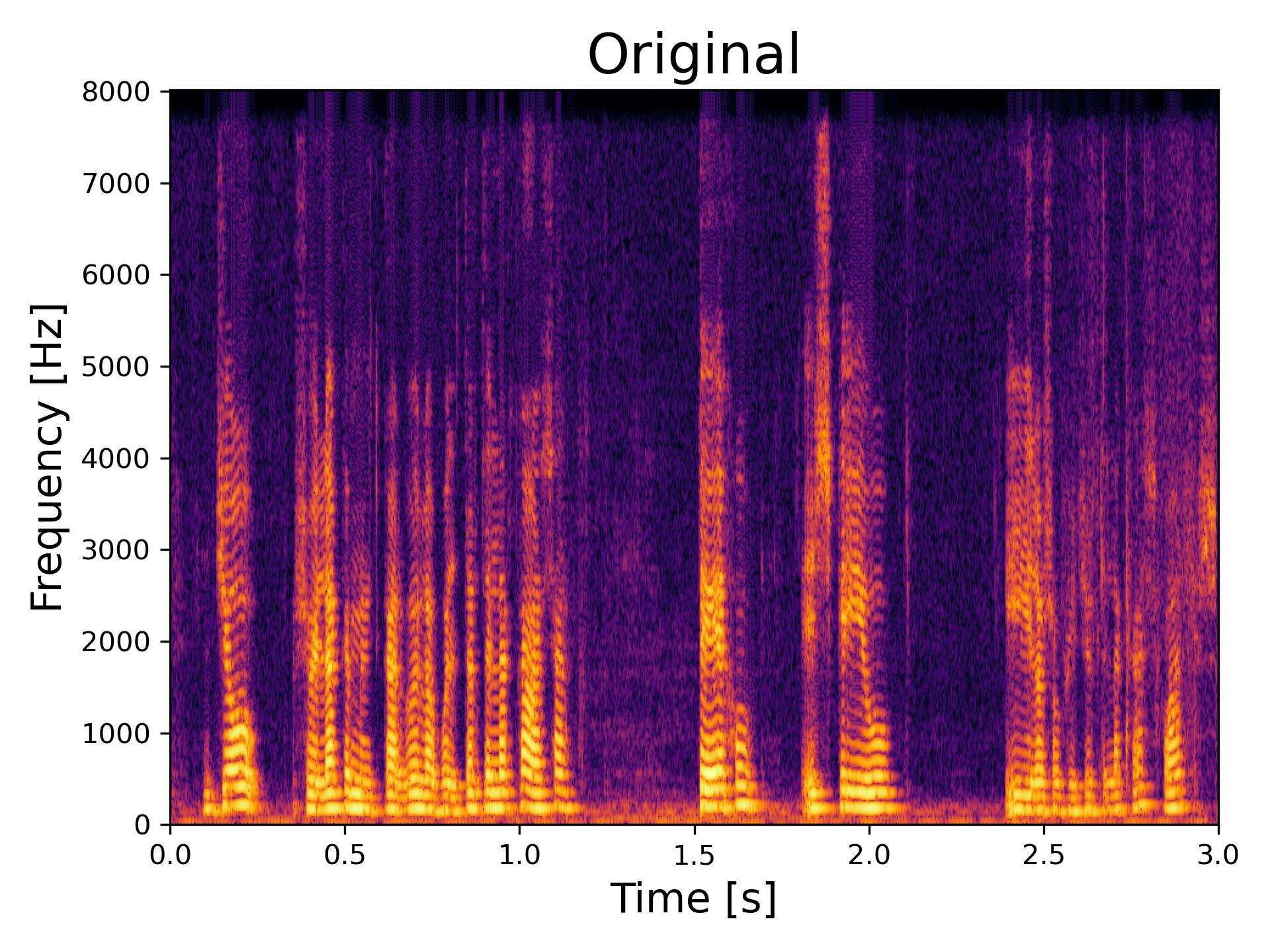

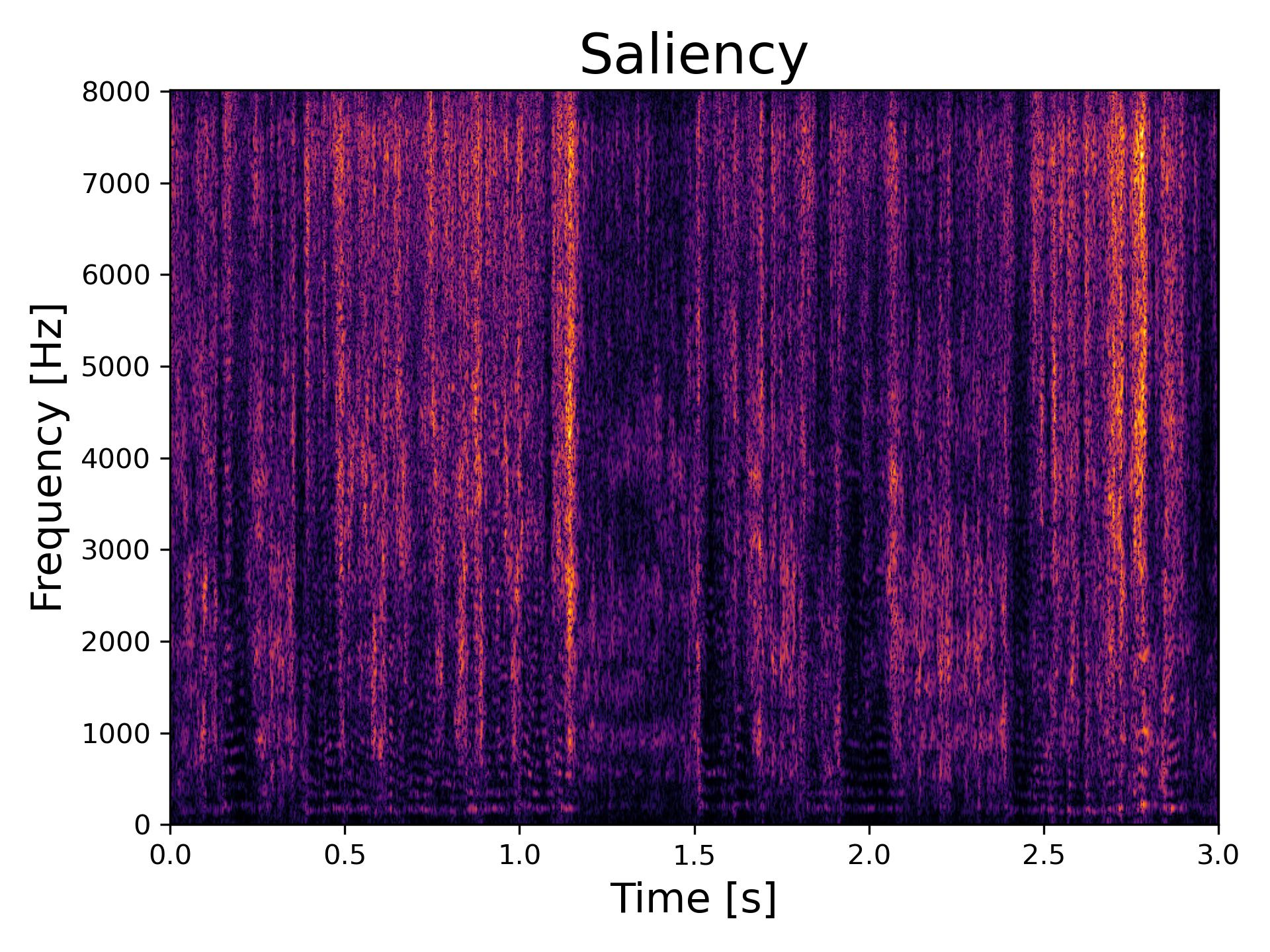

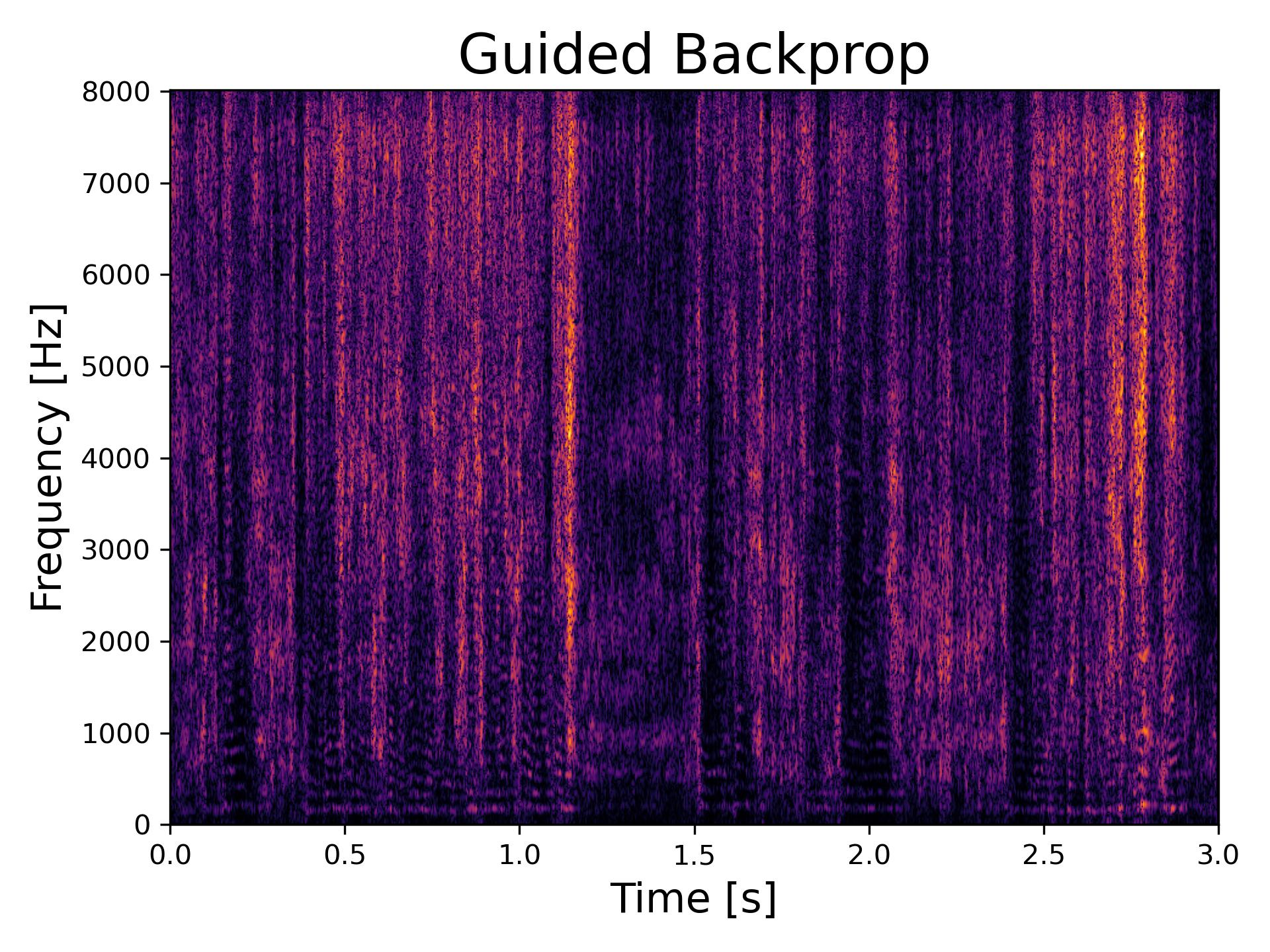

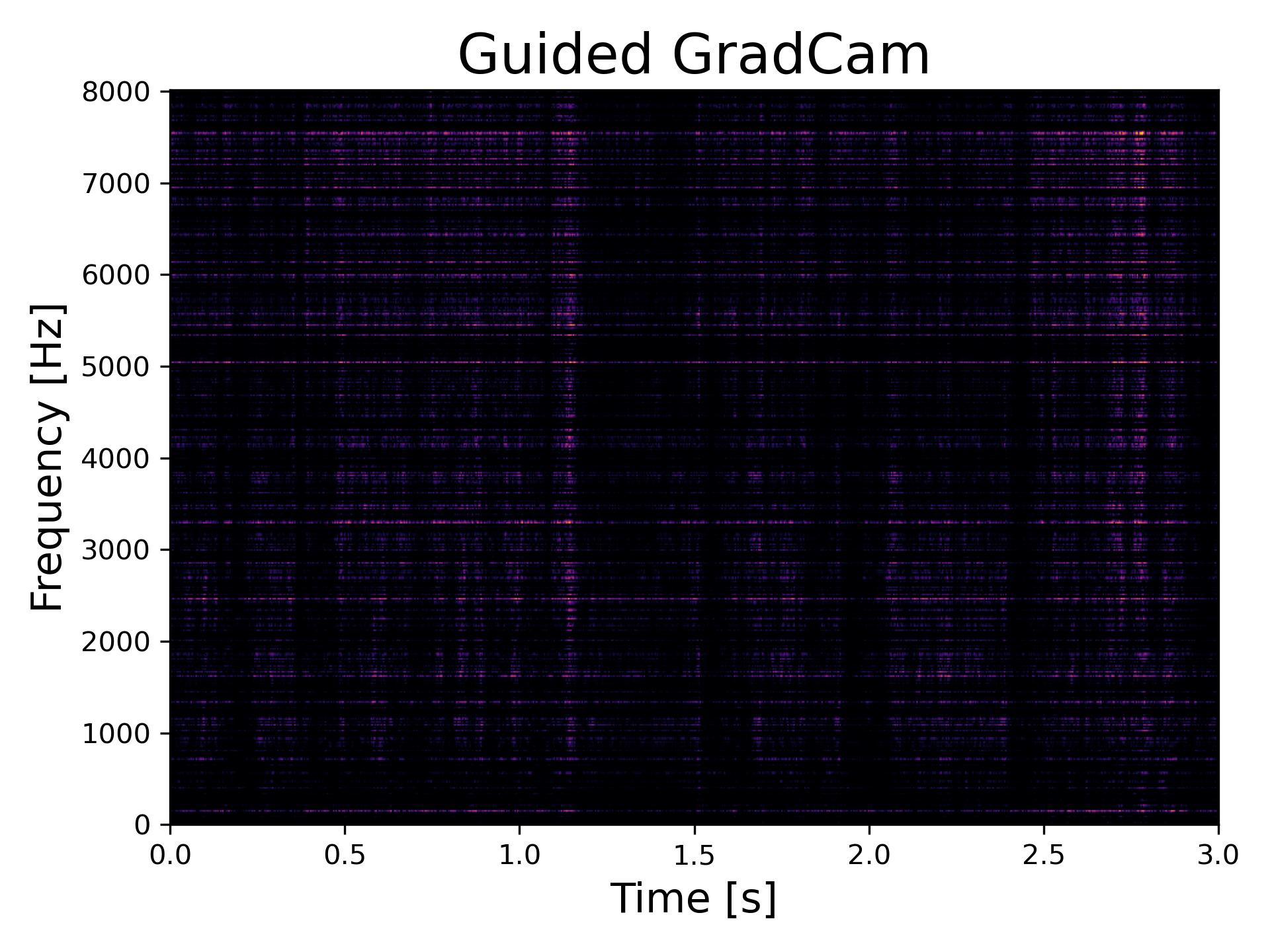

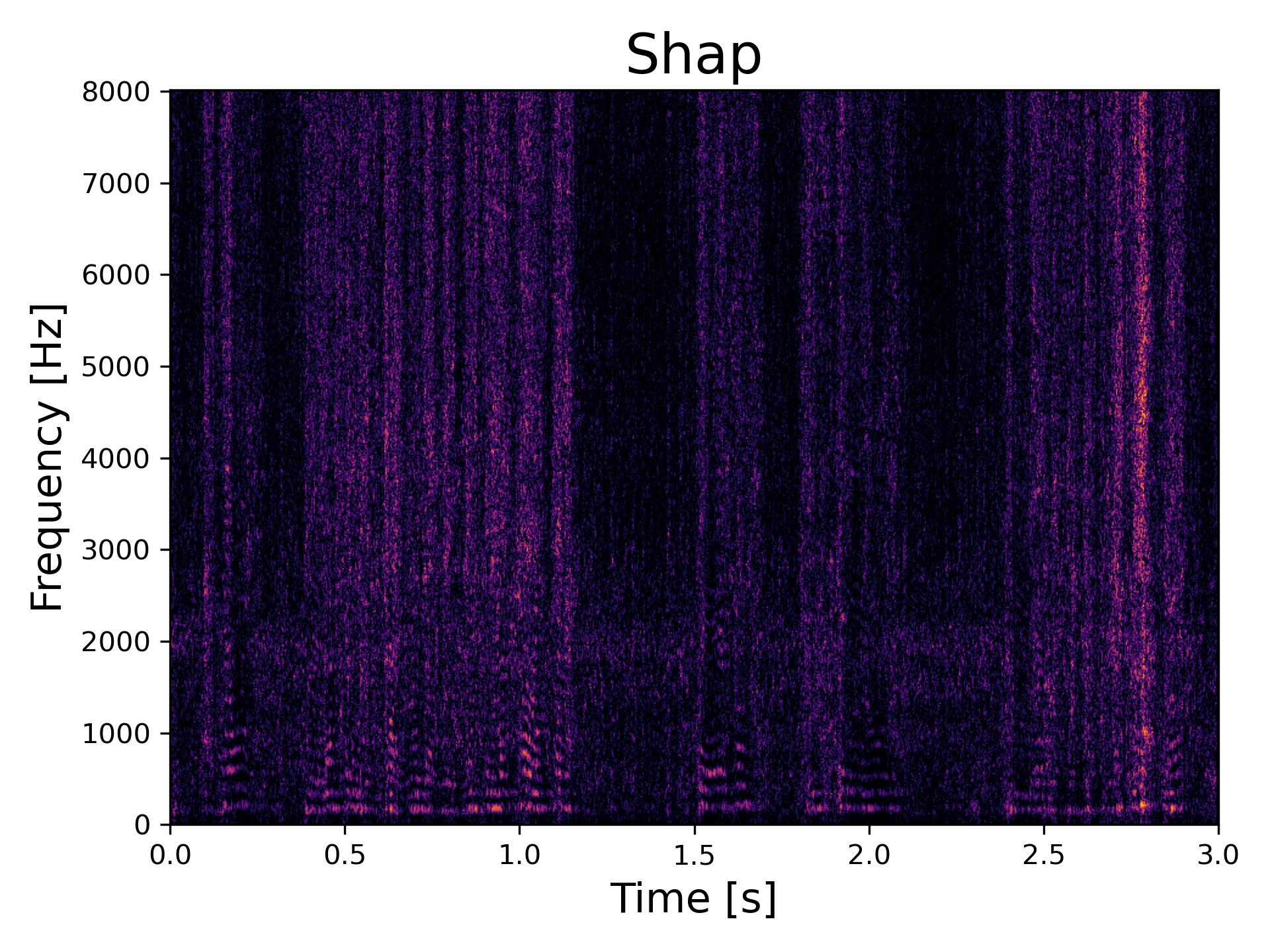

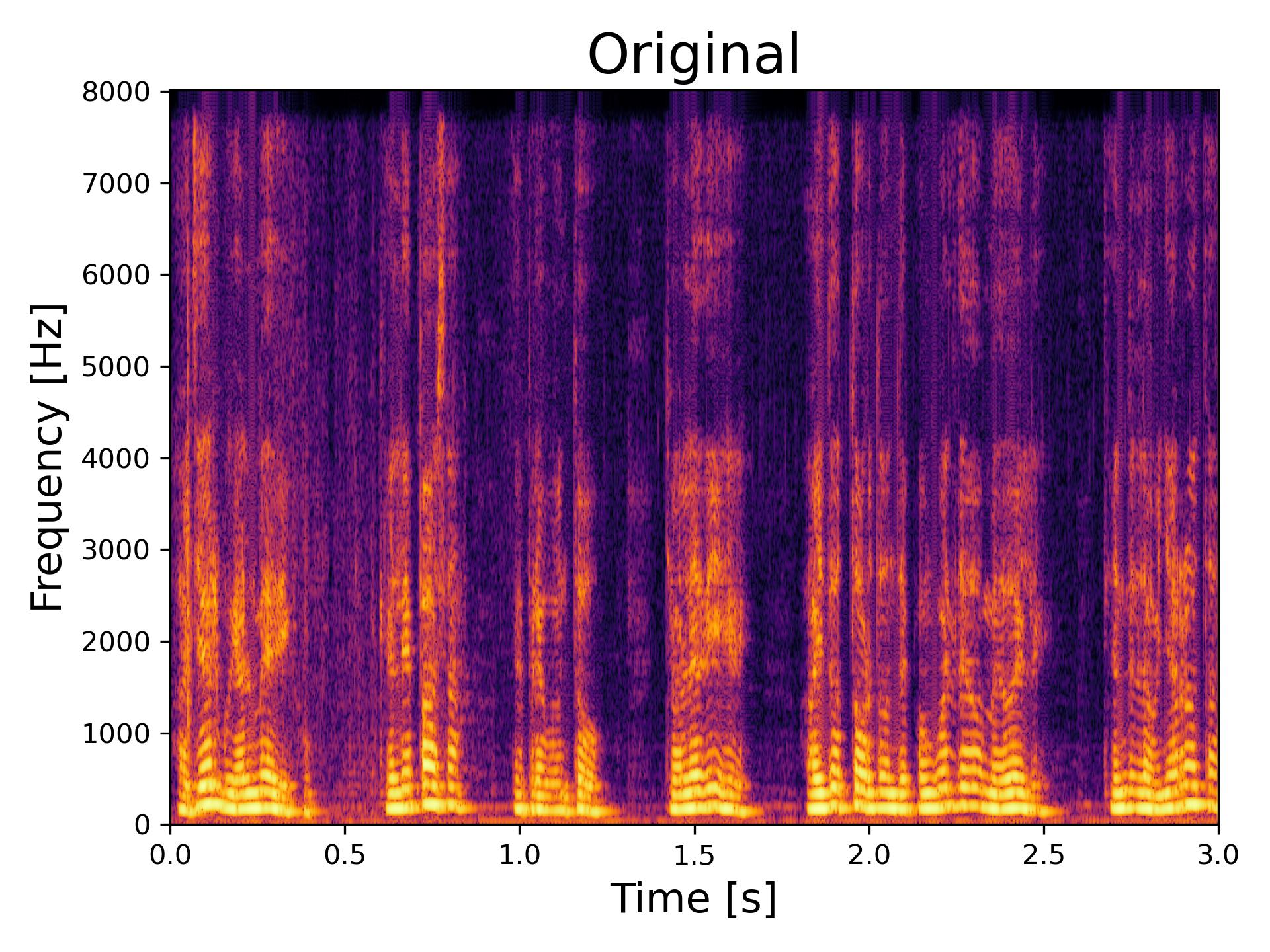

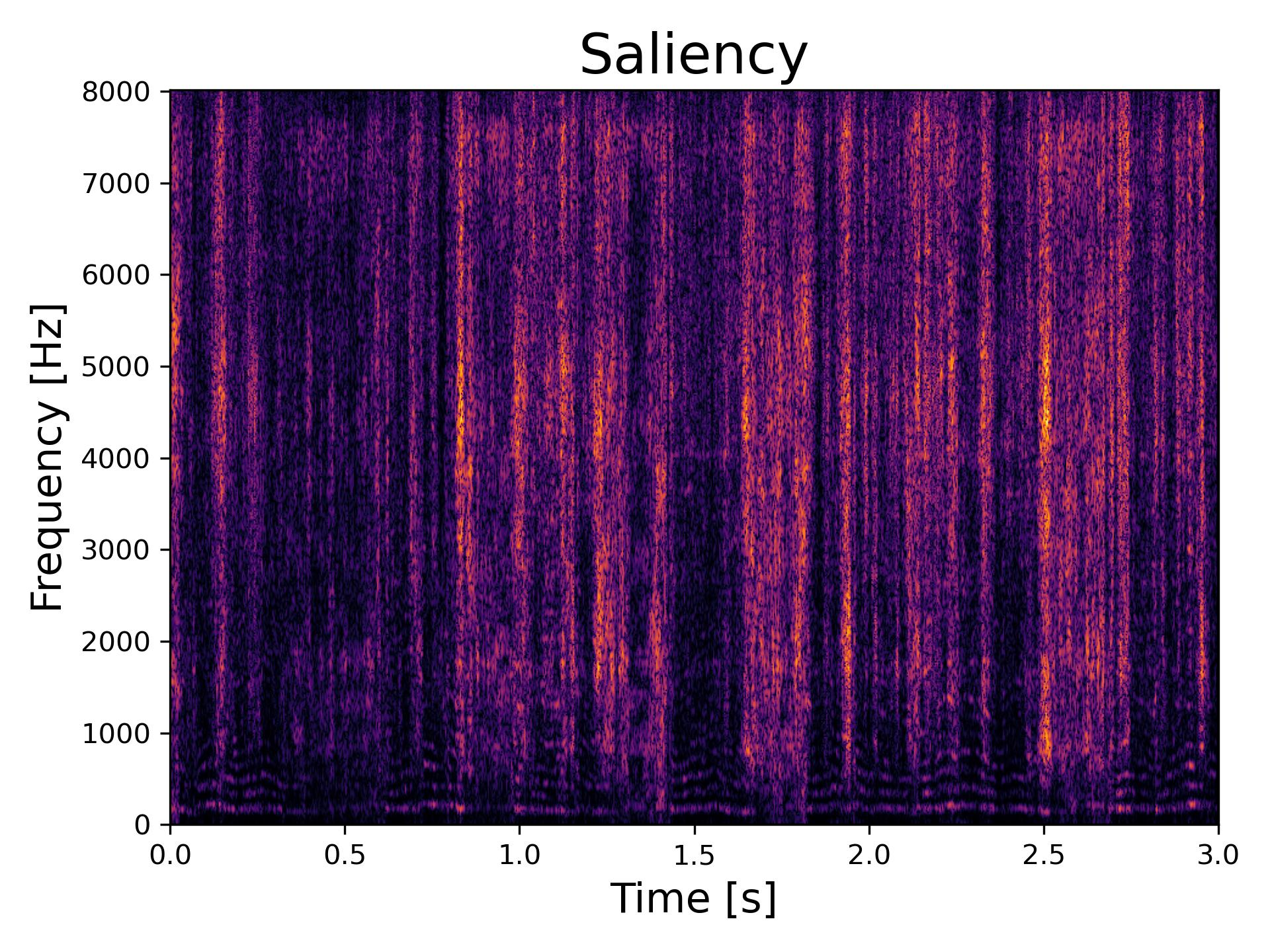

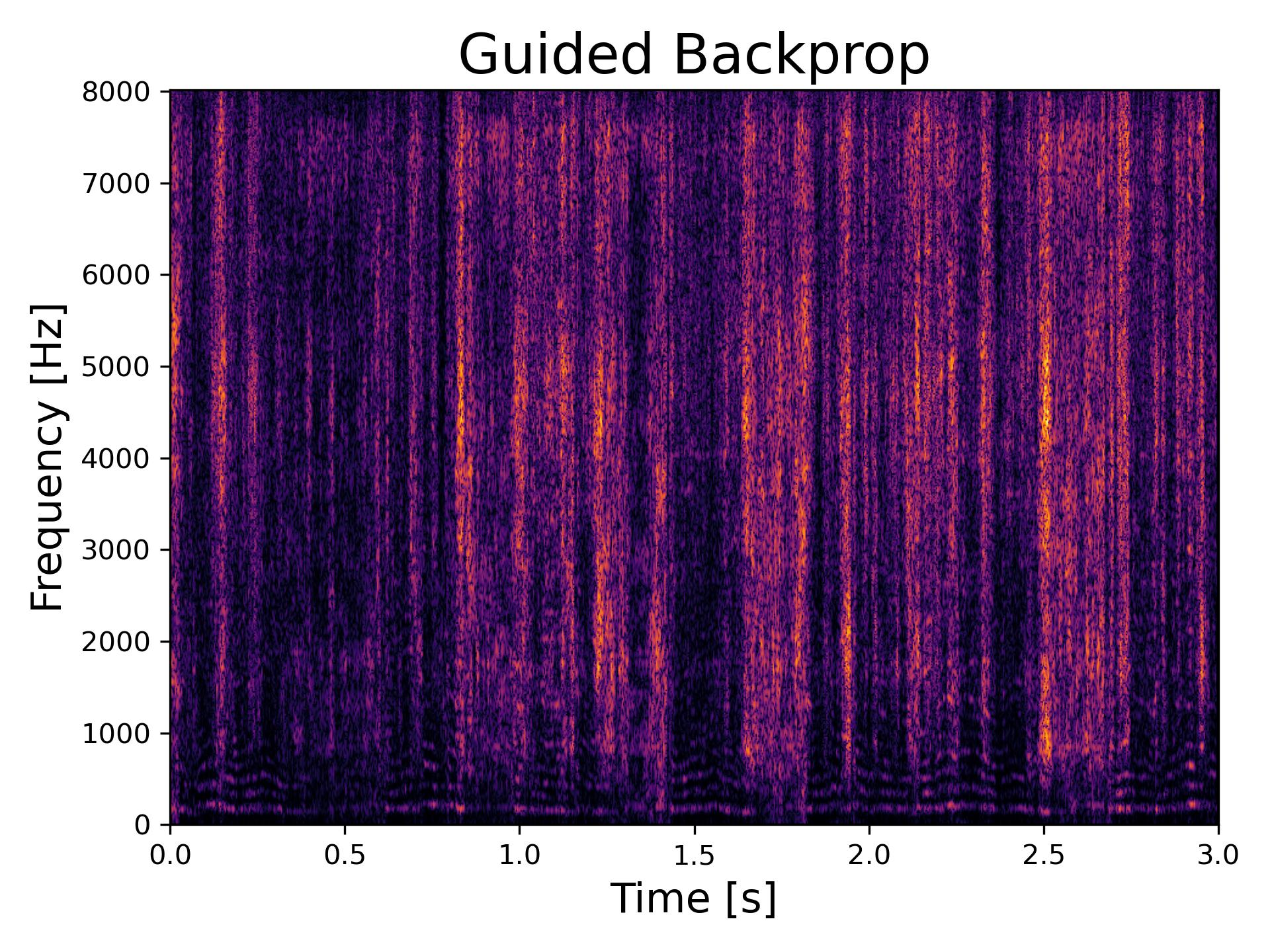

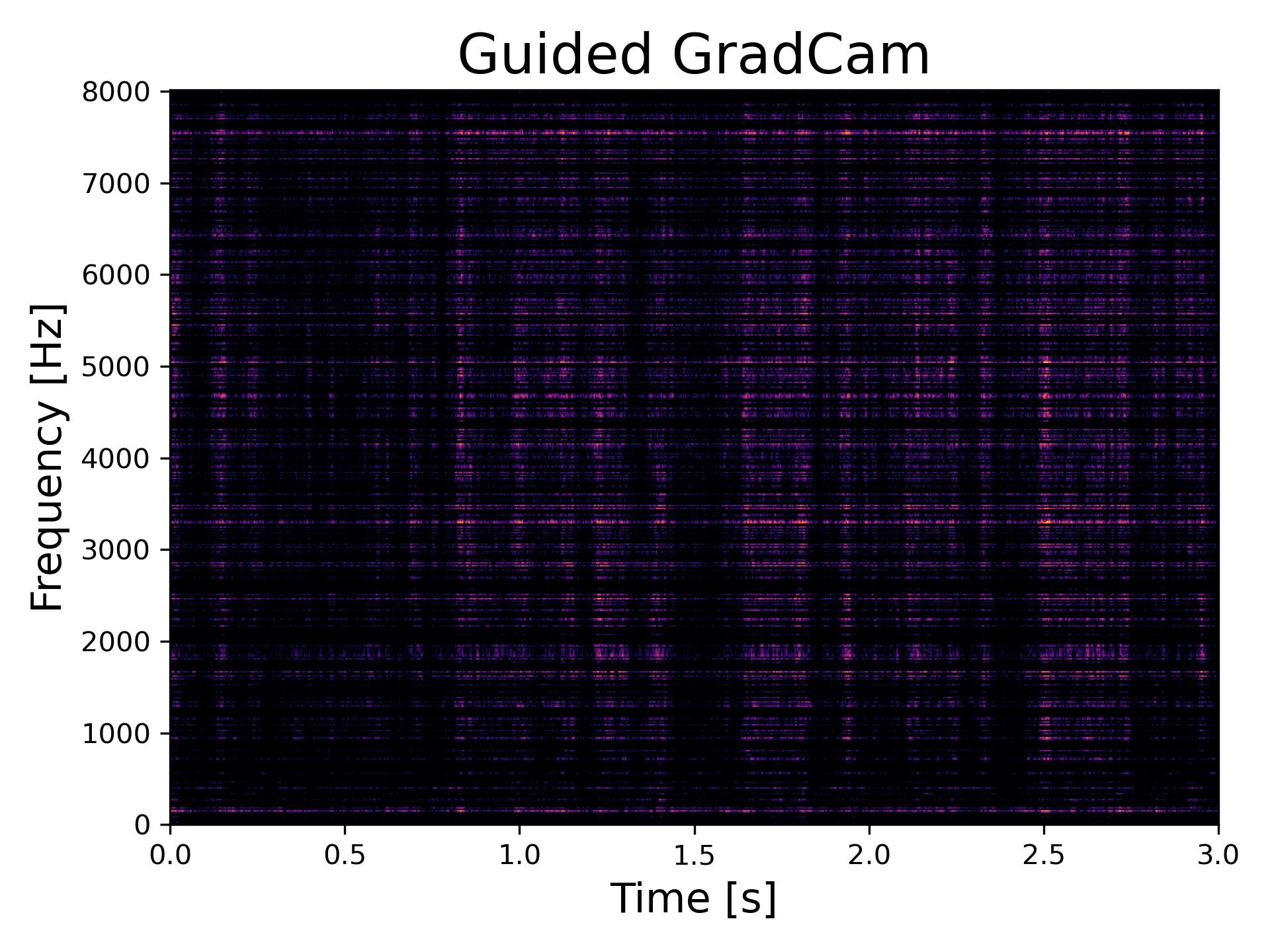

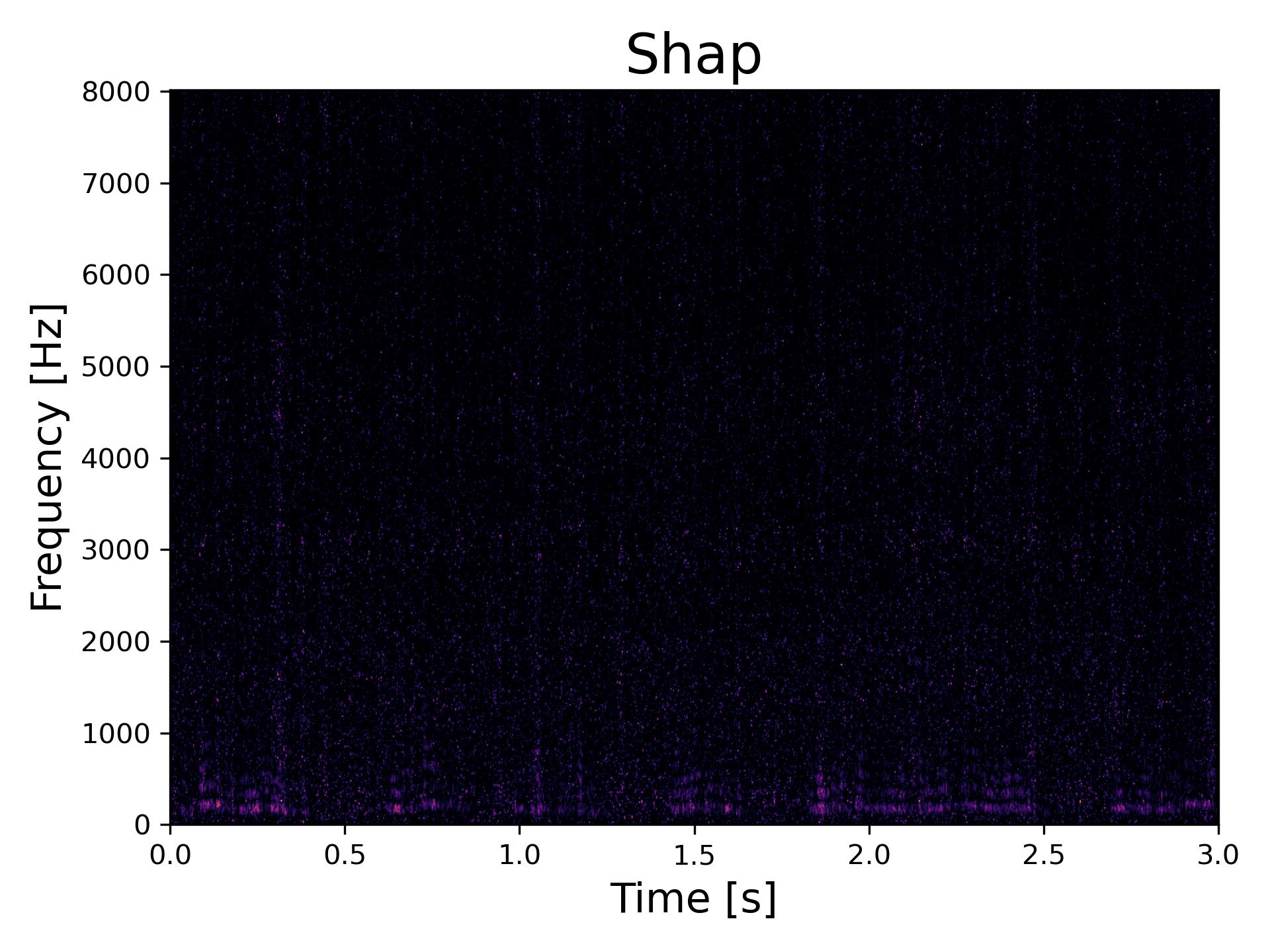

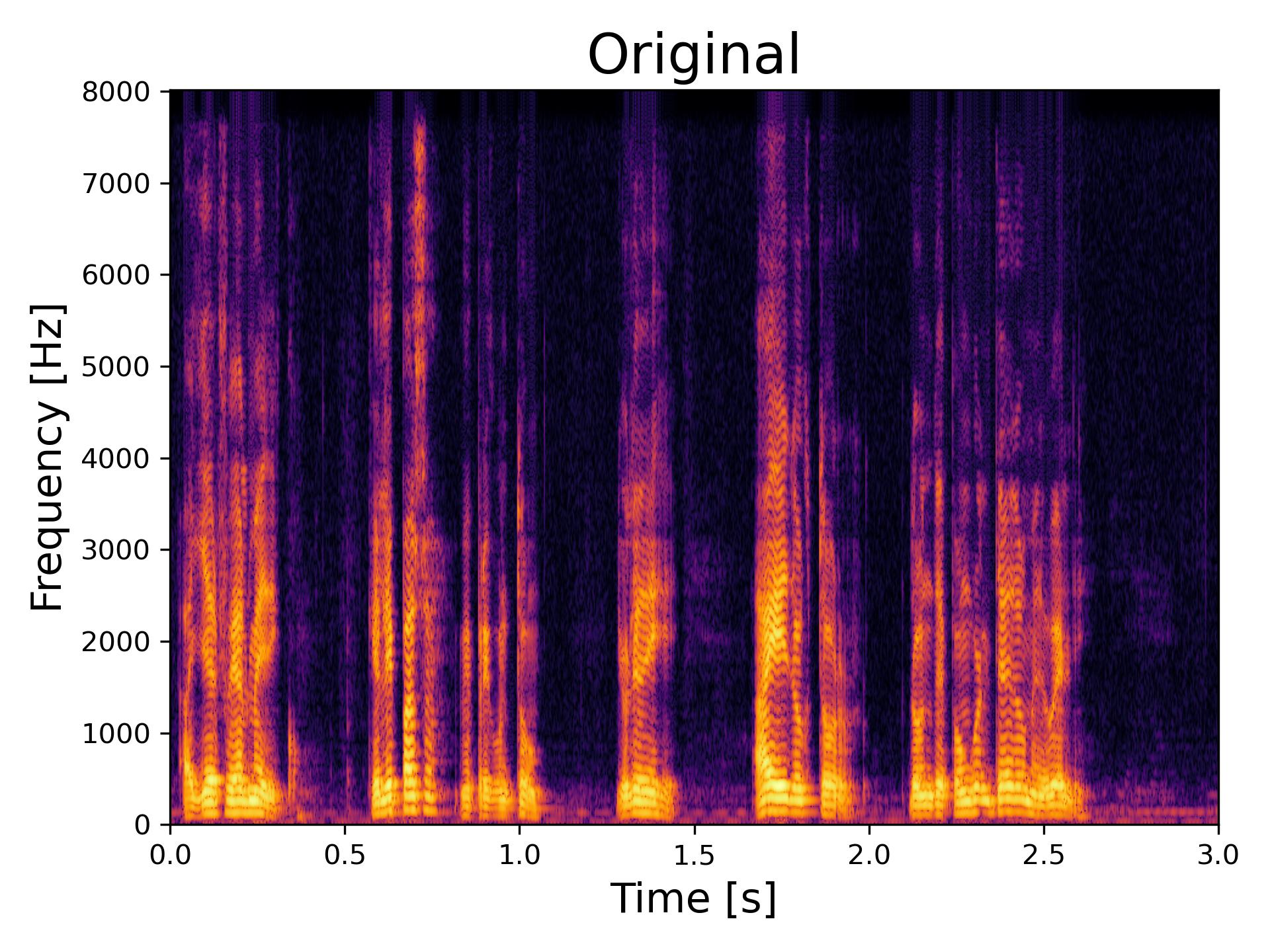

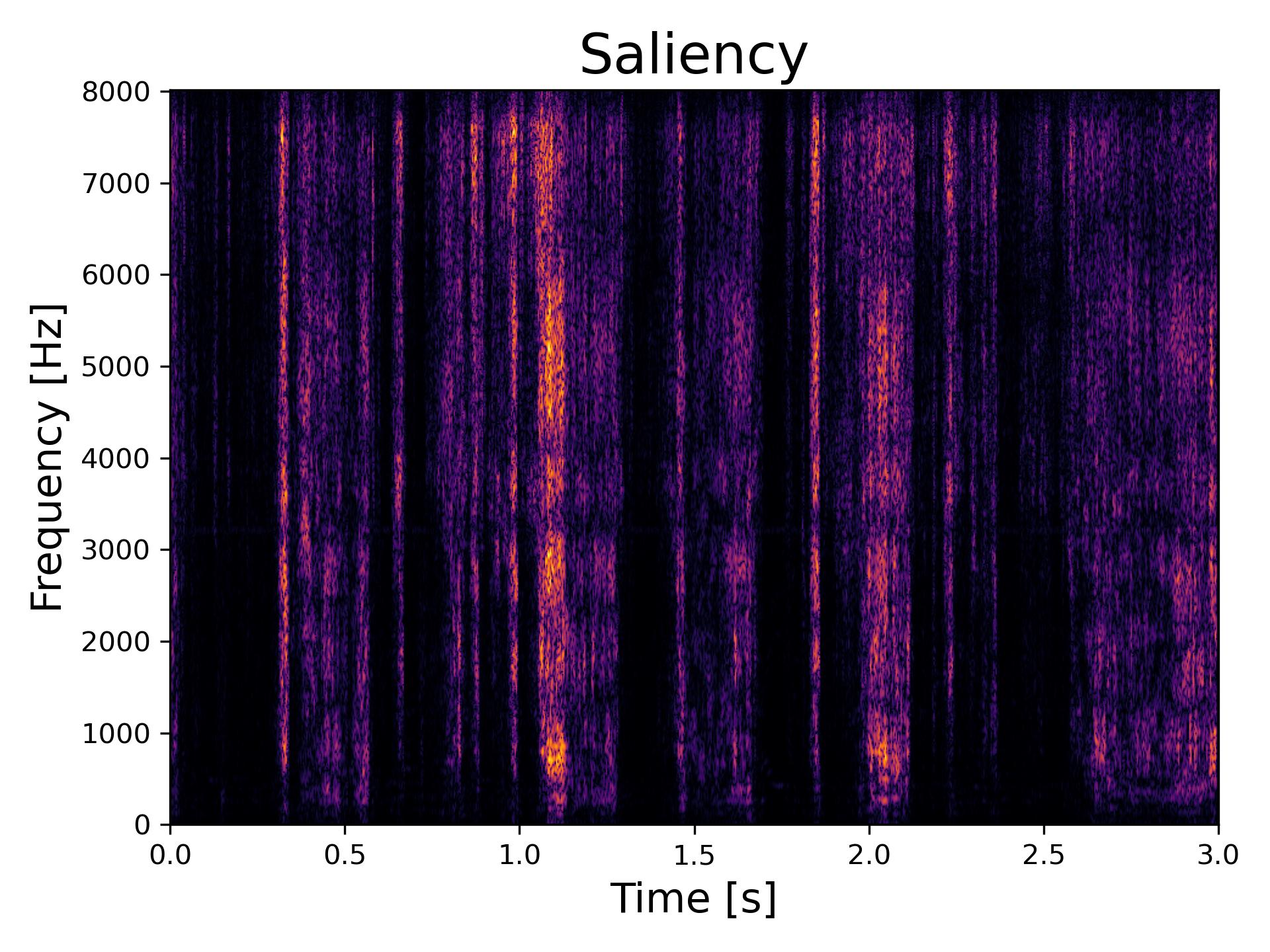

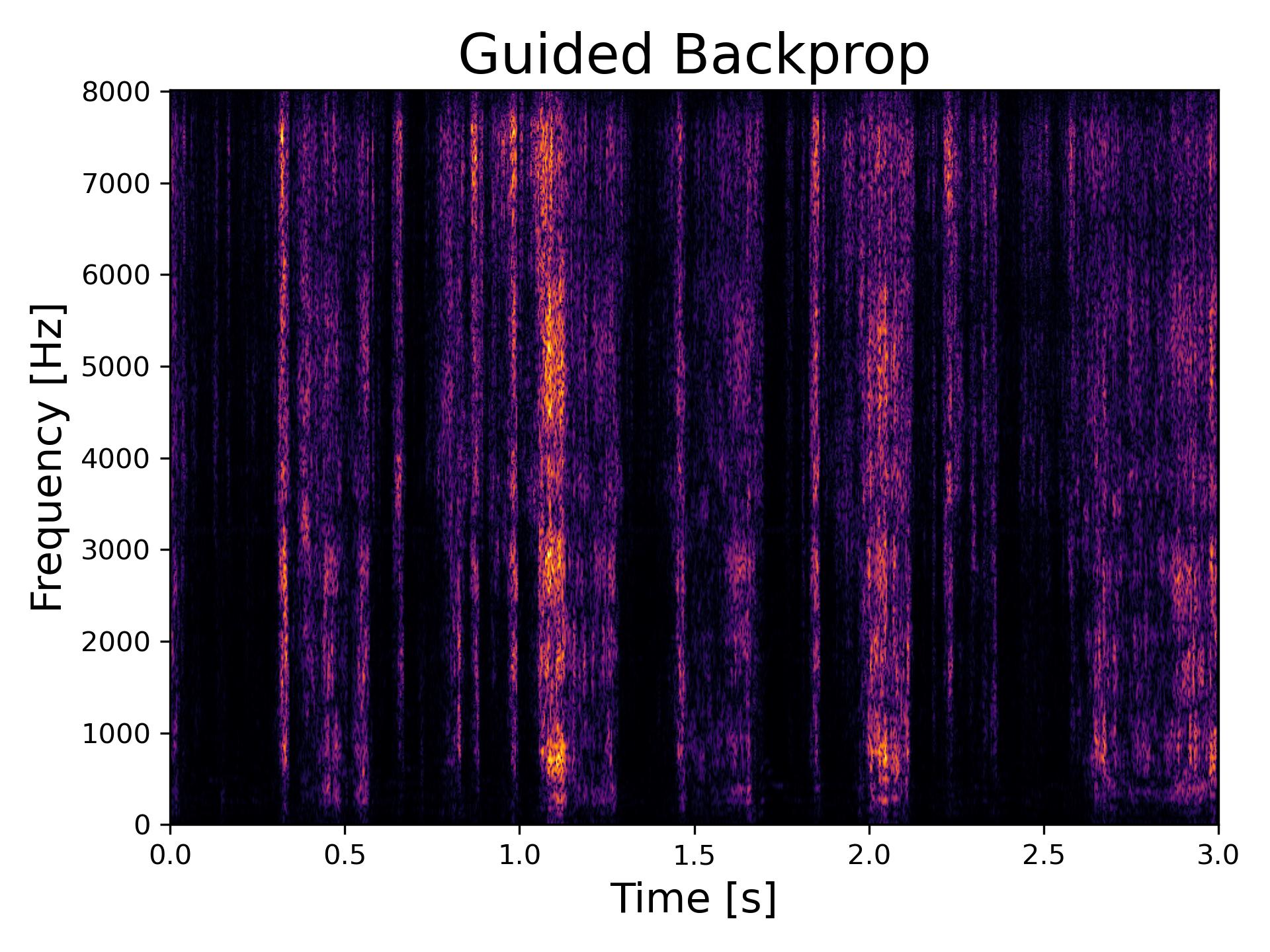

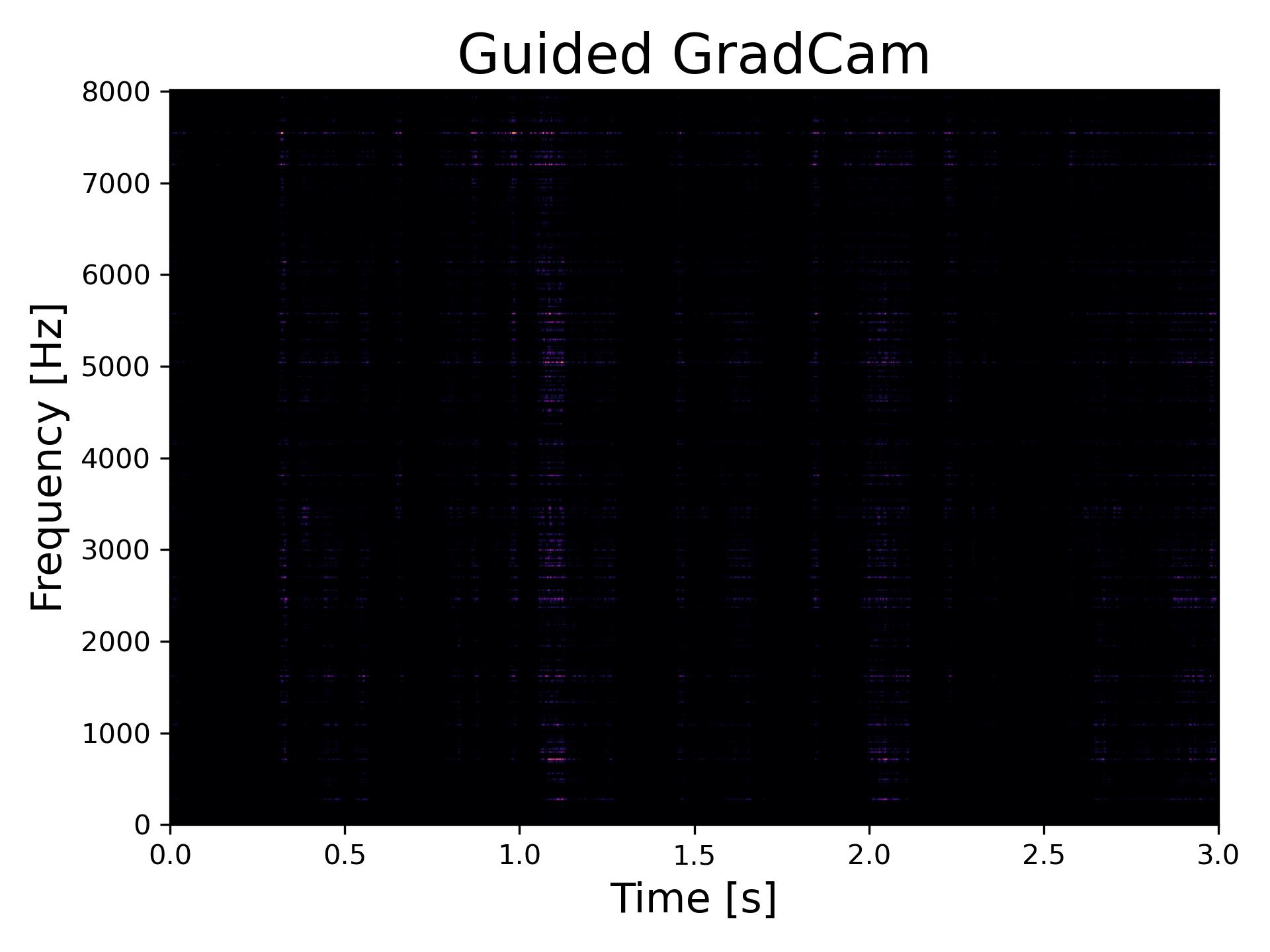

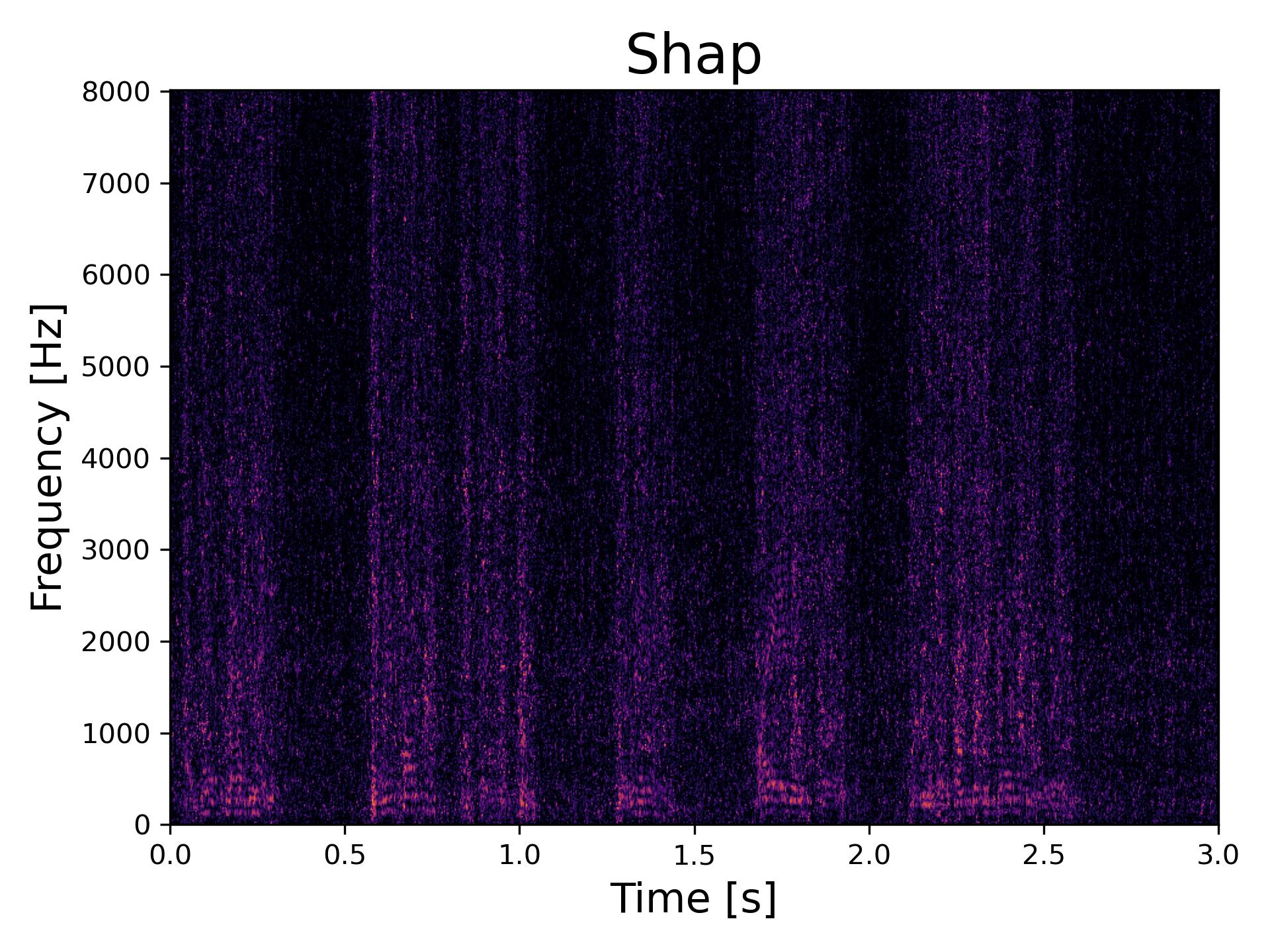

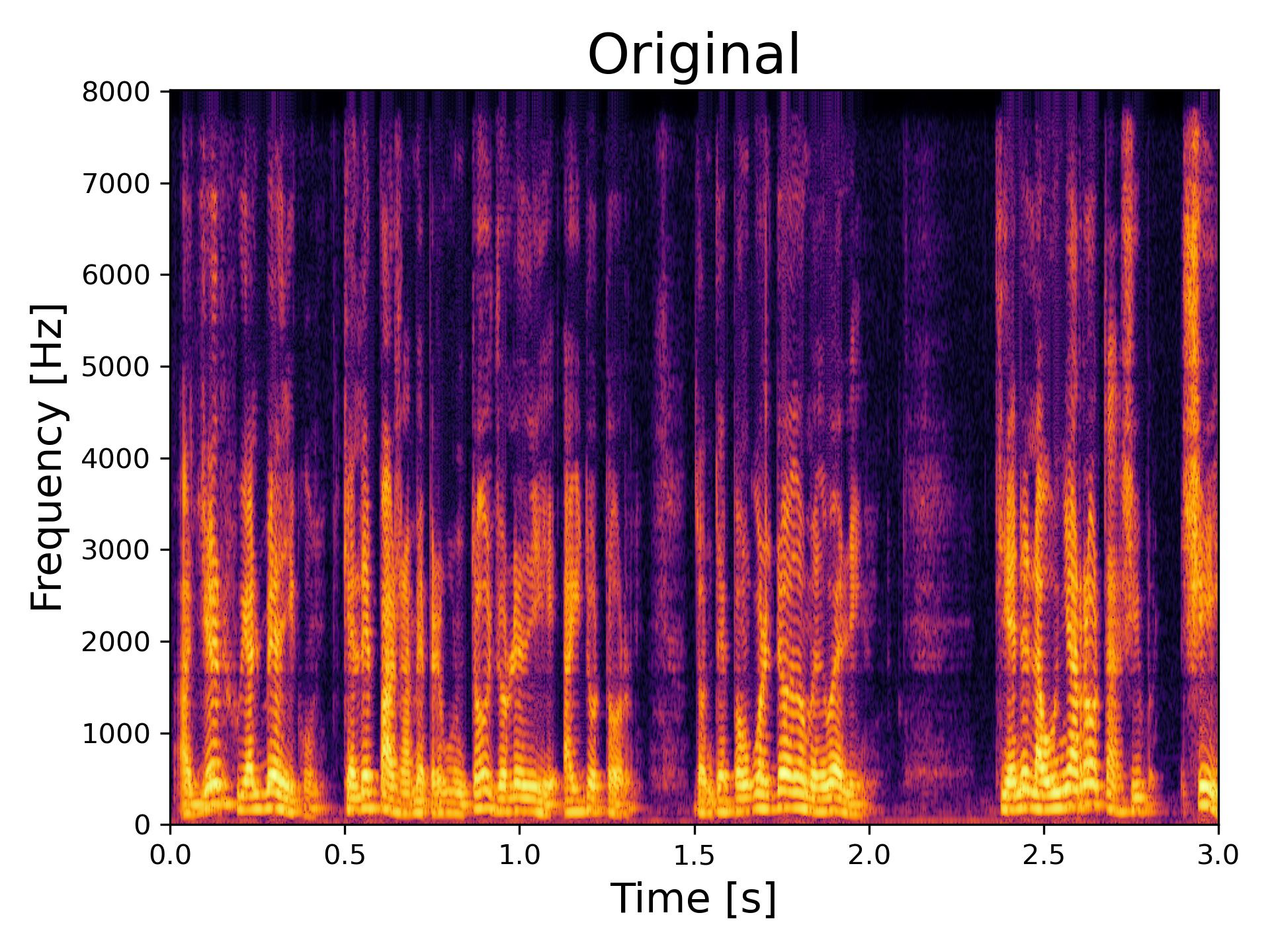

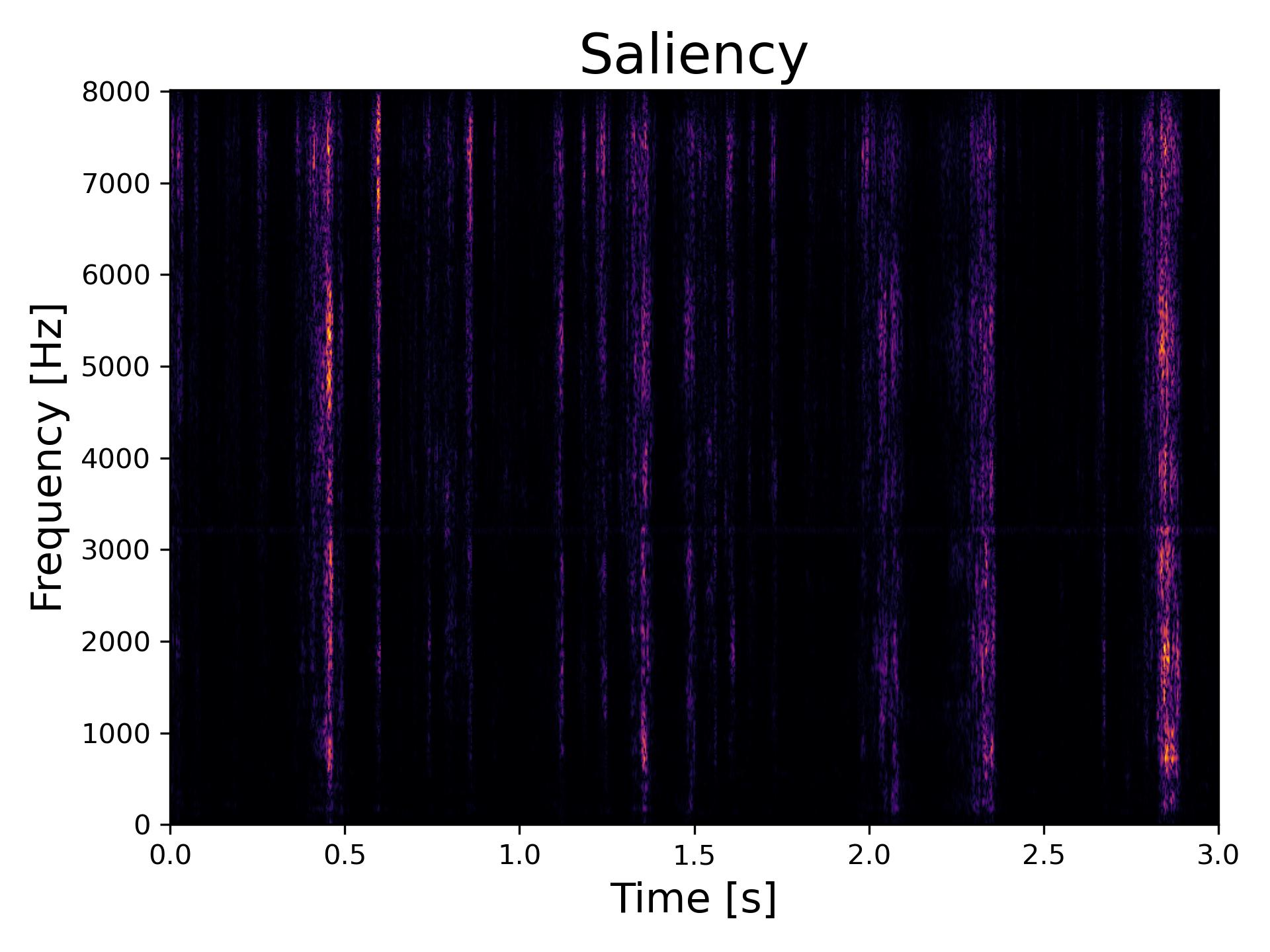

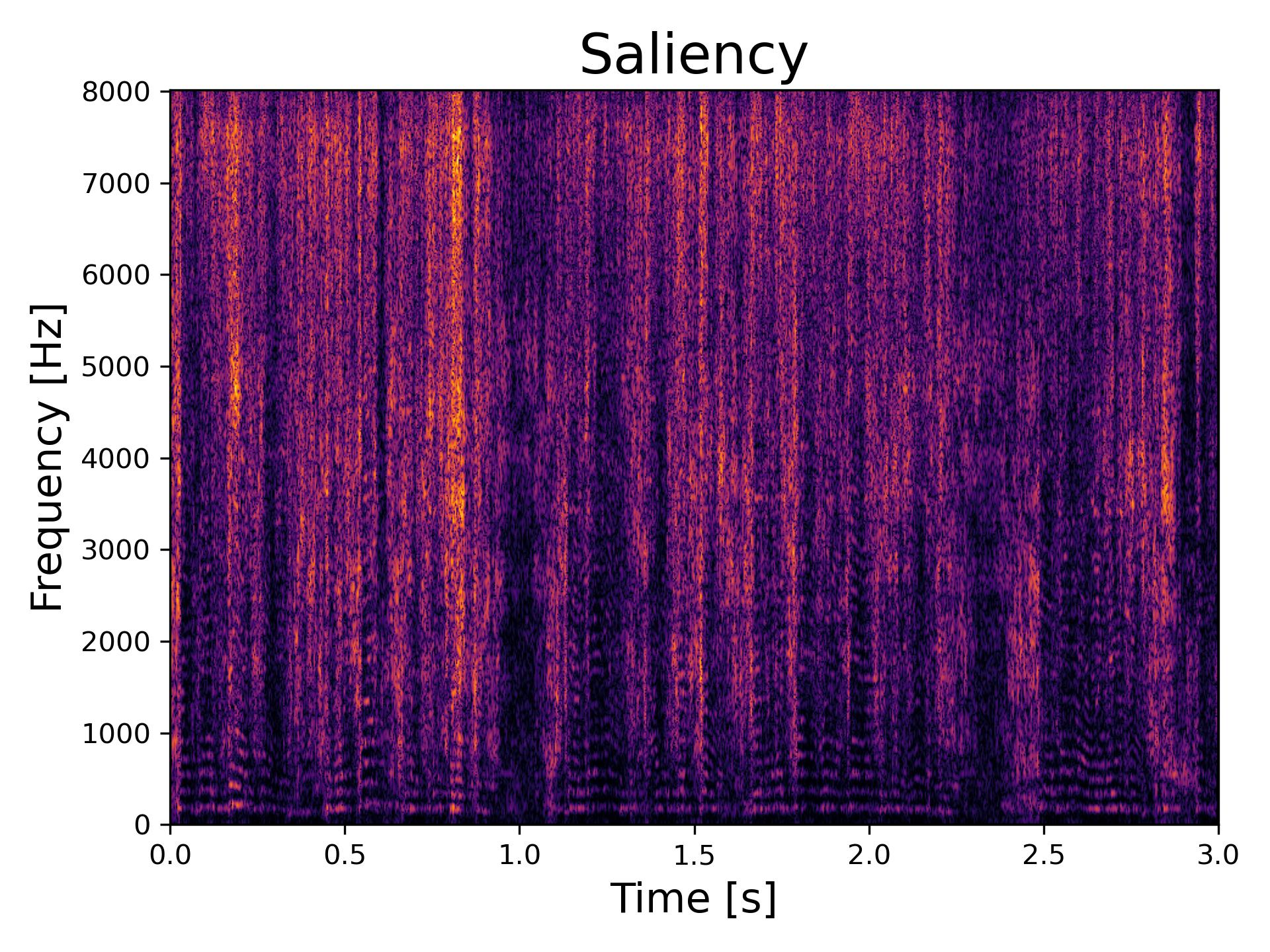

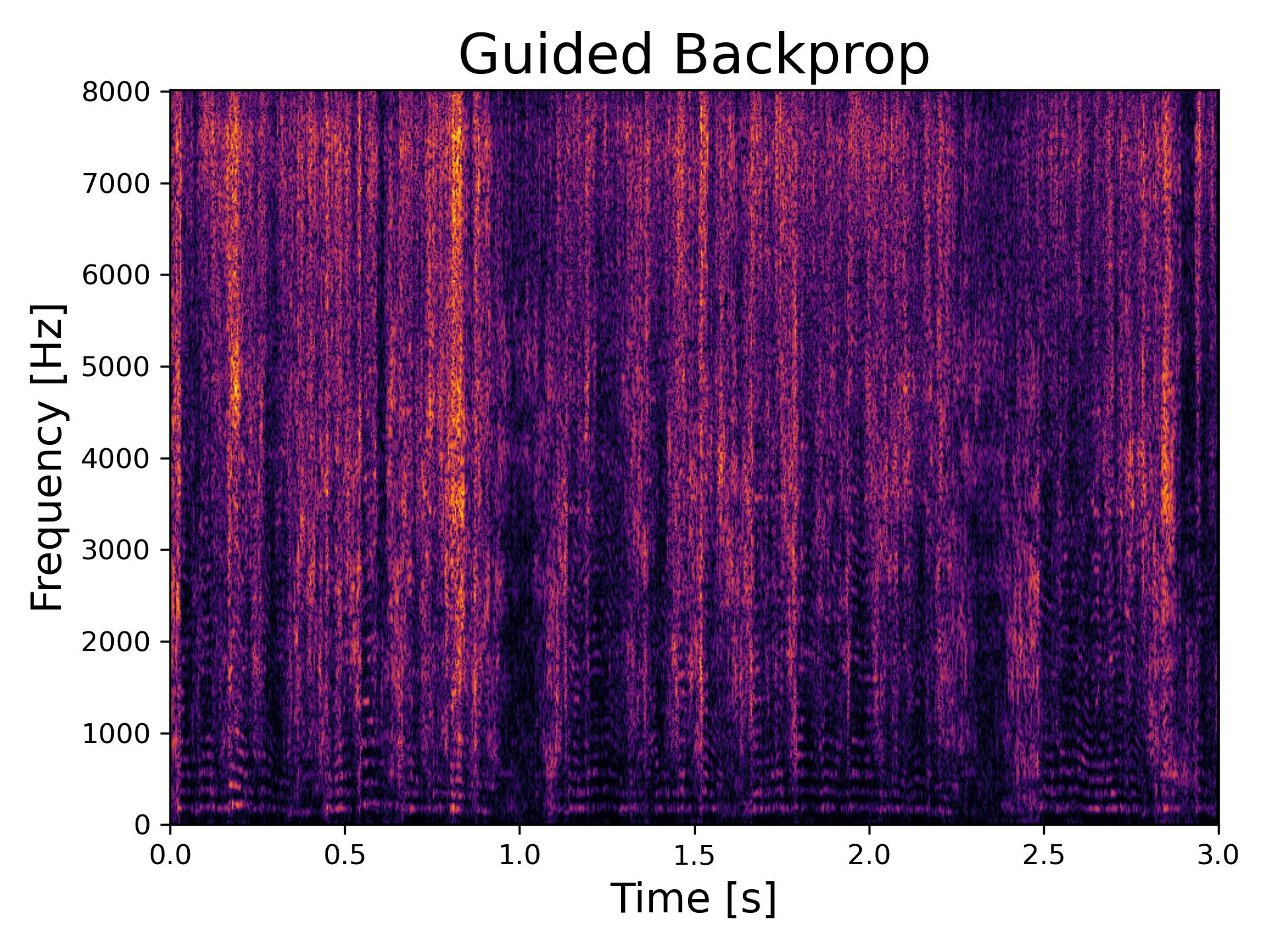

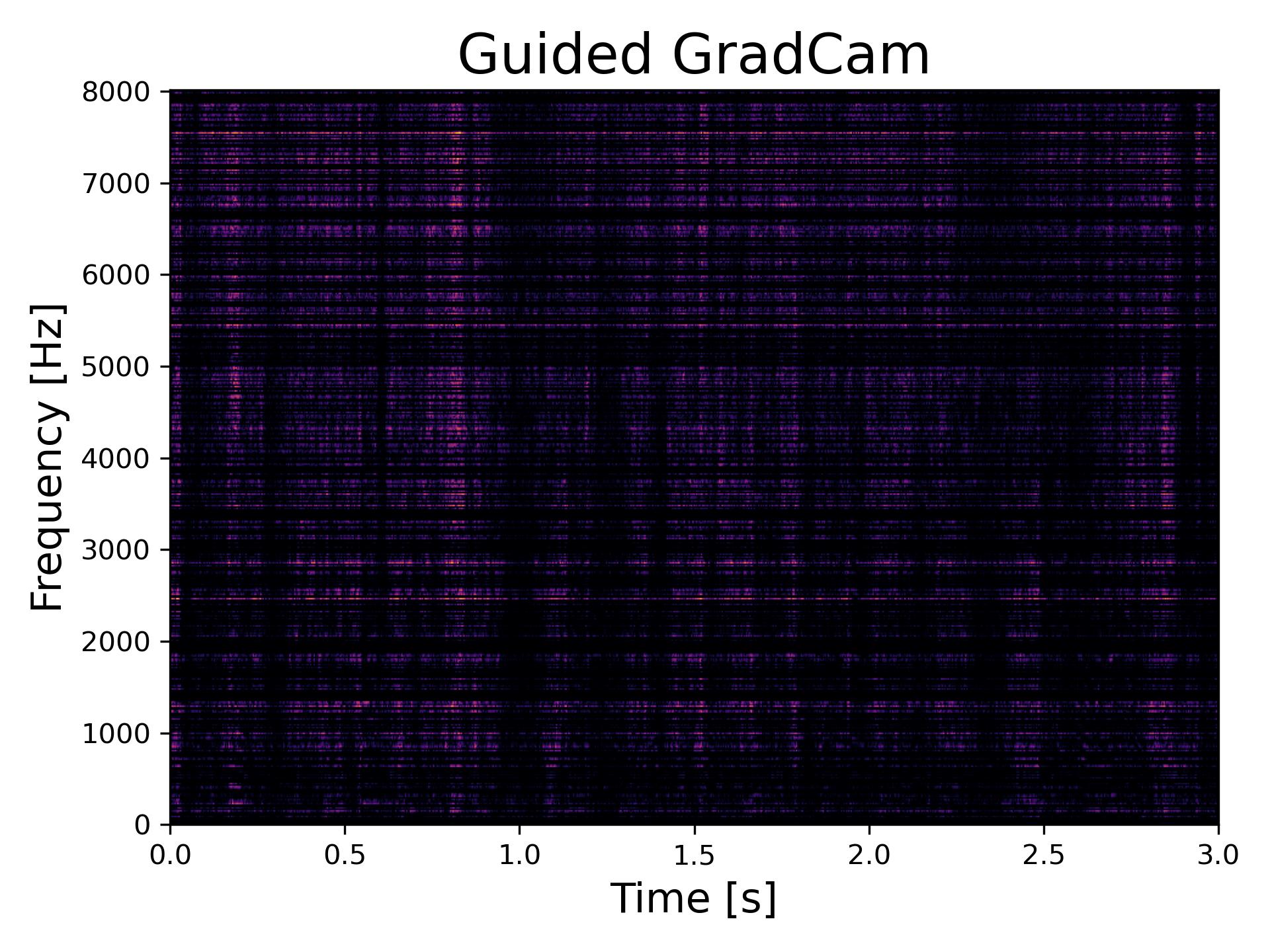

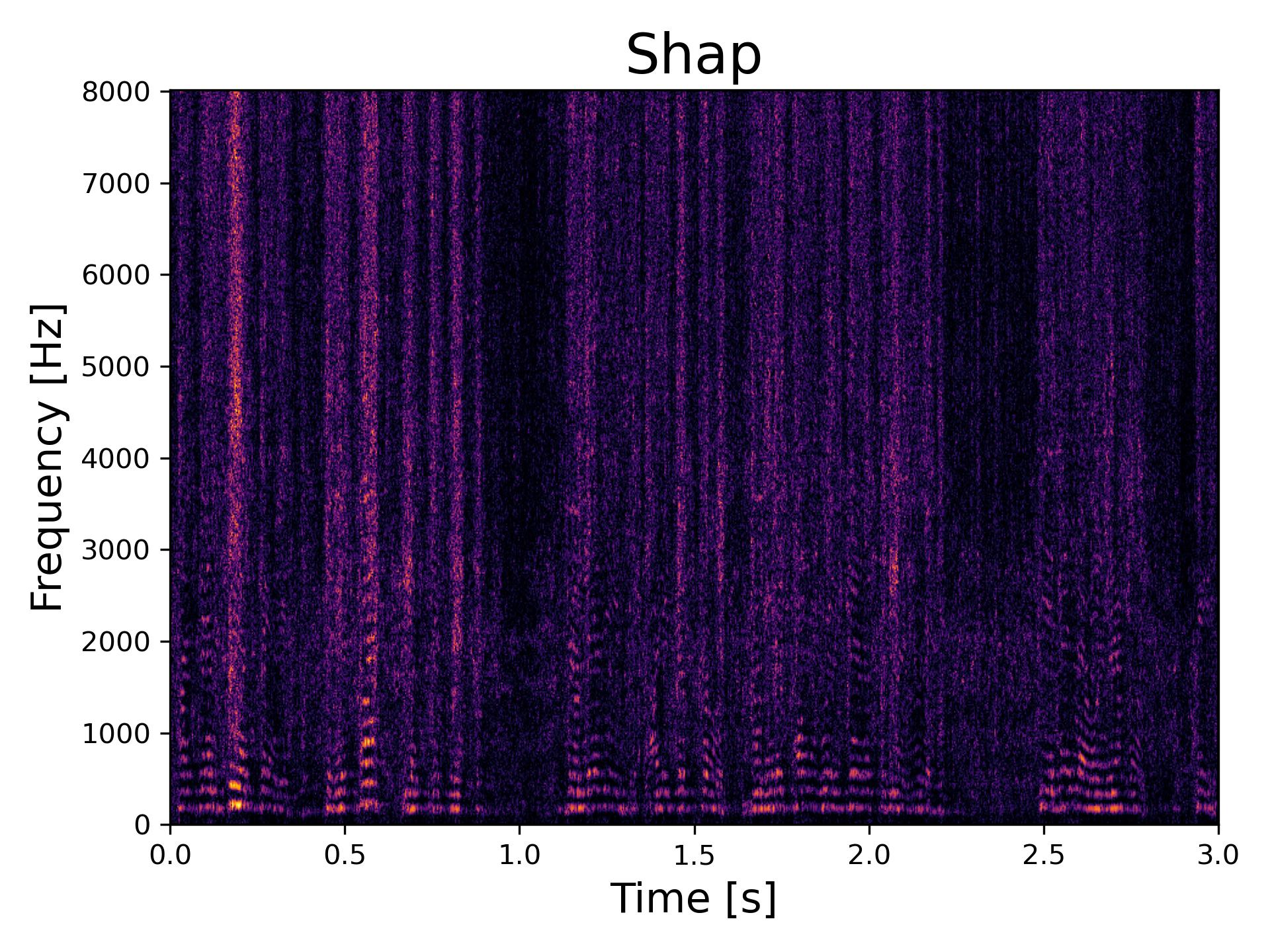

Speech impairments in Parkinson’s disease (PD) provide significant early indicators for diagnosis. While models for speech-based PD detection have shown strong performance, their interpretability remains underexplored. This study systematically evaluates several explainability methods to identify PD-specific speech features, aiming to support the development of accurate, interpretable models for clinical decision-making in PD diagnosis and monitoring. Our methodology involves (i) obtaining attributions and saliency maps using mainstream interpretability techniques, (ii) quantitatively evaluating the faithfulness of these maps, and of their combinations obtained via union and intersection, through a range of established metrics, and (iii) using an auxiliary classifier to assess how much information the saliency maps convey for PD detection. Our results reveal that, while explanations are aligned with the classifier, they often fail to provide valuable information for domain experts.
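To make step (ii) more concrete, here is a minimal sketch of how two saliency maps might be binarized, combined via union and intersection, and scored with a simple deletion-style faithfulness measure. Everything in it is an illustrative assumption rather than the paper's actual pipeline: the function names (`binarize`, `combine`, `faithfulness_drop`), the quantile threshold, the zero-masking strategy, and the toy classifier `toy_predict` are all hypothetical, and the specific metrics used in the study are not listed on this page.

```python
import numpy as np


def binarize(saliency, quantile=0.8):
    """Turn a real-valued saliency map into a binary mask.

    Assumption: bins at or above the given quantile count as salient.
    """
    threshold = np.quantile(saliency, quantile)
    return saliency >= threshold


def combine(mask_a, mask_b, how):
    """Combine two binary masks via union or intersection."""
    if how == "union":
        return np.logical_or(mask_a, mask_b)
    if how == "intersection":
        return np.logical_and(mask_a, mask_b)
    raise ValueError(f"unknown combination: {how!r}")


def faithfulness_drop(predict, spectrogram, mask):
    """Score drop when the bins marked salient are zeroed out.

    A larger drop suggests the mask covers regions the classifier relies on.
    """
    masked_out = np.where(mask, 0.0, spectrogram)
    return predict(spectrogram) - predict(masked_out)


def toy_predict(spectrogram):
    """Hypothetical stand-in classifier: sigmoid of the mean energy
    in the four lowest frequency rows."""
    band_energy = spectrogram[:4].mean()
    return 1.0 / (1.0 + np.exp(-band_energy))


# Toy input: 8 frequency rows x 10 time frames, constant energy.
spectrogram = np.ones((8, 10))

# Two hypothetical explanation masks covering overlapping frequency bands.
mask_a = np.zeros((8, 10), dtype=bool)
mask_a[0:4] = True
mask_b = np.zeros((8, 10), dtype=bool)
mask_b[2:6] = True

union_mask = combine(mask_a, mask_b, "union")
intersection_mask = combine(mask_a, mask_b, "intersection")

drop_union = faithfulness_drop(toy_predict, spectrogram, union_mask)
drop_intersection = faithfulness_drop(toy_predict, spectrogram, intersection_mask)
print(f"union drop: {drop_union:.3f}, intersection drop: {drop_intersection:.3f}")
```

In this toy setup the union mask removes the entire band the classifier depends on, so it produces a larger score drop than the intersection mask, which removes only part of that band. A high score of this kind only indicates agreement with the classifier; it says nothing about whether the highlighted regions are meaningful to a clinician, which is the gap the results above point to.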

Contributions in a nutshell:

- Experimental results demonstrate that explanations align with the classifier’s decisions.

- Explanations often fail to offer truly informative insights for domain experts.

- Existing methods may lack the interpretability required for practical applications.

- Results highlight the need for more effective explainability approaches.

Explanations

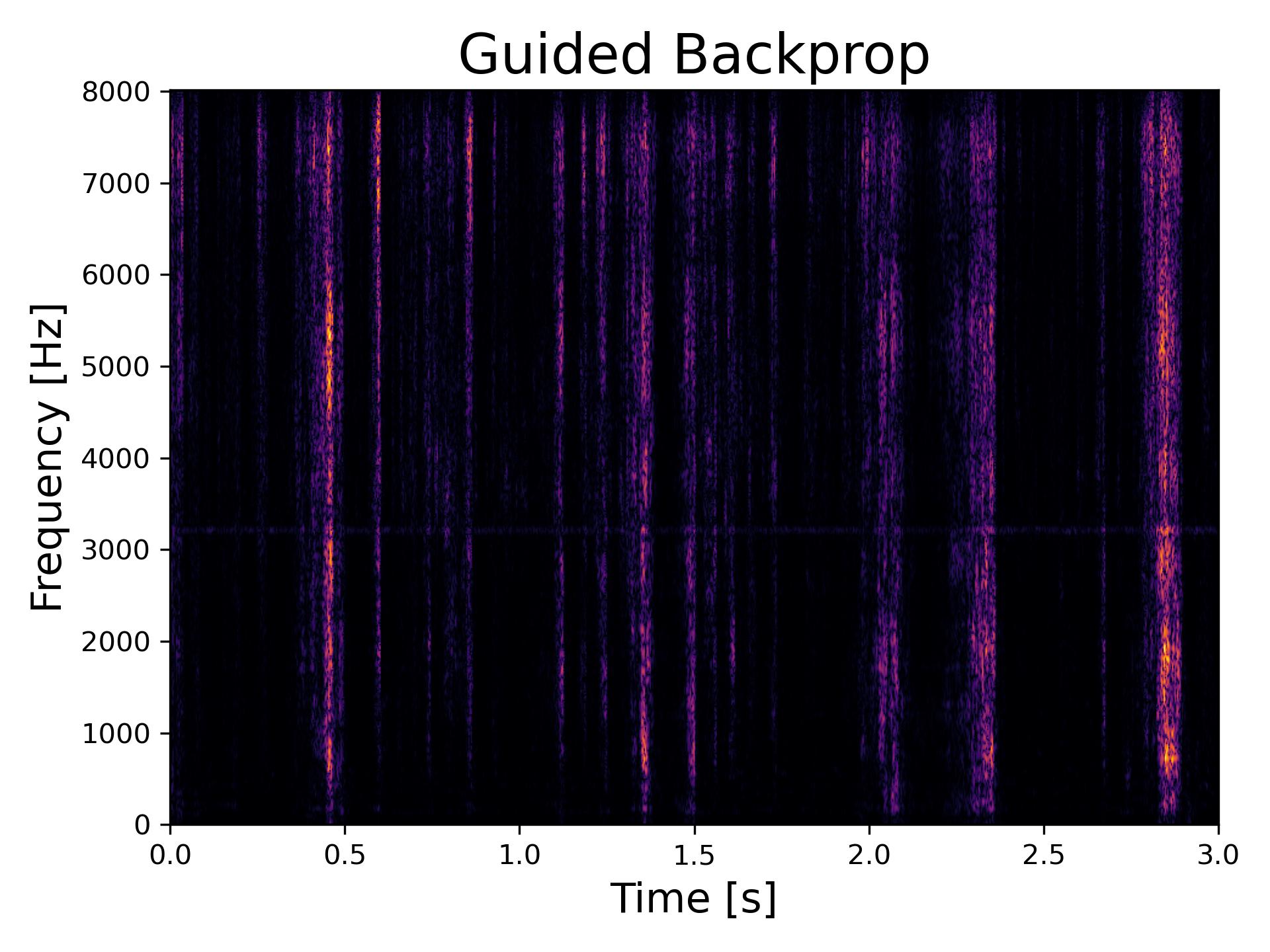

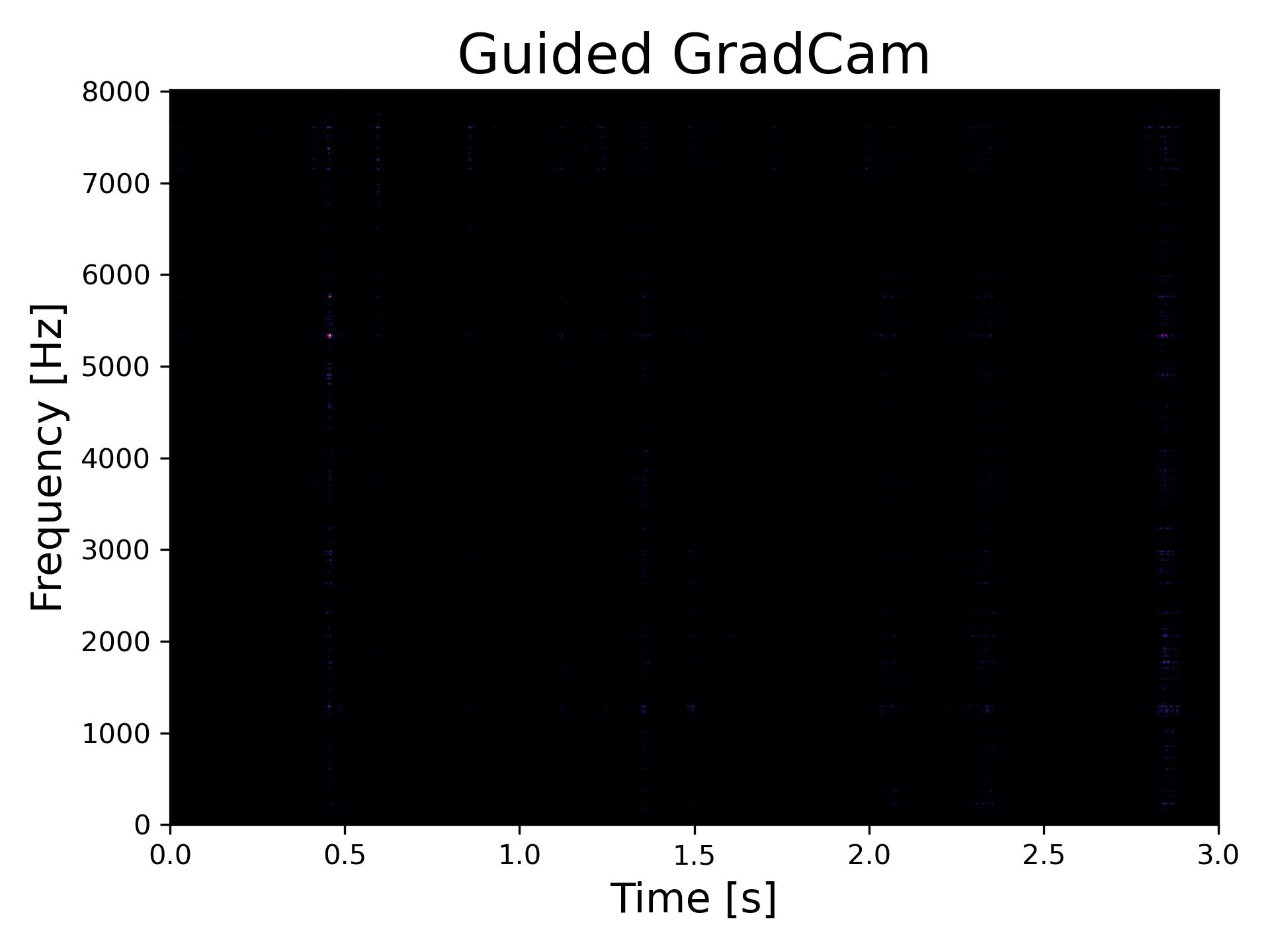

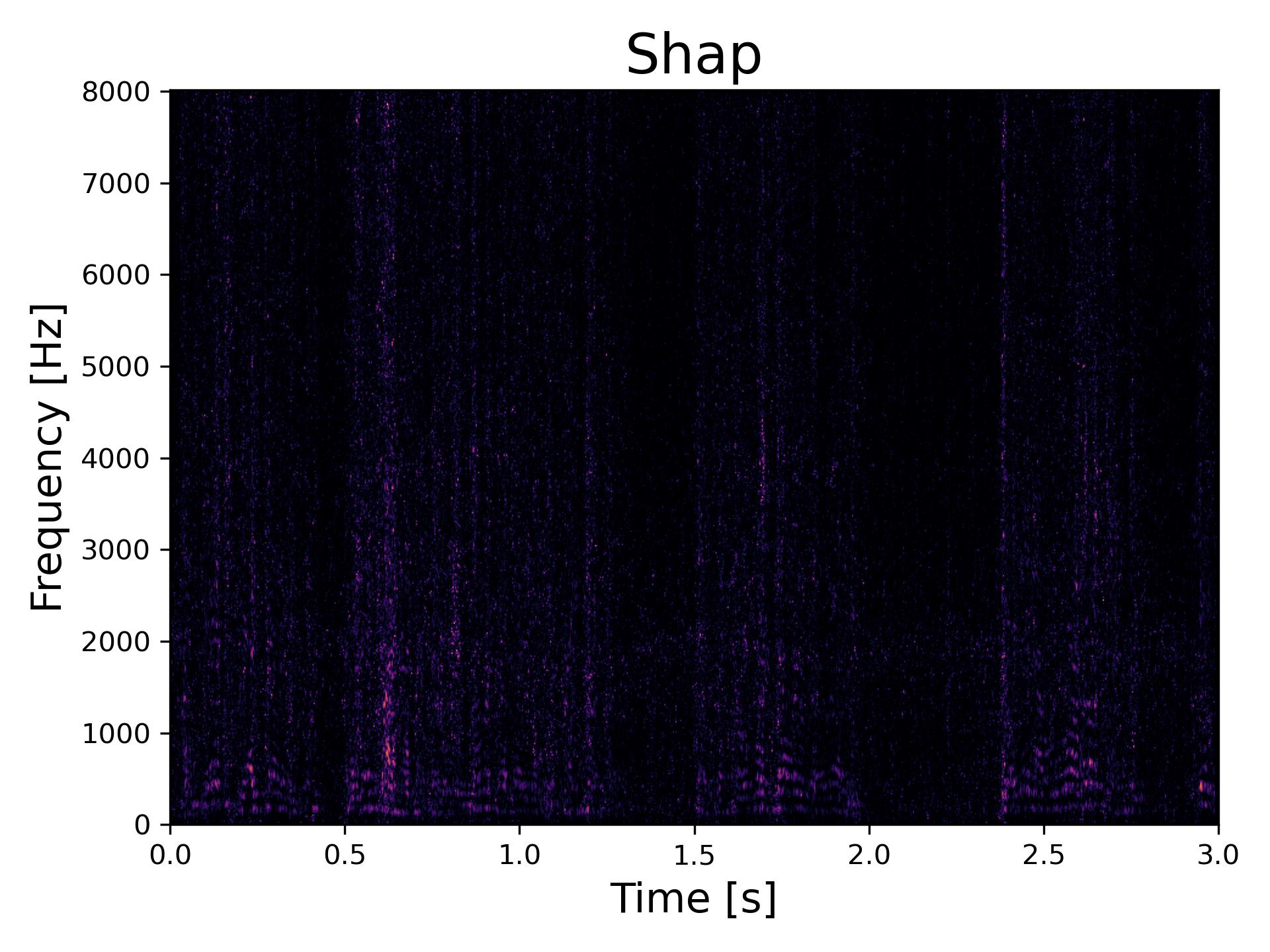

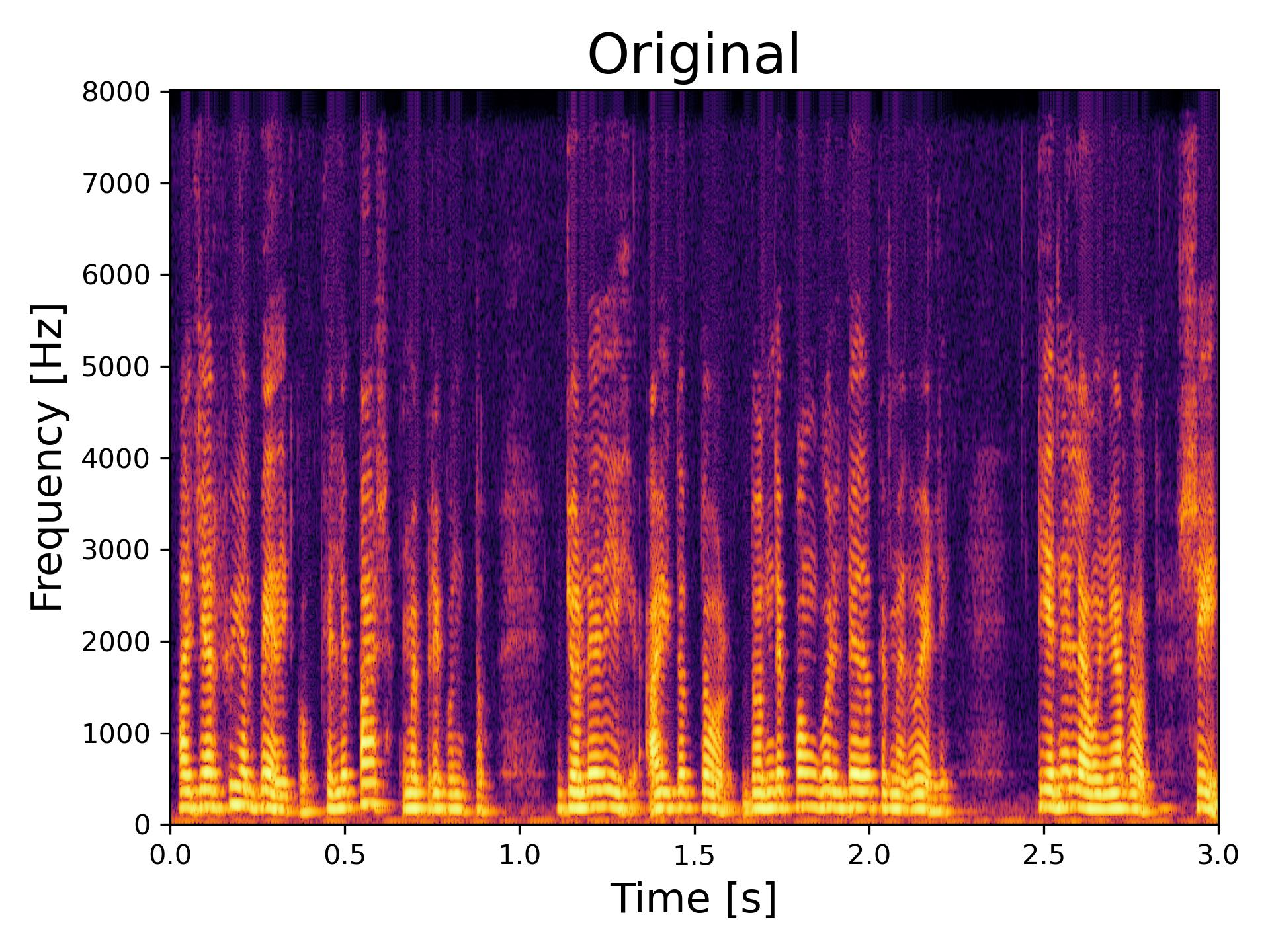

We provide explanations for samples generated from various speech tasks, including diadochokinetic (DDK) exercises, monologues, and reading exercises.

Diadochokinetic (DDK) Exercises Samples

Monologue Samples

Read Text